
Run one workflow dynamically with different parameters and schedule times

04-19-2023 02:59 AM

Can we run one workflow with different parameters and different schedule times, so that a single workflow can be executed for each set of parameters and we do not have to create the same workflow again and again? In other words, is there any way to drive a workflow dynamically?

04-19-2023 08:56 AM

Assuming that by "workflow" you mean jobs, then yes: parameter values can be supplied per run using the Jobs API:

https://learn.microsoft.com/en-us/azure/databricks/dev-tools/api/2.0/jobs
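A minimal Python sketch of the parameter part, assuming the 2.0 `jobs/run-now` endpoint from the linked docs, a personal access token, and workspace details in the `DATABRICKS_HOST` and `DATABRICKS_TOKEN` environment variables. The same job is triggered each time; only the `notebook_params` change.

```python
import json
import os
import urllib.request


def build_run_now_payload(job_id: int, notebook_params: dict) -> dict:
    """Build a Jobs API run-now request body; notebook_params values are sent as strings."""
    return {
        "job_id": job_id,
        # These values override the job's stored parameter defaults for this run only.
        "notebook_params": {str(k): str(v) for k, v in notebook_params.items()},
    }


def run_now(job_id: int, notebook_params: dict) -> dict:
    """POST the body to /api/2.0/jobs/run-now and return the parsed JSON response."""
    host = os.environ["DATABRICKS_HOST"].rstrip("/")  # e.g. https://<workspace-host>
    token = os.environ["DATABRICKS_TOKEN"]  # personal access token (assumed auth method)
    body = json.dumps(build_run_now_payload(job_id, notebook_params)).encode("utf-8")
    request = urllib.request.Request(
        f"{host}/api/2.0/jobs/run-now",
        data=body,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `run_now(123, {"region": "emea"})` followed by `run_now(123, {"region": "apac"})` would start two runs of the same job with different inputs; the job ID `123` and the `region` parameter are hypothetical placeholders.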

Scheduling is a different matter. It may be possible through the spark_submit_params that the API exposes, but that is not something I have tried. I ended up building a scheduling engine outside of Databricks. It is called by Data Factory and works out what to execute at a given invocation time.

Data Factory then passes the parameter values from the scheduling engine into Databricks through the Jobs API, and those values override the parameter values stored at the job level.
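The scheduling-engine idea can be sketched as a lookup table of (job, time, parameters) rows. This is a hypothetical illustration, not the poster's actual engine: the `ScheduleEntry` shape, the `"HH:MM"` time format, and the assumption that the caller (for example a Data Factory trigger) invokes the engine at the scheduled minutes are all made up for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass(frozen=True)
class ScheduleEntry:
    """One row of a hypothetical schedule table: run job_id with params at HH:MM on given weekdays."""
    job_id: int
    time_of_day: str  # "HH:MM", 24-hour clock
    params: dict = field(default_factory=dict)
    weekdays: frozenset = frozenset(range(7))  # Monday=0 ... Sunday=6


def due_entries(schedule, invocation_time: datetime):
    """Return the entries whose time and weekday match the invocation time (minute resolution)."""
    hhmm = invocation_time.strftime("%H:%M")
    return [
        entry
        for entry in schedule
        if entry.time_of_day == hhmm and invocation_time.weekday() in entry.weekdays
    ]
```

Each returned entry's `job_id` and `params` would then be sent to the Jobs API run-now endpoint, so one job definition can serve many schedules. Matching on exact minutes keeps the sketch short; a real engine would more likely select everything scheduled since its previous invocation.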

I am also assuming that the underlying code is generic and runs based on the parameter values provided.

04-28-2023 01:57 PM

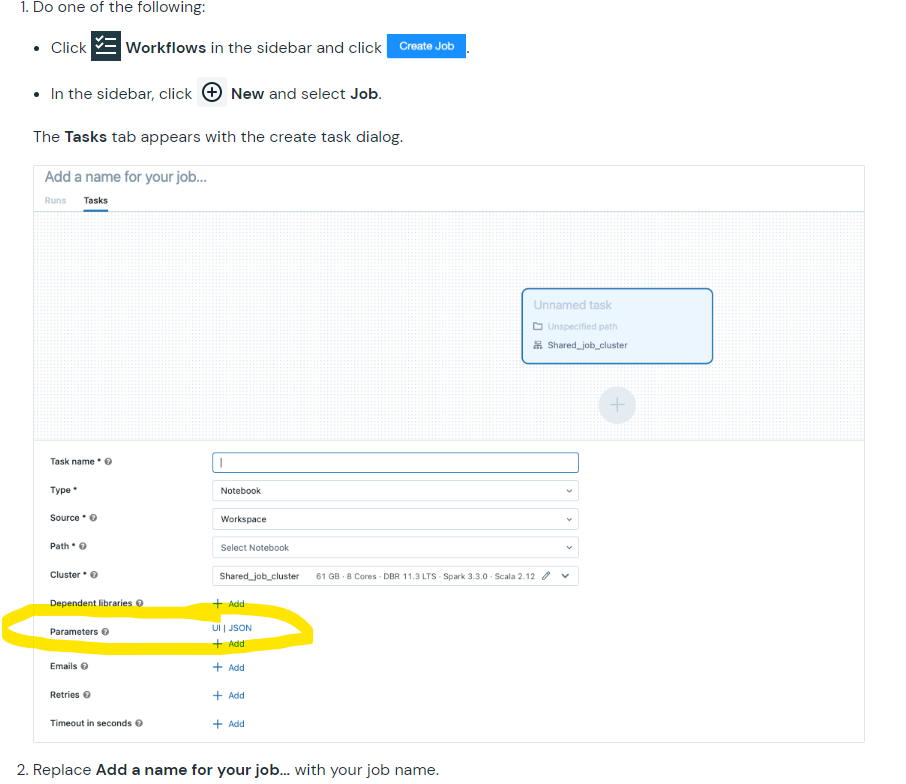

Hi, if you are using Databricks, you can use jobs:

https://docs.databricks.com/workflows/jobs/jobs.html

For example, you can create a notebook that takes its variables as parameters.
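As a rough sketch of such a notebook: parameters can be declared as widgets with `dbutils.widgets.text` and read with `dbutils.widgets.get`; values passed as `notebook_params` to a job run arrive as those widget values. The helper name `declare_and_get` and the `region`/`emea` names are hypothetical, and the fallback only exists so the snippet also runs outside Databricks, where `dbutils` is undefined.

```python
def declare_and_get(name: str, default: str) -> str:
    """Declare a text widget (if running in Databricks) and return its current value."""
    try:
        # dbutils is injected by the Databricks notebook runtime; it is not importable elsewhere.
        dbutils.widgets.text(name, default)  # noqa: F821
        return dbutils.widgets.get(name)  # noqa: F821
    except NameError:
        # Not running inside Databricks: fall back to the default value.
        return default


region = declare_and_get("region", "emea")
# ... generic logic that only depends on `region` goes here ...
```

The notebook itself stays the same; each job run (or each scheduled trigger) just supplies a different `region`.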

08-28-2023 09:53 AM

Could someone please provide a working example on the CLI side using the run-now command with JSON job parameters? Is this a bug in the CLI?

I am experiencing a problem similar to the post below and am currently on CLI v0.2.

08-29-2023 03:23 AM

Hi @DBXC, did you check these docs?

- [Docs: jobs-2.0-api](https://docs.databricks.com/workflows/jobs/jobs-2.0-api.html)

- [Docs: jobs-cli](https://docs.databricks.com/archive/dev-tools/cli/jobs-cli.html)

08-29-2023 02:10 PM

Update / Solved:

Using the CLI on Linux/macOS:

Send in the JSON with the job_id in it. Keys and values inside notebook_params must be quoted JSON strings:

```bash
databricks jobs run-now --json '{
  "job_id": <job-ID>,
  "notebook_params": {
    "<key>": "<value>",
    "<key>": "<value>"
  }
}'
```

Using the CLI on Windows:

Send in the same JSON with the job_id in it, but stringify it by escaping every inner double quote, as below:

```
databricks jobs run-now --json '{ \"job_id\": <job-ID>, \"notebook_params\": { \"<key>\": \"<value>\", \"<key>\": \"<value>\" } }'
```
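Because the quoting rules differ between shells, one option is to let a script build the JSON and pass it to the CLI as a single argument. A minimal Python sketch, assuming the `databricks` CLI is on PATH and accepts the `run-now --json` form from the solution above; the job ID and parameter names are placeholders.

```python
import json
import subprocess


def run_now_cli_args(job_id: int, notebook_params: dict) -> list:
    """Build the argv for `databricks jobs run-now --json ...` with a valid JSON body."""
    payload = {
        "job_id": job_id,
        # notebook_params keys and values are sent as JSON strings.
        "notebook_params": {str(k): str(v) for k, v in notebook_params.items()},
    }
    return ["databricks", "jobs", "run-now", "--json", json.dumps(payload)]


def run_now_cli(job_id: int, notebook_params: dict) -> subprocess.CompletedProcess:
    """Invoke the CLI without a shell, so the JSON is passed as one argument."""
    # With an argument list (no shell=True), Python does the platform-specific quoting,
    # so no manual escaping of the inner double quotes should be needed.
    return subprocess.run(
        run_now_cli_args(job_id, notebook_params),
        capture_output=True,
        text=True,
        check=True,
    )
```

Building the payload with `json.dumps` also guarantees the keys and values are properly quoted, which is the usual cause of the JSON being rejected.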

