Understanding Used Memory in Databricks Cluster

05-21-2023 08:39 PM

Hello,

I wonder if anyone could give me some insight into used memory and how I could change my code to "release" memory as it runs. I am using a Databricks notebook.

Basically, we need to run a query, create a Spark SQL DataFrame, and convert it to pandas (yes, I know pandas is not the best choice, but it will have to do for now). The code is set up as a loop that calls a function, which performs these steps for each query requested by the user. The number of queries may vary.

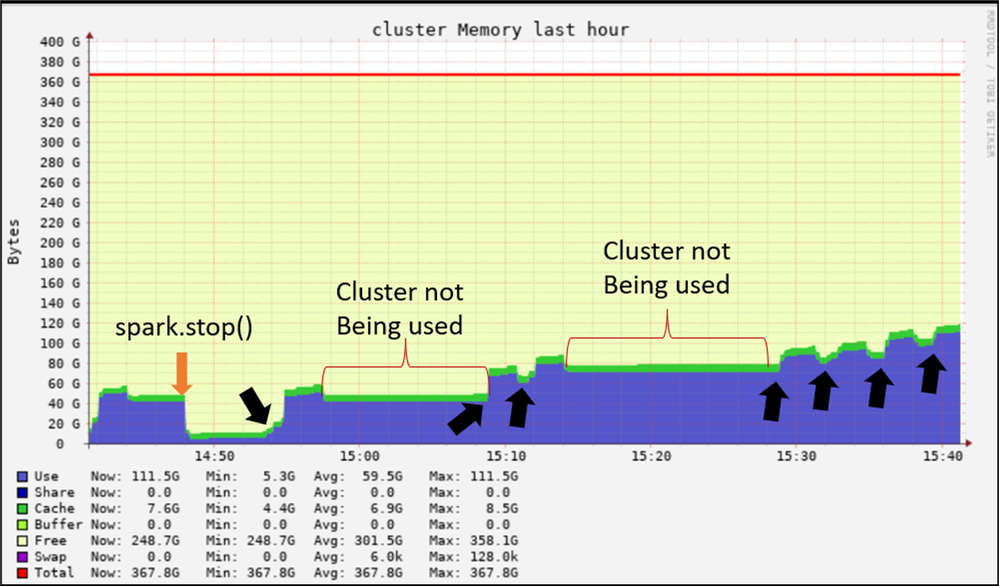

My question is this: every time the function is called, I see an increase in used memory. I expected the memory to go down after each loop iteration finishes, but it doesn't. It just keeps going up and is never released. When it reaches about 100-120 GB, it seems to start peaking and then dropping back to the 100 GB baseline. Here's a minimal example:

    import pandas as pd

    def create_query(query):
        query_spark_df = spark.sql(query)
        query_df_pd = query_spark_df.toPandas()
        query_df_pd['col1'] = query_df_pd['col1'].astype('Int16')
        query_df_pd['col2'] = query_df_pd['col2'].astype('int16')
        query_df_pd['col3'] = query_df_pd['col3'].astype('int32')
        # some other preprocessing steps
        #
        #
        return query_df_pd

    query_list = [query_1, query_2]

    for query in query_list:
        test = create_query(query)

I tried:

- Deleting variables with del and calling gc.collect()

- Assigning other values to the same variables after they were used, such as query_spark_df = []

- Some Spark options such as .unpersist(blocking = True) and spark.catalog.clearCache()

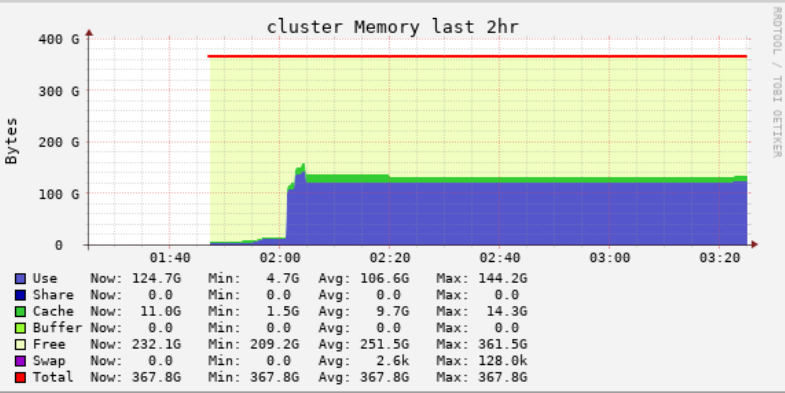

The problem is that after one day of users and us using the cluster, we have something that looks like this:

Is there any Spark option that I could add to my loop (or at least to the end of my code) that would clear the used memory? As I mentioned, gc.collect() has no effect. Should I use the functions differently? Is there any Python best practice that I am missing? Any help is appreciated.
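One way to narrow this down is to measure how much memory each converted frame actually occupies before and after the dtype casts. The following is a minimal sketch, using made-up data as a stand-in for the result of toPandas(); the helper name frame_mib and the sample values are hypothetical and not part of the original code.

```python
import gc

import pandas as pd


def frame_mib(df: pd.DataFrame) -> float:
    """Return the in-memory size of a pandas DataFrame in MiB."""
    return df.memory_usage(deep=True).sum() / 1024**2


# Hypothetical stand-in for query_spark_df.toPandas(); values fit in int16.
values = [i % 1000 for i in range(100_000)]
query_df_pd = pd.DataFrame({"col1": values, "col2": values, "col3": values})

before_mib = frame_mib(query_df_pd)

# Same casts as in the question.
query_df_pd["col1"] = query_df_pd["col1"].astype("Int16")
query_df_pd["col2"] = query_df_pd["col2"].astype("int16")
query_df_pd["col3"] = query_df_pd["col3"].astype("int32")

after_mib = frame_mib(query_df_pd)
print(f"before casts: {before_mib:.2f} MiB, after casts: {after_mib:.2f} MiB")

# Drop the only reference and ask Python to collect unreachable objects.
del query_df_pd
gc.collect()
```

This only accounts for pandas objects in the driver's Python process. Memory held by the JVM or by Spark caches is not directly controlled by del or gc.collect(), so a cluster-level memory chart may not drop even when these numbers do.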

Labels: Databricks Cluster

2 REPLIES


06-15-2023 11:03 AM

@Juliana Negrini - to clear the memory, could you please call the method below repeatedly until you see the memory usage decrease?

    def NukeAllCaching(tableName: Option[String] = None): Unit = {
      // Invalidate the Delta cache for the given table path, if one was passed
      tableName.foreach { path =>
        com.databricks.sql.transaction.tahoe.DeltaValidation.invalidateCache(spark, path)
      }
      spark.conf.set("spark.databricks.io.cache.enabled", "false")
      spark.conf.set("spark.databricks.delta.smallTable.cache.enabled", "false")
      spark.conf.set("spark.databricks.delta.stats.localCache.maxNumFiles", "1")
      spark.conf.set("spark.databricks.delta.fastQueryPath.dataskipping.checkpointCache.enabled", "false")
      com.databricks.sql.transaction.tahoe.DeltaLog.clearCache()
      spark.sql("CLEAR CACHE")
      sqlContext.clearCache()
    }

    NukeAllCaching()

The above solution is a kind of patch that needs to be called multiple times. The ideal approach is to analyze whether your code is written efficiently in terms of performance, memory usage, and usability.


06-15-2023 11:10 AM

@Juliana Negrini - with respect to your sample code, you can use Spark's distributed query capabilities to run the processing in Spark instead of pandas, so you don't have to switch between the pandas DataFrame and the Spark DataFrame.
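As a rough illustration of keeping the work in Spark, the type casts from the question could be pushed into the query itself rather than applied in pandas. This is a minimal sketch under assumptions: the helper wrap_with_casts and the table name my_table are hypothetical, and the SMALLINT and INT types are chosen to mirror the int16 and int32 casts in the original code.

```python
from typing import Mapping


def wrap_with_casts(query: str, casts: Mapping[str, str]) -> str:
    """Wrap `query` in an outer SELECT that casts the listed columns in Spark SQL.

    Only the columns named in `casts` are selected; `query` must not end
    with a semicolon because it is used as a subquery.
    """
    select_list = ", ".join(
        f"CAST({column} AS {sql_type}) AS {column}"
        for column, sql_type in casts.items()
    )
    return f"SELECT {select_list} FROM ({query}) AS q"


# Hypothetical query and column types mirroring the casts in the question.
casts = {"col1": "SMALLINT", "col2": "SMALLINT", "col3": "INT"}
wrapped = wrap_with_casts("SELECT col1, col2, col3 FROM my_table", casts)
print(wrapped)

# In a Databricks notebook, where `spark` is predefined, the result stays
# a distributed Spark DataFrame and is not collected to the driver:
# query_spark_df = spark.sql(wrapped)
```

Further preprocessing steps would then be expressed as Spark SQL or DataFrame operations on query_spark_df, and toPandas() would be reserved for results small enough to fit comfortably on the driver.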
