06-17-2025 06:09 AM - edited 06-17-2025 06:11 AM

Hi Databricks Community. I need some suggestions on my issue. We are using a Databricks Asset Bundle to deploy our forecasting repo and AWS nodes to run the forecast jobs. We built a proper workflow.yml file to trigger the jobs.

- I am using a single-node cluster because our forecasting module is currently pandas based only (no Spark or distributed processing, but we do use joblib Parallel).

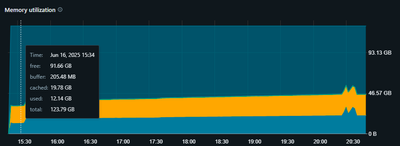

- So far we have used an r6i.xlarge node (32 GB & 4 cores). On this node our code uses 28 - 30 GB and leaves the rest free. This job took 15 hours to complete.

- Now I've switched to r6i.4xlarge (128 GB & 16 cores) and expected it to run faster than on r6i.xlarge, BUT WHAT I OBSERVED is that it still uses only around 30-31 GB and the other 90 GB stays free. I expected the job to expand its memory use and complete faster.
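A pandas process only allocates what its data needs, so extra RAM on a bigger node stays idle unless more work runs at the same time. The sketch below shows one way to size the number of parallel workers from both the core count and a memory budget. It uses only the standard library; `pick_n_workers`, `forecast_one`, and the 8 GB per-worker figure are hypothetical and not taken from the job above. With joblib, the resulting number would be passed as `n_jobs` to `Parallel`.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def pick_n_workers(total_mem_gb, per_worker_mem_gb, cpu_count=None):
    """Return the largest worker count that fits both cores and memory."""
    cpus = cpu_count or os.cpu_count() or 1
    # How many workers fit in memory if each one needs per_worker_mem_gb.
    by_memory = max(1, int(total_mem_gb // per_worker_mem_gb))
    return max(1, min(cpus, by_memory))


def forecast_one(series_id):
    # Hypothetical stand-in for fitting one pandas-based forecast.
    return series_id, sum(i * i for i in range(10_000))


def run_all(series_ids, n_workers):
    # Each worker is a separate process, so total memory use grows
    # roughly with the number of workers running at once.
    with ProcessPoolExecutor(max_workers=n_workers) as pool:
        return dict(pool.map(forecast_one, series_ids))


if __name__ == "__main__":
    # Assumed figures: 128 GB node, about 8 GB peak per worker.
    n_workers = pick_n_workers(total_mem_gb=128, per_worker_mem_gb=8)
    results = run_all(range(32), n_workers)
    print(f"ran {len(results)} forecasts on {n_workers} workers")
```

If the worker count is hard-coded (for example to 4 from the smaller node), a larger instance would show the same memory footprint and a similar runtime.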

Below are the workflow and cluster settings I am using. Let me know if something needs to be changed or tuned. Tagging @Shua42, because you also helped me before. Thanks in advance.

    dev:
      resources:
        clusters:
          dev_cluster: &dev_cluster
            num_workers: 0
            kind: CLASSIC_PREVIEW
            is_single_node: true
            spark_version: 14.3.x-scala2.12
            node_type_id: r6i.4xlarge
            custom_tags:
              clusterSource: ts-forecasting-2
              ResourceClass: SingleNode
            data_security_mode: SINGLE_USER
            enable_elastic_disk: true
            enable_local_disk_encryption: false
            autotermination_minutes: 20
            docker_image:
              url: "*****.amazonaws.com/dev-databricks:retailforecasting-latest"
            aws_attributes:
              availability: SPOT
              instance_profile_arn: ****
              ebs_volume_type: GENERAL_PURPOSE_SSD
              ebs_volume_count: 1
              ebs_volume_size: 50
            spark_conf:
              spark.databricks.cluster.profile: singleNode
              spark.memory.offHeap.enabled: false
              spark.driver.memory: 4g

06-22-2025 11:34 PM

Hi @harishgehlot_03

Good day!

May I know how long the job took in the second case, on the r6i.4xlarge instance type?

06-22-2025 11:41 PM

06-24-2025 02:14 AM

Hi @Raghavan93513, please let me know if there is any spark.conf setting, or anything else, that would help the job use a larger share of the memory instead of limiting itself. Note: this is a pandas workflow (we are not using Spark so far).
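Settings such as `spark.driver.memory` size the Spark JVM, while pandas and joblib run in Python processes outside that heap, so a first step may be to check what the Python process itself can see on the node. The following is a minimal diagnostic sketch, assuming a Linux node with `/proc/meminfo`; the helper names `visible_cpus` and `total_memory_gb` are hypothetical.

```python
import os


def visible_cpus():
    """Number of CPUs this process is allowed to run on."""
    # sched_getaffinity is Linux-only and respects CPU pinning,
    # which os.cpu_count() does not.
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


def total_memory_gb(meminfo_path="/proc/meminfo"):
    """Total RAM in GiB as reported by the kernel, or None if unavailable."""
    try:
        with open(meminfo_path) as fh:
            for line in fh:
                if line.startswith("MemTotal:"):
                    # The value is reported in kB.
                    return int(line.split()[1]) / 1024 ** 2
    except OSError:
        return None
    return None


if __name__ == "__main__":
    print("visible CPUs:", visible_cpus())
    print("total memory (GiB):", total_memory_gb())
```

Running this at the start of the job on both node types would show whether the larger instance's cores and memory are actually visible to the Python code before any tuning.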