Maximizing Resource Utilisation with Cluster Reuse

Sanjeev_Kumar


03-25-2024

06:40 AM

Welcome to the fourth instalment of our blog series exploring Databricks Workflows, a powerful product for orchestrating data processing, machine learning, and analytics pipelines on the Databricks Data Intelligence Platform.

In our previous blog, Basics of Databricks Workflows - Part 3: The Compute Spectrum, we covered the various Databricks compute options and shared guidelines for configuring resources based on the workload. In this blog, we look at cluster reuse, a feature that improves resource utilization and streamlines workflow execution.

Table of Contents

- Feature: Cluster Reuse

- What is it?

- Why use it?

- When to use it?

- Alternative to Cluster reuse

- Employ Cluster Reuse

- Using UI - Modifying tasks in an existing job

- Using UI - Creating a new job cluster for re-use

- Programmatically

- Considerations

- Conclusion

Feature: Cluster Reuse

What is it?

Cluster reuse in Databricks Workflows is a feature that allows for more efficient use of compute resources when running multiple tasks within a job, leading to cost savings.

Previously, each task within a Databricks job would spin up its own cluster, adding time and cost overhead due to cluster startup times and potential underutilization during task execution. Now, with cluster reuse, a single cluster can be shared across multiple tasks within the same job. This provides better and more efficient resource utilization and minimizes the time taken for tasks to commence. In other words, by utilizing cluster reuse, you can reduce the overhead of creating and terminating clusters within a job.
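As a rough illustration of the startup overhead described above, the sketch below compares the total time spent waiting for clusters to start when every task launches its own cluster against the same job sharing one cluster. The five-minute startup time and the four-task job are assumed numbers chosen for illustration, not Databricks measurements, and the helper name is hypothetical.

```python
def total_startup_minutes(num_tasks: int, startup_minutes: float, reuse: bool) -> float:
    """Total time spent waiting for clusters to start in one job run.

    Assumes tasks run sequentially and every cluster takes the same time to start.
    """
    # With reuse, at most one cluster is started; without it, one per task.
    clusters_started = min(num_tasks, 1) if reuse else num_tasks
    return clusters_started * startup_minutes


# Hypothetical job: 4 sequential tasks, 5 minutes per cluster start (assumed values).
without_reuse = total_startup_minutes(4, 5.0, reuse=False)
with_reuse = total_startup_minutes(4, 5.0, reuse=True)
saved = without_reuse - with_reuse

print(f"Startup wait without reuse: {without_reuse} min, with reuse: {with_reuse} min")
```

Under these assumptions the saving grows linearly with the number of tasks that share the cluster.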

Let’s explore this feature in more detail by revisiting the use-case outlined in one of our earlier blog posts in this series: Basics of Databricks Workflows - Part 1: Creating your pipeline.

Example Use Case: Product Recommender

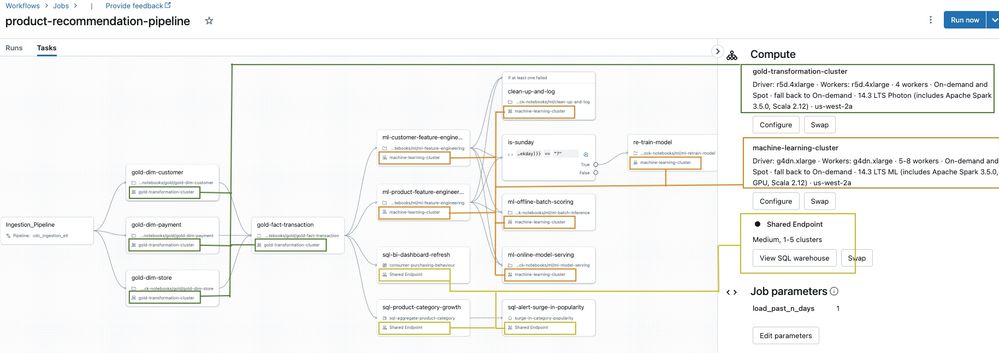

A retailer aims to create a personalized shopping experience by implementing a recommender system. The Data Engineering team has established a robust data pipeline, while the Data Analysts utilize dashboards and SQL reports to gain insights into customer behavior. The Data Science and ML team focuses on dynamic profiling, model retraining, and real-time recommendations.

Below we explain how cluster reuse is being leveraged by the three sections in the example workflow: data engineering, data warehousing & BI, and machine learning.

- Data Engineering: Data Engineers can build DLT pipelines or leverage Notebooks for their ETL. The first task is an ETL process performed by a DLT pipeline. One of the many advantages of DLT is that it provides and optimizes for cluster reuse automatically. DLT will spin up its own job cluster, reuse it for all tasks and shut it down as soon as all of its tasks finish. Since the DLT pipeline creates its own job cluster, a DLT task cannot reuse a cluster used by other tasks and other tasks cannot reuse DLT’s job cluster.

If, for some reason, you cannot use DLT for your ingestion pipeline, you can achieve the same functionality with cluster reuse in Workflows. With this scenario in mind, the next step uses separate tasks to transform the data into Gold tables. Since we know the complexity of the transformations and their compute requirements, we created a job cluster called “gold-transformation-cluster” and reused it across all the gold tasks.

We tailored the cluster configuration to the requirements of the gold-layer tasks. In this case, we use the r5d.4xlarge VM type with 4 workers and DBR 14.3 LTS with Photon acceleration.

- Data Warehousing and BI: The DWH and BI teams use DBSQL warehouses to refresh their queries. With SQL warehouses, you can reuse the same warehouse across tasks. Here we use a Medium SQL warehouse with autoscaling set to 1-5 for all the BI-related tasks, leveraging all the benefits of a SQL warehouse.

- Machine Learning and AI: ML teams will very likely have different compute requirements, both compared with other data teams and across their own tasks. For example, some tasks within the ML pipeline are more GPU-intensive than others. As such, we can define tailored clusters and reuse them depending on the task type. In this example we use DBR 14.3 LTS ML (includes Apache Spark 3.5.0, GPU, Scala 2.12), the g4dn.xlarge VM type, and a cluster that autoscales from 5 to 8 workers.

The example above shows one way to use cluster reuse. It gives us the flexibility to create a cluster tailored to the needs of a group of tasks or of all the tasks in a job.

You can configure tailored clusters and reuse them depending on task types like:

- Data Engineering Clusters: Specifically configured for data ingestion tasks, reducing cluster initialization time for each task.

- GPU Clusters: Tailored for ML tasks, efficiently utilizing GPU resources and decreasing the overall job latency.
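A minimal sketch of how such tailored clusters might be declared, written as a plain Python dictionary in the shape of a Jobs API job definition. The node types, worker counts and Photon setting come from the example above. The `spark_version` strings, the job name, the `ml-gpu-cluster` key, the task keys and the notebook paths are assumptions for illustration and should be checked against your own workspace.

```python
from collections import Counter

job_definition = {
    "name": "product_recommender",  # hypothetical job name
    "job_clusters": [
        {
            "job_cluster_key": "gold-transformation-cluster",
            "new_cluster": {
                "spark_version": "14.3.x-scala2.12",  # assumed version key for DBR 14.3 LTS
                "runtime_engine": "PHOTON",
                "node_type_id": "r5d.4xlarge",
                "num_workers": 4,
            },
        },
        {
            "job_cluster_key": "ml-gpu-cluster",  # hypothetical key
            "new_cluster": {
                "spark_version": "14.3.x-gpu-ml-scala2.12",  # assumed version key for DBR 14.3 LTS ML (GPU)
                "node_type_id": "g4dn.xlarge",
                "autoscale": {"min_workers": 5, "max_workers": 8},
            },
        },
    ],
    # Hypothetical tasks: each one points at a cluster by its key.
    "tasks": [
        {"task_key": "gold_orders", "job_cluster_key": "gold-transformation-cluster",
         "notebook_task": {"notebook_path": "/Workspace/etl/gold_orders"}},
        {"task_key": "gold_customers", "job_cluster_key": "gold-transformation-cluster",
         "notebook_task": {"notebook_path": "/Workspace/etl/gold_customers"}},
        {"task_key": "train_model", "job_cluster_key": "ml-gpu-cluster",
         "notebook_task": {"notebook_path": "/Workspace/ml/train_model"}},
        {"task_key": "batch_scoring", "job_cluster_key": "ml-gpu-cluster",
         "notebook_task": {"notebook_path": "/Workspace/ml/batch_scoring"}},
    ],
}

# Count how many tasks share each job cluster.
tasks_per_cluster = Counter(task["job_cluster_key"] for task in job_definition["tasks"])
print(dict(tasks_per_cluster))
```

Tasks that name the same `job_cluster_key` share one cluster; each key used in a task must match an entry in `job_clusters`.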

When using the control flow “Run Job” as a task, you call another workflow from the current workflow job. In this case, cluster re-use cannot be leveraged from the parent workflow. The child workflow will have its own cluster.

Why use it?

Unlock the full potential of Databricks Workflows with the myriad of advantages offered by adopting cluster reuse:

- Reduce job execution times: Streamline your workflows by eliminating the cluster creation overhead, significantly shortening the time it takes for jobs to complete. This leads to faster data processing, enabling quicker insights and decision-making.

- Lower cluster costs: By sharing clusters, the overall number of clusters required is reduced, leading to lower cloud resource expenses. This cost-effectiveness becomes more pronounced for frequently running jobs.

- Tailored cluster configurations: You can create tailored clusters and share them among tasks with similar resource needs.

- Enhance resource utilization for parallel tasks: Running multiple tasks concurrently on a single, appropriately sized cluster will maximize resource utilization, minimize idle time and optimize cloud spending.

- Improve cluster lifecycle management: Databricks Workflows automatically manages the lifecycle of clusters, including provisioning, scheduling, and monitoring. This simplifies cluster management and reduces the operational overhead.

When to use it?

Below are a few scenarios where cluster reuse will help improve cost and performance efficiency:

- Similar Type and Size of Workload: Tasks within a job, preferably, should have similar resource requirements to ensure optimal cluster utilization.

- Frequent Execution: Cluster reuse shines for jobs containing multiple tasks that run repeatedly, as it eliminates the constant creation and destruction of clusters.

- Moderate Isolation Needs: Strike the right balance between resource sharing and isolation. While cluster reuse promotes collaborative resource utilization, tasks should maintain a moderate level of isolation to prevent conflicts.
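One way to apply the first criterion is to group tasks by resource profile before deciding which ones share a cluster. The sketch below is a hypothetical planning helper, not a Databricks API. The profile labels and the `<profile>_job_cluster` naming scheme are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def group_tasks_by_profile(task_profiles: Dict[str, str]) -> Dict[str, List[str]]:
    """Map a job cluster key to the tasks that would share that cluster.

    task_profiles maps each task key to a resource profile label; tasks with
    the same label are assumed to have similar resource requirements.
    """
    groups: Dict[str, List[str]] = defaultdict(list)
    for task_key, profile in task_profiles.items():
        groups[f"{profile}_job_cluster"].append(task_key)
    return dict(groups)


# Hypothetical profiles for a four-task pipeline.
task_profiles = {
    "Silver": "ingestion",
    "Gold": "ingestion",
    "ML_Featurisation": "ml",
    "ML_Scoring": "ml",
}

cluster_assignment = group_tasks_by_profile(task_profiles)
print(cluster_assignment)
```

A profile that ends up with a single task gains little from reuse, which can be a hint to merge it with a similar profile or to give that task its own cluster.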

Alternative to Cluster reuse

Before the cluster reuse feature was introduced, multi-task jobs used all-purpose clusters to achieve zero startup time for subsequent tasks. This introduced some challenges. The table below lists the challenges of using all-purpose clusters and shows how reusing job clusters resolves them.

| | Option 1 (all-purpose cluster) | Option 2 (job cluster reuse) |
| --- | --- | --- |
| Cost | Higher | Lower (job cluster rate) |
| Isolation from other tasks in job | None | No isolation for tasks on the same cluster. Note: With Unity Catalog, isolation within tasks can be achieved by setting the right security mode. |
| Isolation from other jobs | No | Yes |

Note: You can use other orchestrators, such as Azure Data Factory (ADF) or Airflow, to run jobs in Databricks, but these tools cannot leverage the job cluster reuse feature.
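In a job definition, the difference between the two options comes down to which field a task uses to name its compute: `existing_cluster_id` for an all-purpose cluster, or `job_cluster_key` for a shared job cluster. The sketch below shows, on plain dictionaries, how task settings might be switched from one to the other. The helper name, cluster ID, task keys and notebook paths are hypothetical.

```python
import copy
from typing import Any, Dict, List


def move_to_shared_job_cluster(tasks: List[Dict[str, Any]], job_cluster_key: str) -> List[Dict[str, Any]]:
    """Return copies of the tasks with all-purpose cluster references replaced.

    Only tasks that reference an all-purpose cluster via existing_cluster_id
    are changed; other tasks are copied unchanged.
    """
    migrated = []
    for task in tasks:
        new_task = copy.deepcopy(task)
        if "existing_cluster_id" in new_task:
            del new_task["existing_cluster_id"]
            new_task["job_cluster_key"] = job_cluster_key
        migrated.append(new_task)
    return migrated


# Hypothetical tasks that currently run on an all-purpose cluster.
old_tasks = [
    {"task_key": "Silver", "existing_cluster_id": "0123-456789-abcdefgh",
     "notebook_task": {"notebook_path": "/Workspace/etl/Ingestion_Silver"}},
    {"task_key": "Gold", "existing_cluster_id": "0123-456789-abcdefgh",
     "notebook_task": {"notebook_path": "/Workspace/etl/Ingestion_Gold"}},
]

new_tasks = move_to_shared_job_cluster(old_tasks, "ingestion_job_cluster")
print([t["job_cluster_key"] for t in new_tasks])
```

The job definition also needs a matching `job_clusters` entry for the chosen key before the migrated tasks can run.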

Employ Cluster Reuse

Using UI - Modifying tasks in an existing job

To modify tasks to employ cluster reuse in an existing Databricks Workflow pipeline, follow these steps:

- Open the job and select the task(s) you wish to amend

- Under “Compute”, choose an existing job cluster, if you already have one defined.

Using UI - Creating a new job cluster for re-use

- If you do not have an existing Job cluster defined, you can create a new one from the UI. To learn how to do this, follow the steps outlined in our previous blog: Creating your first pipeline - Creating your first task.

- First, when creating a new task or editing an existing one, open the ‘Compute’ dropdown and select ‘Add new classic job cluster’.

- Next, fill in the details of the desired cluster configuration. This can be based on the requirements of your particular task or job, or you can create a general T-shirt-sized cluster configuration.

- Choose the newly configured cluster when adding a task to the job.

Programmatically

You can automate operations in Databricks accounts, workspaces, and related resources with the Databricks SDK for Python. The sample code below shows you how you can create a workflow and configure it to reuse a job cluster.

It configures two ingestion tasks, Ingestion_Silver and Ingestion_Gold, which reuse the same job cluster called ‘ingestion_job_cluster’, and two ML tasks, ML_Feature_Engineering and ML_Batch_Scoring, which reuse another job cluster called ‘ml_job_cluster’. Also notice that the ingestion cluster uses an existing cluster policy, while the ML cluster defines its own custom configuration.

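Code summary

| Order | Workflow Task | Cluster | Cluster Configuration & Policy |
| --- | --- | --- | --- |
| 1 | Ingestion Silver | Ingestion_Job_Cluster | Existing cluster policy ID: E0631F5C0D0006D6 |
| 2 | Ingestion Gold | Ingestion_Job_Cluster | Existing cluster policy ID: E0631F5C0D0006D6 |
| 3 | ML Feature Engineering | ML_Job_Cluster | Newly defined cluster; latest DBR; autoscale: 1-2 nodes |
| 4 | ML Batch Scoring | ML_Job_Cluster | Newly defined cluster; latest DBR; autoscale: 1-2 nodes |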

    # Install the Databricks SDK for Python (0.17.0 was the latest version at the time of writing)
    !pip install --upgrade databricks-sdk==0.17.0

    # Imports
    from databricks.sdk import WorkspaceClient
    from databricks.sdk.service.compute import ClusterSpec, AutoScale
    from databricks.sdk.service.jobs import Task, NotebookTask, JobCluster, TaskDependency

    # Create the workspace client (replace the placeholder host and token with your own)
    w = WorkspaceClient(
        host='https://xxxxxxxxxx.cloud.databricks.com/',
        token='dapixxxxxxxxxxxxxxxxxx')

    # Notebook paths
    base_path = f'/Users/{w.current_user.me().user_name}'
    ingestion_silver = f'{base_path}/Ingestion_Silver'
    ingestion_gold = f'{base_path}/Ingestion_Gold'
    ml_featurisation = f'{base_path}/ML_Featurisation'
    ml_scoring = f'{base_path}/ML_Scoring'

    # Cluster spec example using a custom config.
    # The worker count is controlled by autoscale, so num_workers is not set.
    latest_runtime = w.clusters.select_spark_version(latest=True)
    cluster_spec_custom = ClusterSpec(
        node_type_id='i3.xlarge',
        spark_version=latest_runtime,
        autoscale=AutoScale(
            min_workers=1,
            max_workers=2
        ))

    # Cluster spec example using an existing cluster policy
    cluster_spec_using_policy = ClusterSpec(
        policy_id="E0631F5C0D0006D6",
        apply_policy_default_values=True
    )

    # Create the job
    created_job = w.jobs.create(
        name='product_recommendation_pipeline',
        tasks=[
            # Define all tasks
            Task(description="Ingestion_Silver",
                 # Use a job cluster by referring to its user-defined key
                 job_cluster_key="ingestion_job_cluster",
                 notebook_task=NotebookTask(notebook_path=ingestion_silver),
                 task_key="Silver",
                 timeout_seconds=0),
            Task(description="Ingestion_Gold",
                 depends_on=[TaskDependency(task_key="Silver")],
                 # Reuse the cluster by referring to the same cluster key
                 job_cluster_key="ingestion_job_cluster",
                 notebook_task=NotebookTask(notebook_path=ingestion_gold),
                 task_key="Gold",
                 timeout_seconds=0),
            Task(description="ML_Feature_Engineering",
                 depends_on=[TaskDependency(task_key="Gold")],
                 # Job cluster for ML workloads
                 job_cluster_key="ml_job_cluster",
                 notebook_task=NotebookTask(notebook_path=ml_featurisation),
                 task_key="ML_Featurisation",
                 timeout_seconds=0),
            Task(description="ML_Batch_Scoring",
                 depends_on=[TaskDependency(task_key="ML_Featurisation")],
                 # Reuse the ML job cluster
                 job_cluster_key="ml_job_cluster",
                 notebook_task=NotebookTask(notebook_path=ml_scoring),
                 task_key="ML_Scoring",
                 timeout_seconds=0)
        ],
        # Job cluster definitions that the tasks above refer to by key
        job_clusters=[
            JobCluster(
                job_cluster_key="ingestion_job_cluster",
                new_cluster=cluster_spec_using_policy
            ),
            JobCluster(
                job_cluster_key="ml_job_cluster",
                new_cluster=cluster_spec_custom
            )
        ])
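Before submitting a job like the one above, it can help to confirm that every task refers to a job cluster key that is actually defined, and to see which tasks end up sharing each cluster. The sketch below is a hypothetical helper that works on plain dictionaries mirroring the task and cluster keys of the sample, so it runs without a workspace connection. It is not part of the Databricks SDK.

```python
from typing import Any, Dict, List


def tasks_by_job_cluster(tasks: List[Dict[str, Any]],
                         job_clusters: List[Dict[str, Any]]) -> Dict[str, List[str]]:
    """Group task keys by the job cluster they reference.

    Raises ValueError if a task names a job_cluster_key that is not defined.
    """
    usage: Dict[str, List[str]] = {c["job_cluster_key"]: [] for c in job_clusters}
    for task in tasks:
        key = task.get("job_cluster_key")
        if key is None:
            continue  # task uses other compute, for example a SQL warehouse
        if key not in usage:
            raise ValueError(f"Task {task['task_key']!r} references undefined job cluster {key!r}")
        usage[key].append(task["task_key"])
    return usage


# Plain-dict mirror of the task keys and cluster keys used in the sample.
job_clusters = [{"job_cluster_key": "ingestion_job_cluster"},
                {"job_cluster_key": "ml_job_cluster"}]
tasks = [
    {"task_key": "Silver", "job_cluster_key": "ingestion_job_cluster"},
    {"task_key": "Gold", "job_cluster_key": "ingestion_job_cluster"},
    {"task_key": "ML_Featurisation", "job_cluster_key": "ml_job_cluster"},
    {"task_key": "ML_Scoring", "job_cluster_key": "ml_job_cluster"},
]

usage = tasks_by_job_cluster(tasks, job_clusters)
print(usage)
```

A cluster key that maps to an empty list is defined but never used, which usually points to a typo in a task's `job_cluster_key`.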

Considerations

While cluster reuse is generally beneficial, there are some factors to consider:

- Across jobs: A job cluster cannot be reused across different jobs.

- DLT pipeline as task: A DLT pipeline as a task in a multi task job cannot reuse a job cluster. DLT will always spin up its own job cluster.

- Cluster State: Tasks running on different clusters in the same job are fully isolated from each other. This means tasks should not make any assumption about cluster state based on prior tasks.

- Libraries: Libraries cannot be specified in a shared job cluster configuration. Instead, dependent libraries must be added in the task settings.
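To illustrate the libraries consideration, the sketch below shows a job definition as a plain Python dictionary in which each task declares its own `libraries` while the shared job cluster declares none. The package names and notebook paths are hypothetical, and the checking helper is an illustration rather than a Databricks API.

```python
from typing import Any, Dict, List

job_definition = {
    "job_clusters": [
        {
            "job_cluster_key": "ingestion_job_cluster",
            # No libraries here: the shared cluster only describes compute.
            "new_cluster": {"policy_id": "E0631F5C0D0006D6",
                            "apply_policy_default_values": True},
        }
    ],
    "tasks": [
        {
            "task_key": "Silver",
            "job_cluster_key": "ingestion_job_cluster",
            "notebook_task": {"notebook_path": "/Workspace/etl/Ingestion_Silver"},
            "libraries": [{"pypi": {"package": "requests"}}],  # hypothetical dependency
        },
        {
            "task_key": "Gold",
            "depends_on": [{"task_key": "Silver"}],
            "job_cluster_key": "ingestion_job_cluster",
            "notebook_task": {"notebook_path": "/Workspace/etl/Ingestion_Gold"},
            "libraries": [{"pypi": {"package": "pyyaml"}}],  # hypothetical dependency
        },
    ],
}


def clusters_declaring_libraries(definition: Dict[str, Any]) -> List[str]:
    """Return the keys of job clusters that wrongly carry a libraries entry."""
    return [
        cluster["job_cluster_key"]
        for cluster in definition["job_clusters"]
        if "libraries" in cluster or "libraries" in cluster.get("new_cluster", {})
    ]


misplaced = clusters_declaring_libraries(job_definition)
print(misplaced)
```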

Conclusion

Cluster reuse within Databricks Workflows represents a significant stride towards optimizing resource utilization and curtailing cloud costs. By allowing multiple tasks within a job to share a single cluster, organizations can eliminate the overhead associated with spinning up new clusters for each task, thereby streamlining their data pipelines and accelerating job execution. This efficient allocation of resources not only leads to quicker insights and decision-making but also translates into tangible cost savings, especially for jobs that run frequently or require similar resource configurations.

By understanding when and how to employ cluster reuse, taking into account factors like workload similarity, execution frequency, and task isolation, teams can maximize the benefits while maintaining the integrity and performance of their data workflows. With the right planning, cluster reuse can be a powerful lever in the pursuit of efficient and cost-effective data operations on the Databricks platform.
