MLOps Gym - Beginners Guide to MLflow

SepidehEb

New Contributor III


03-20-2024

07:18 AM

MLflow

MLflow stands out as the leading open source MLOps tool, and we strongly recommend its integration into your machine learning lifecycle. With its diverse components, MLflow significantly boosts productivity across various stages of your machine learning journey. The utilisation of MLflow is integral to many of the patterns we showcase in the MLOps Gym.

Built on top of open source (OS) MLflow, Databricks offers a managed MLflow service that focuses on enterprise reliability, security, and scalability. Among its many advantages, the managed version of MLflow natively integrates with Databricks Notebooks, making it simpler to kickstart your MLOps journey. For a detailed comparison between OS and Managed MLflow, refer to the Databricks Managed MLflow product page.

Although MLflow encompasses seven core components (Tracking, Model Registry, MLflow Deployment for LLMs, Evaluate, Prompt Engineering UI, Recipes, and Projects) as of March 2024, our focus in this guide will be on the first five. We chose these components because they are most effective in solving MLOps challenges for our customers with minimal friction. We will provide recommendations on when and how to leverage them effectively.

We have broken this guide to MLflow into two parts:

- Beginners’ guide to MLflow will cover MLflow essentials for all ML practitioners. In this article, we discuss Tracking and Model Registry components.

- Advanced MLflow will cover more nuanced topics such as MLflow Deployment for LLMs, Evaluate, and the Prompt Engineering UI.

Here are our suggested steps for getting started with MLflow. Remember that in the remainder of this article, “MLflow” refers to the Databricks-managed version of MLflow.

Use MLflow Tracking

Your entry point to the MLflow world is probably MLflow Tracking. Using this component, you can log every aspect of your machine learning experiment, such as source properties, parameters, metrics, tags, and artifacts. MLflow Tracking assists data scientists while they train and evaluate models, removing the need to manually record the parameters and results of every experiment and facilitating reproducibility.

MLflow Tracking comprises the following components:

- Tracking Server, an HTTP server hosted on the Databricks control plane that tracks runs and experiments.

- Backend Store, a SQL database hosted on the Databricks control plane that stores metadata for runs and experiments, such as run ID, start and end times, and parameters.

- Artifact Store, where MLflow stores the actual artifacts of runs, such as model weights and data files. Databricks uses DBFS as its default artifact store for MLflow.

- Tracking UI and APIs, which let you interact with MLflow Tracking through the UI or through API calls, respectively. The UI has tools for exploring metadata and artifacts and comparing performance metrics between runs.

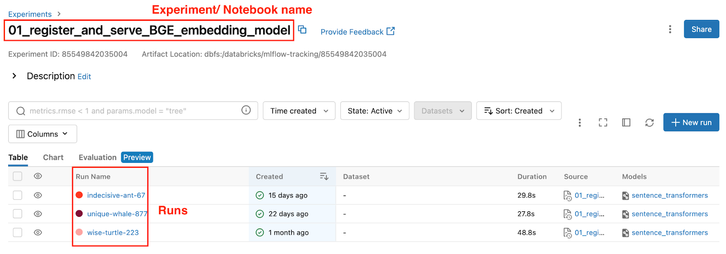

The key concepts in the MLflow Tracking realm are runs and experiments. A run is a detailed record of an individual execution of code. Each run captures the associated metadata and the resulting output, or “artifacts”. An experiment is a collection of runs related to a specific ML task, which allows for easier comparison.

For example, for a single command execution or "run" of python train.py, MLflow Tracking would record the artifacts such as the model weights, images, etc.; as well as metadata such as the metrics, parameters, and start/end times. A collection of these runs with different variations (e.g. the ML algorithm) could be grouped as an experiment which allows you to more easily compare and select the best-fitting model.

For more information, refer to the MLflow documentation.

On Databricks, matters are made even simpler. By default, a notebook is linked to an experiment with the same name, and the runs of that experiment are all bundled together.

How do you interact with MLflow Tracking on Databricks?

MLflow Tracking integrates tightly with the Databricks ecosystem. There are multiple touchpoints with MLflow Tracking on the Databricks platform, including ML Runtimes, Notebooks, and the Tracking UI (Experiments).

ML Runtime

MLflow is provided out of the box with ML Runtimes. On Standard Runtimes, you can install MLflow from PyPI.

Notebooks

MLflow is natively integrated with Databricks Notebooks. That means two things:

- You can import MLflow and call its methods using the API of your choice (Python, REST, R, or Java). The managed Tracking Server captures and logs whatever you ask it to, such as parameters, metrics, and artifacts. MLflow provides these capabilities out of the box, without you needing to set up any infrastructure. Additionally, each run preserves the version of the notebook associated with it, which makes the run reproducible.

- The MLflow Run Sidebar, located on the right-hand side of your Notebooks, lets you monitor runs directly within your notebooks. It enables you to capture a snapshot of each run, ensuring that you can revisit earlier versions of your code at any time.

Autologging

As of ML Runtime 10.3, Autologging is available on all Databricks notebooks. Autologging means you don’t need to import and call MLflow explicitly to capture your experiments. All you need to do is write your training code using one of the supported ML libraries (e.g., sklearn), and most aspects of the experiment, including metrics, parameters, model signature, artifacts, and dataset, will be logged automatically.

When you attach your notebook to an ML Runtime, Databricks Autologging calls mlflow.autolog(), which logs a predefined set of parameters, metrics, artifacts, etc. for your experiments. If you want to log any other aspect of your experiment not covered by Autologging (e.g., Shapley values), you can log it manually using the MLflow client.

One important point to note is that when creating a run using the MLflow fluent API, autologging isn’t applied automatically. You will have to call mlflow.autolog() manually within the “with” block for it to take effect.

    import mlflow

    with mlflow.start_run() as run:
        mlflow.autolog()
        ...  # your training code goes here

Tracking UI

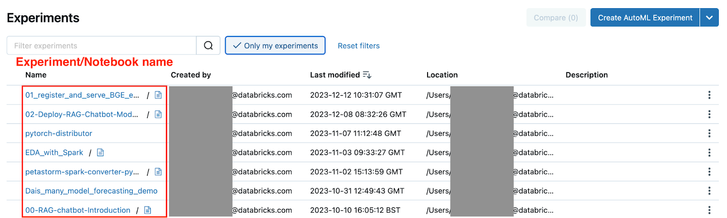

Everything you capture in your notebooks using MLflow Tracking is visible under the Experiments tab in Databricks. By default, there is a 1:1 mapping between notebooks and experiments, so in the Tracking UI you can find all of a notebook's runs under one experiment. If you click on an experiment, you can explore its runs and drill down into the details of each one.

How can I start using MLflow Tracking?

Now that you know the essentials of MLflow Tracking, open a notebook and begin writing your ML code. Autologging will automatically capture the training run metadata for you. Navigate to the Experiments tab and look for the experiment with the same name as your notebook. Analyse the run metrics, parameters, etc., embedded within that experiment.

Use MLflow Model Registry with Unity Catalog

Another critical component of MLflow is the Model Registry. It provides a centralised repository for managing and organising machine learning models. You can think of Model Registry as a one-stop shop for all the models you want to “keep” for deployment, testing, or any other purposes. Models that are “logged” with MLflow Tracking can be “registered” to the Model Registry.

What is a model flavour?

A model flavour refers to how a machine learning model is packaged and stored. When registering a model, you can register it with a “native” flavour such as sklearn or TensorFlow, or with the generic Python flavour known as “pyfunc”. A native flavour saves the model as a native object (e.g., a pickle file for the sklearn flavour). In contrast, the pyfunc flavour stores the model in a generic filesystem format defined and supported by MLflow.

If you register a model with the pyfunc flavour, you can only load and use it as a generic Python object. However, if you register a model with a native flavour, Databricks automatically creates an additional Python flavour for you, so you can load and use the model either as a native object or as a Python one.

Workspace Model Registry vs Unity Catalog Model Registry

Before the introduction of Models in Unity Catalog (UC), the default scope for the Model Registry home was limited to a workspace. This meant you could only access a model registered to your Model Registry within the boundaries of that specific workspace. If you wanted to move models from one workspace to another (e.g., to promote from a dev workspace to a prod one), you had to go through the extra trouble of using an open source tool to move objects between workspaces. Another option was to set up a remote Model Registry and share it between workspaces, which was also laborious.

Models in UC solve this problem and much more. Now, models live in UC alongside your features and raw data, making lineage tracking seamless. UC takes the concept of Model Registry outside the boundaries of a workspace and holds it at an account level. You can register your models in a schema of a catalog, where they can be accessed from different workspaces or moved to other catalogs/schemas.

One major change when moving from workspace Model Registry to UC is using “aliases” over “stages” to promote models from development to production. When working with the workspace Model Registry, you would use MLflow model stages (None, Staging, Production, Archived) to progress models from the development stage (typically None) to testing (Staging) and then to production (Production). Once done with a model, you would move it to the “Archived” stage. However, in the UC world, the concept of stages is replaced by model aliases. You have aliases such as “Challenger” and “Champion” for models to be tested and in production, respectively.

How can I start using the MLflow Model Registry?

You can use mlflow.register_model() to register any model you have logged during a run. Another option is to pass a “registered_model_name” argument when you are logging your model with mlflow.<MODEL_FLAVOR>.log_model(), and it will both log and register the model at the same time.

To use UC as your model registry, all you need to do is:

    import mlflow

    mlflow.set_registry_uri("databricks-uc")

Then use a 3-level namespace for your model names following the <CATALOG>.<SCHEMA>.<MODEL_NAME> pattern.
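As a small illustration of the naming pattern, the hypothetical helper below assembles a three-level name from its parts. The catalog, schema, and model names are made up, and the validation is a simplification for this sketch rather than the full set of UC naming rules.

```python
def uc_model_name(catalog: str, schema: str, model: str) -> str:
    """Build a <CATALOG>.<SCHEMA>.<MODEL_NAME> string (hypothetical helper)."""
    parts = [catalog, schema, model]
    for part in parts:
        # Simplifying assumption: each level is non-empty and contains no dots.
        if not part or "." in part:
            raise ValueError(f"invalid name part: {part!r}")
    return ".".join(parts)


full_name = uc_model_name("ml_dev", "churn", "churn_classifier")
print(full_name)  # ml_dev.churn.churn_classifier

# On Databricks, with the registry URI set to "databricks-uc", this name is
# what you would pass when registering, for example:
#   mlflow.register_model(model_uri, full_name)
```

Keeping the catalog as a separate argument makes it easy to target, say, a development catalog and a production catalog with the same schema and model name.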

End-to-end ML Experience with MLflow (Beginners version)

Let’s look at a step-by-step experience using MLflow from experimentation through registering a model to Model Registry.

- Create a notebook and write your ML code in it (e.g. sklearn)

- Check the Experiments tab and explore your results by navigating to the experiment with the same name as your notebook. If you have multiple runs within the same experiment, you can select and compare their different metrics in the UI.

- Decide which model is the “winner.” Then, use mlflow.register_model() to register it to the Model Registry.

- Load the model in a notebook using mlflow.<MODEL_FLAVOR>.load_model() and run inference.

Coming up next!

Next blog in this series: MLOps Gym - Unity Catalog Setup for MLOps
