The Databricks curriculum team solved this problem by creating our own Maven repo, and it's easier than it sounds. We took an S3 bucket, configured it as a public website so that files can be fetched as standard downloads, and created a "repo" folder inside that bucket. From there, all we had to do was follow the standard Maven directory convention to create a repo containing only one or two files.
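As a rough sketch of that directory convention: Maven expects the group ID's dots to become folders, followed by the artifact ID, the version, and files named `artifactId-version.ext`. The helper below only computes the object keys you would upload to the bucket. The function name `maven_repo_paths`, the coordinates `com.example:mylib:1.0.0`, and the assumption that the two files are a `.jar` plus a `.pom` are illustrative, not taken from our actual setup.

```python
from pathlib import PurePosixPath


def maven_repo_paths(group_id, artifact_id, version, repo_root="repo"):
    """Return the keys a jar and its pom would occupy in a Maven-layout repo.

    Hypothetical helper: it only builds path strings and uploads nothing.
    """
    # Dots in the group ID become directory separators.
    group_path = group_id.replace(".", "/")
    base = PurePosixPath(repo_root) / group_path / artifact_id / version
    # Files are named <artifactId>-<version>.<extension>.
    stem = f"{artifact_id}-{version}"
    return [str(base / f"{stem}.jar"), str(base / f"{stem}.pom")]


if __name__ == "__main__":
    # Placeholder coordinates, for illustration only.
    for key in maven_repo_paths("com.example", "mylib", "1.0.0"):
        print(key)
```

Uploading the jar (and, if you have one, the pom) under those keys is all the "repo" needs to contain.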

With your repo in place, you simply create your cluster and, when defining the Maven library, include the extra attribute that identifies your custom repo.
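If you script this instead of using the UI, the same attribute shows up as the `repo` field of a `maven` library entry. The sketch below builds a request body in the shape the Databricks Libraries API install endpoint accepts, as far as I know. The cluster ID, the coordinates, and the bucket URL are placeholders, and the helper names are made up for this example; it prints the JSON and does not send anything.

```python
import json


def maven_library_spec(coordinates, repo_url):
    """Describe one Maven library that should be resolved from a custom repo."""
    return {"maven": {"coordinates": coordinates, "repo": repo_url}}


def install_request_body(cluster_id, libraries):
    """Build a request body for installing libraries on an existing cluster."""
    return {"cluster_id": cluster_id, "libraries": list(libraries)}


if __name__ == "__main__":
    # All values below are placeholders, not real identifiers.
    body = install_request_body(
        "0000-000000-example0",
        [
            maven_library_spec(
                "com.example:mylib:1.0.0",
                "https://example-bucket.s3.amazonaws.com/repo",
            )
        ],
    )
    print(json.dumps(body, indent=2))
```

The `repo` value should point at the folder that holds the group directories, which in our case is the "repo" folder in the bucket.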

You can use the standard versioning scheme if you want to, or simply replace the same file every time you update it. Either way, your cluster, whether created manually or through the APIs, will install your library at startup.

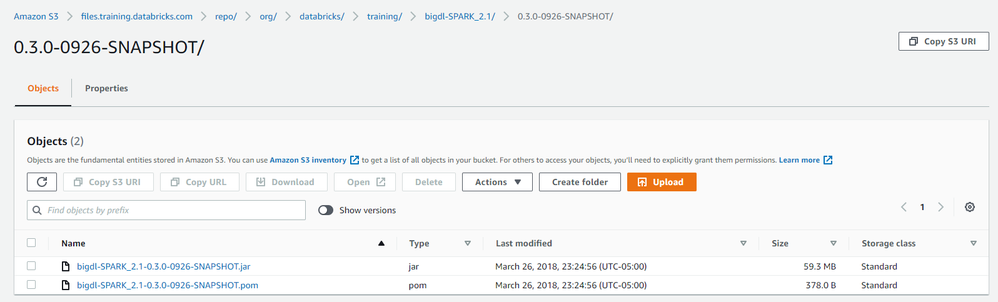

You can see here how we used S3 to create our repo and host this custom copy of BigDL.