Hello,

I am trying to run some geospatial transformations in a Delta Live Tables (DLT) pipeline, using Apache Sedona.

I put together a minimal example pipeline that demonstrates the problem.

In the first cell of my notebook, I install the apache-sedona Python package:

%pip install apache-sedona

Then I only call SedonaRegistrator.registerAll (to enable geospatial processing in SQL) and return an empty DataFrame (the return statement is never reached anyway):

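import dlt
from pyspark.sql import SparkSession
from pyspark.sql.types import StructType
from sedona.register import SedonaRegistrator

@dlt.table(comment="Test temporary table", temporary=True)
def my_temp_table():
    # Register Sedona's SQL functions; this is the call that raises.
    SedonaRegistrator.registerAll(spark)
    # Not reached: return an empty DataFrame.
    return spark.createDataFrame(data=[], schema=StructType([]))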

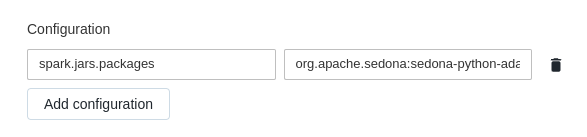

I created the DLT pipeline with all default settings, except for the Spark configuration:

Here is the uncut value of "spark.jars.packages": "org.apache.sedona:sedona-python-adapter-3.0_2.12:1.2.0-incubating,org.datasyslab:geotools-wrapper:1.1.0-25.2". This is required according to this documentation.

When I run the pipeline, I get the following error:

py4j.Py4JException: An exception was raised by the Python Proxy. Return Message: Traceback (most recent call last):
  File "/databricks/spark/python/lib/py4j-0.10.9.1-src.zip/py4j/java_gateway.py", line 2442, in _call_proxy
    return_value = getattr(self.pool[obj_id], method)(*params)
  File "/databricks/spark/python/dlt/helpers.py", line 22, in call
    res = self.func()
  File "<command--1>", line 8, in my_temp_table
  File "/local_disk0/.ephemeral_nfs/envs/pythonEnv-0ecd1771-412a-4887-9fc3-44233ebe4058/lib/python3.8/site-packages/sedona/register/geo_registrator.py", line 43, in registerAll
    cls.register(spark)
  File "/local_disk0/.ephemeral_nfs/envs/pythonEnv-0ecd1771-412a-4887-9fc3-44233ebe4058/lib/python3.8/site-packages/sedona/register/geo_registrator.py", line 48, in register
    return spark._jvm.SedonaSQLRegistrator.registerAll(spark._jsparkSession)
TypeError: 'JavaPackage' object is not callable

I can reproduce this error by running Spark on my own computer without installing the packages listed in "spark.jars.packages" above.
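
For reference, the local reproduction can be sketched roughly as follows. This is a minimal sketch and not the exact script I ran: `build_conf` and `try_register` are hypothetical helper names, `local[1]` is an assumed master setting, and whether the variant with jars succeeds depends on the local Spark and Scala versions matching the Sedona artifacts.

```python
# Maven coordinates copied from the pipeline's "spark.jars.packages" setting.
SEDONA_PACKAGES = ",".join([
    "org.apache.sedona:sedona-python-adapter-3.0_2.12:1.2.0-incubating",
    "org.datasyslab:geotools-wrapper:1.1.0-25.2",
])


def build_conf(with_sedona_jars: bool) -> dict:
    """Build the Spark settings for one variant of the experiment."""
    conf = {"spark.master": "local[1]"}  # assumed local master
    if with_sedona_jars:
        conf["spark.jars.packages"] = SEDONA_PACKAGES
    return conf


def try_register(with_sedona_jars: bool) -> None:
    """Start a local session and call SedonaRegistrator.registerAll."""
    # Imported here so build_conf can be used without pyspark installed.
    from pyspark.sql import SparkSession
    from sedona.register import SedonaRegistrator

    builder = SparkSession.builder
    for key, value in build_conf(with_sedona_jars).items():
        builder = builder.config(key, value)
    spark = builder.getOrCreate()
    # Without the jars on the classpath, this is where the TypeError appears.
    SedonaRegistrator.registerAll(spark)
```

Each variant should be run in a fresh Python process (for example `try_register(False)` in one and `try_register(True)` in another), because `getOrCreate()` reuses an existing session and "spark.jars.packages" is only read when the JVM starts.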

I suspect that this DLT pipeline is not correctly configured to install Apache Sedona, but I could not find any documentation describing how to install Sedona or other packages on a DLT pipeline.

Does anyone know whether this is possible and, if so, how?
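
One way to test that guess from inside the pipeline: as far as I understand, py4j hands back a `JavaPackage` placeholder instead of raising when a dotted name under `spark._jvm` does not resolve to a class on the JVM classpath. Below is a minimal sketch of such a check. The helper names are hypothetical, and the fully qualified class name `org.apache.sedona.sql.utils.SedonaSQLRegistrator` is my assumption for Sedona 1.2.0.

```python
def jvm_class_is_loaded(jvm_obj) -> bool:
    """Return False if py4j handed back a JavaPackage placeholder."""
    # py4j resolves unknown dotted names to JavaPackage rather than raising,
    # so the type name shows whether the class was actually found.
    return type(jvm_obj).__name__ != "JavaPackage"


def sedona_jars_visible(spark) -> bool:
    """Check whether the Sedona registrator class is on the JVM classpath."""
    # Assumed fully qualified name of the Scala registrator object.
    candidate = spark._jvm.org.apache.sedona.sql.utils.SedonaSQLRegistrator
    return jvm_class_is_loaded(candidate)
```

Calling `sedona_jars_visible(spark)` before `SedonaRegistrator.registerAll(spark)` would show whether the jars from "spark.jars.packages" ever reached the driver's classpath.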

Have a nice day.

Edit:

What I also tried so far, without success:

- using an init script -> not supported in DLT

- using a jar library -> not supported in DLT

- using a maven library -> not supported in DLT