Kafka unable to read client.keystore.jks.

11-07-2022 07:04 PM

Below is the error we received when trying to read the stream:

Caused by: kafkashaded.org.apache.kafka.common.KafkaException: Failed to load SSL keystore /dbfs/FileStore/Certs/client.keystore.jks

Caused by: java.nio.file.NoSuchFileException: /dbfs/FileStore/Certs/client.keyst

When reading a stream from Kafka, Databricks is unable to find the keystore files.

```python
# keystore_pass and truststore_pass are assumed to be defined earlier in the notebook
df = (
    spark.readStream
    .format("kafka")
    .option("kafka.bootstrap.servers", "kafka server with port")
    .option("kafka.security.protocol", "SSL")
    .option("kafka.ssl.truststore.location", "/dbfs/FileStore/Certs/client.truststore.jks")
    .option("kafka.ssl.keystore.location", "/dbfs/FileStore/Certs/client.keystore.jks")
    .option("kafka.ssl.keystore.password", keystore_pass)
    .option("kafka.ssl.truststore.password", truststore_pass)
    .option("kafka.ssl.keystore.type", "JKS")
    .option("kafka.ssl.truststore.type", "JKS")
    .option("subscribe", "sports")
    .option("startingOffsets", "earliest")
    .load()
)
```

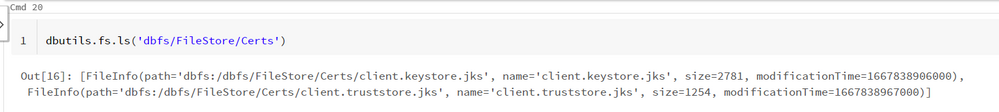

The file exists in DBFS, and I am able to read it.

11-08-2022 11:11 PM

Hi @Jayanth Goulla, does `kafka.ssl.keystore.type = PEM` work?

Reference: https://docs.databricks.com/structured-streaming/kafka.html#use-ssl

11-09-2022 11:21 AM

Hi @Debayan Mukherjee, here is the result after using PEM as the keystore type:

Caused by: kafkashaded.org.apache.kafka.common.errors.InvalidConfigurationException: SSL key store password cannot be specified with PEM format, only key password may be specified
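For reference, a minimal sketch of SSL options that satisfy the constraint stated in that error: with a PEM keystore, `kafka.ssl.keystore.password` is left out and only `kafka.ssl.key.password` is set, and only when the private key is encrypted. It assumes the PEM file holds both the client private key and its certificate chain and keeps the truststore as JKS; the function name and the `client.keystore.pem` file name are hypothetical.

```python
from typing import Dict, Optional


def pem_keystore_options(
    keystore_location: str,
    truststore_location: str,
    truststore_password: str,
    key_password: Optional[str] = None,
) -> Dict[str, str]:
    """Kafka source options for an SSL client whose keystore is a PEM file (sketch)."""
    options = {
        "kafka.security.protocol": "SSL",
        # Assumed: the PEM file contains the private key and certificate chain.
        "kafka.ssl.keystore.type": "PEM",
        "kafka.ssl.keystore.location": keystore_location,
        # No kafka.ssl.keystore.password: Kafka rejects it for PEM keystores.
        # The truststore stays JKS, so its password is still allowed.
        "kafka.ssl.truststore.type": "JKS",
        "kafka.ssl.truststore.location": truststore_location,
        "kafka.ssl.truststore.password": truststore_password,
    }
    if key_password is not None:
        # Only needed when the private key inside the PEM file is encrypted.
        options["kafka.ssl.key.password"] = key_password
    return options
```

The resulting dict could be passed to the reader with `spark.readStream.format("kafka").options(**opts)`, followed by the bootstrap-server and `subscribe` options from the original snippet.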

I followed the document linked above while trying to get this working.

Also, if I use SASL_SSL as the protocol, I get the error below:

Caused by: java.lang.IllegalArgumentException: Could not find a 'KafkaClient' entry in the JAAS configuration. System property 'java.security.auth.login.config' is not set

The files are present in DBFS.

11-11-2022 10:01 AM

You'll have to construct a JAAS file and pass it with a JVM option. Alternatively, you can pass the JAAS content as a Kafka source option, i.e. a dynamic JAAS config: https://cwiki.apache.org/confluence/display/KAFKA/KIP-85%3A+Dynamic+JAAS+configuration+for+Kafka+cli...
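A minimal sketch of the dynamic-JAAS route, assuming the broker uses the SASL PLAIN mechanism with a username and password (the thread does not say which mechanism is in use). The JAAS content goes into the `kafka.sasl.jaas.config` source option instead of a JVM-level JAAS file; the `kafkashaded.` prefix on the login module mirrors the shaded class names in the errors above, and the helper name is hypothetical.

```python
from typing import Dict


def sasl_ssl_plain_options(bootstrap_servers: str, username: str, password: str) -> Dict[str, str]:
    """Kafka source options for SASL_SSL + PLAIN using an inline JAAS config (sketch)."""
    # Assumed shaded class name on Databricks (matches the kafkashaded.* errors above).
    login_module = "kafkashaded.org.apache.kafka.common.security.plain.PlainLoginModule"
    # Keep the sketch simple: refuse values that would need JAAS quote escaping.
    for value in (username, password):
        if '"' in value:
            raise ValueError("credentials containing double quotes need escaping")
    jaas_config = f'{login_module} required username="{username}" password="{password}";'
    return {
        "kafka.bootstrap.servers": bootstrap_servers,
        "kafka.security.protocol": "SASL_SSL",
        "kafka.sasl.mechanism": "PLAIN",
        "kafka.sasl.jaas.config": jaas_config,
    }
```

In a notebook this would be used as `spark.readStream.format("kafka").options(**opts).option("subscribe", "sports").load()`; in practice the credentials would come from a secret store rather than literals.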

11-15-2022 06:09 AM

Do I need JAAS even though I already have certificates for the SSL connection?

I only want to establish an SSL connection, not SASL.

11-09-2022 04:27 AM

Hi @Jayanth Goulla, we haven't heard from you since the last response from @Debayan Mukherjee, and I was checking back to see whether his suggestions helped you.

Otherwise, if you have found a solution, please share it with the community, as it may help others.

Also, please don't forget to click the "Select As Best" button whenever the information provided helps resolve your question.

11-16-2022 01:46 AM

Hi @Debayan Mukherjee, this worked after using the absolute path

/dbfs/dbfs/FileStore/Certs/client.truststore.jks instead of just dbfs/FileStore/Certs/client.truststore.jks.

However, I need this to work with an ADLS Gen2 path.

09-18-2023 09:15 AM

@Jayanth746, did you have any luck with this eventually? I'm hitting the same issue: it appears that Spark isn't able to read from ADLS directly, but the docs are vague as to whether it should be possible. It looks like I will probably have to copy the files to a local path first.

09-18-2023 09:32 AM

Hi @mwoods, I was unable to refer to the ADLS path directly.

This is what I did to get it working:

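```scala
// adls_path and operator are defined earlier in the notebook
val keystore_location = adls_path + "/" + operator + "/certs/client.keystore.jks"
val dbfs_ks_location = "dbfs:/FileStore/" + operator + "/Certs/client.keystore.jks"

// Copy the keystore from ADLS to DBFS
dbutils.fs.cp(keystore_location, dbfs_ks_location)

// Then, in the readStream chain, point Kafka at the local path
// ("dbfs:/FileStore/..." becomes "/dbfs/FileStore/...")
.option("kafka.ssl.keystore.location", "/" + dbfs_ks_location.replace(":", ""))
```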
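The same copy-then-point workaround as a Python sketch, assuming a Databricks notebook where `dbutils` is available. The one design change from the Scala version is that only the `dbfs:` scheme is rewritten, rather than removing every colon in the string; `stage_keystore` and `dbfs_uri_to_fuse_path` are hypothetical helper names.

```python
def dbfs_uri_to_fuse_path(uri: str) -> str:
    """Convert a dbfs:/ URI into the /dbfs/... local (FUSE) path Kafka can open."""
    prefix = "dbfs:/"
    if not uri.startswith(prefix):
        raise ValueError(f"expected a dbfs:/ URI, got {uri!r}")
    return "/dbfs/" + uri[len(prefix):].lstrip("/")


def stage_keystore(dbutils, adls_path: str, operator: str) -> str:
    """Copy client.keystore.jks from ADLS to DBFS and return its local path (sketch)."""
    source = f"{adls_path}/{operator}/certs/client.keystore.jks"
    target = f"dbfs:/FileStore/{operator}/Certs/client.keystore.jks"
    # dbutils.fs.cp copies between any filesystems the cluster can access.
    dbutils.fs.cp(source, target)
    return dbfs_uri_to_fuse_path(target)
```

Usage would be `ks_path = stage_keystore(dbutils, adls_path, operator)` followed by `.option("kafka.ssl.keystore.location", ks_path)` in the reader chain; the truststore can be staged the same way.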

09-22-2023 07:24 AM

@Jayanth746, FYI: as of today, reading the keystore/truststore directly from abfss paths is working for me, so it may be worth a retry on your end.

I'm not sure whether it was fixed on the Databricks side or was down to a change in my setup. If it still doesn't work for you, and assuming you access the storage through an external location, double-check that the principal/grant mapping there is correct.

09-22-2023 12:13 PM

OK, scrub that: the problem in my case was that I was using Databricks Runtime 14.0, which appears to have a bug affecting abfss paths here. Switching back to 13.3 LTS resolved it for me. So if you find that abfss paths in kafka.ssl.keystore.location and kafka.ssl.truststore.location are failing, try switching back to 13.3 LTS.
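A small sketch for building the SSL options from a single certificate directory, so the same code can point at either an `abfss://` location (reported above as working on 13.3 LTS) or a `/dbfs/...` copy. The helper name and the example container/account in the usage line are hypothetical; the file names follow the ones used in this thread.

```python
from typing import Dict


def ssl_options_from_dir(cert_dir: str, keystore_password: str, truststore_password: str) -> Dict[str, str]:
    """Kafka SSL options pointing at JKS files in cert_dir (abfss:// or /dbfs/...)."""
    base = cert_dir.rstrip("/")
    return {
        "kafka.security.protocol": "SSL",
        "kafka.ssl.keystore.type": "JKS",
        "kafka.ssl.keystore.location": f"{base}/client.keystore.jks",
        "kafka.ssl.keystore.password": keystore_password,
        "kafka.ssl.truststore.type": "JKS",
        "kafka.ssl.truststore.location": f"{base}/client.truststore.jks",
        "kafka.ssl.truststore.password": truststore_password,
    }
```

For example, `ssl_options_from_dir("abfss://certs@myaccount.dfs.core.windows.net/kafka", keystore_pass, truststore_pass)` and then `.options(**opts)` on the Kafka reader; switching to a staged copy only means passing a `/dbfs/...` directory instead.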
