Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Data Engineering

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Data Engineering

- slow Fetching results by client in databricks SQL ...

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-06-2023 03:45 AM

I'm using `databricks-sql-connector` in python3.8 to connect to an Azure SQL Wharehouse inside an Azure Machine Learning Compute Instance.

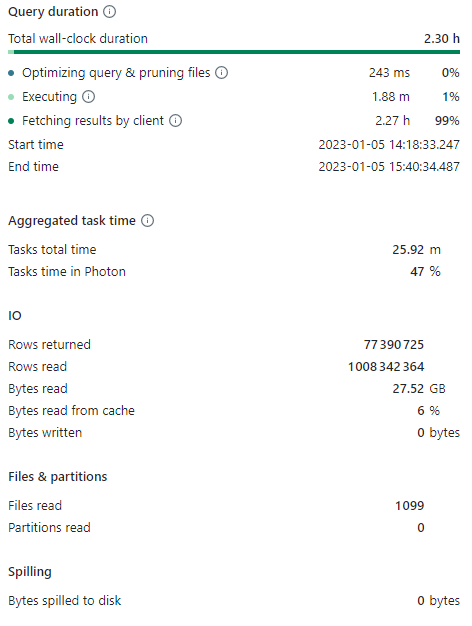

I have this large result query, looking at the `query history` I check the time spent on doing the query, and sending the data to the client. The result is 27Gb big. It took over 2 hours to get this data on my Azure Compute Instance.

Here you can see it actually took 1.88m to "make" the data, and 2.27 hours to send the data.

When I redo this query but inside SQL editor in databricks (and removing the 1000 limit), the `Fetching results by client` is way faster. Because it's in local I guess, which is normal.

So I am assuming that the network is in cause here. But since Databricks and my compute instance are in the same subnet/network, I don't understand why the download is so slow.

I have an other hypothesis, it's that the python code freeze, because after running for an hour, using `free -m` in linux shows me that the data is in memory, but when I do CTRL+C on the python code, it wont stop the process and nothing happens. I have to do CTRL+Z to stop the process, but this creates memory leak because checker back `free -m` the memory hasn't decresed.

Any idea if databricks-sql-connector has issues when fetching "large" results?

Labels:

- Labels:

-

AML

-

Azure

-

AzureSQLWarehouse

-

SQL

-

Sql Wharehouse

1 ACCEPTED SOLUTION

Accepted Solutions

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-16-2023 06:48 AM

So I made some few tests. Since you said that the Databricks SQL driver wasn't made to retrieve that amount of data. I went on Spark.

I fired up a small spark cluster, the query was as fast as on SQL Warehouse, then I did a df.write.parquet("/my_path/") This took 10 minutes (2GiB of parquet files)

Then I used Azure Storage API to download all parquet files from the folder on Azure Storage. And load it with Pandas.

The original techno I was using was Impala and did this in 30 minutes. SQL Warehouse is 4 hours. With Spark + some spark.write + file download to local/VM/pod + load to pandas is 20 minutes.

4 REPLIES 4

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-06-2023 03:54 AM

Are you using fetchall?

"Returns all (or all remaining) rows of the query as a Python list of Row objects."

I bet that 30GB list will be killer anyway.

Please use cursor.fetchmany(10000) and append in chunks to your destination (dataframe or what you are using). Additionally, in that approach, you can monitor the progress of your code.

(for the test, just print the number and time needed for each chunk)

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-06-2023 08:30 AM

Yes I was using fetchall()

Here is what I get when I use fetchmany(100000) (not 10.000, but 100.000) else there was too much printing:

<databricks.sql.client.Cursor object at 0x7fddfd233be0>

Fetching chunk of size 100000, 1

1.2840301990509033

Fetching chunk of size 100000, 2

1.7594795227050781

Fetching chunk of size 100000, 3

1.4387767314910889

Fetching chunk of size 100000, 4

1.9465265274047852

Fetching chunk of size 100000, 5

1.284682273864746

Fetching chunk of size 100000, 6

1.8754642009735107

Fetching chunk of size 100000, 7So it takes around 1.5-2 secondes per chunk of size 100.000. From what I understand in the documentation, the chunk size is the number of rows fetched.

Since I have 1008342364 rows, it will take me 10084 fetchmany(100000)

10084 * 1.5 (seconds per fetchmany) = 15125 total seconds to get all the data. so approx 4 hours.

If a do a fetchmany(1000000), i get one fetch of 14 seconds.

1008342364 rows / 1000000 = 1009 fetches

10084 * 14 = 14126 secondes for all fetchs => 3.9 hours

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-16-2023 06:48 AM

So I made some few tests. Since you said that the Databricks SQL driver wasn't made to retrieve that amount of data. I went on Spark.

I fired up a small spark cluster, the query was as fast as on SQL Warehouse, then I did a df.write.parquet("/my_path/") This took 10 minutes (2GiB of parquet files)

Then I used Azure Storage API to download all parquet files from the folder on Azure Storage. And load it with Pandas.

The original techno I was using was Impala and did this in 30 minutes. SQL Warehouse is 4 hours. With Spark + some spark.write + file download to local/VM/pod + load to pandas is 20 minutes.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-16-2023 07:34 AM

Hi @Antoine Tavernier, Do you still need help or is your query resolved?

Announcements

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- Unity Catalog - Created UC and linked it to my DEV storage account for the entire org in Administration & Architecture

- Grant Unity Catalog Access without Workspace Access in Warehousing & Analytics

- Databricks REST API to fetch mount points in Administration & Architecture

- connect timed out error - Connecting to SQL Server from Databricks in Data Engineering

- Can I see queries sent to All Purpose Compute from Power BI? in Data Engineering