
10-27-2021 08:14 AM

I am new to Spark and working through some practice exercises. I have uploaded a zip file to the DBFS /FileStore/tables directory and am trying to run Python code to unzip it. The code is:

```python
from zipfile import *

with ZipFile("/FileStore/tables/flight_data.zip", "r") as zipObj:
    zipObj.extractall()
```

It throws an error:

```
FileNotFoundError: [Errno 2] No such file or directory: '/FileStore/tables/flight_data.zip'
```

When I check manually, and also with `dbutils.fs.ls("/FileStore/tables/")`, it returns:

```
Out[13]: [FileInfo(path='dbfs:/FileStore/tables/flight_data.zip', name='flight_data.zip', size=59082358)]
```

Can someone please review and advise? I am using Community Edition to run this on a cluster with the following configuration:

Databricks Runtime Version 8.3 (includes Apache Spark 3.1.1, Scala 2.12)

Labels: DBFS, File, Filestore, Python Code, Tables Directory, Zip


17 replies


10-27-2021 09:40 AM

Hi @Data_Engineer_241188! My name is Kaniz, and I'm the technical moderator here. Great to meet you, and thanks for your question! Let's see if your peers in the community have an answer first. Otherwise, I will get back to you soon. Thanks.


10-27-2021 10:16 AM

The file is on the DBFS mount, so in most scenarios you should prefix paths with /dbfs (or with dbfs:/ in Databricks-native functions; many of those, such as dbutils, don't even need the prefix because they only handle DBFS paths). So please try:

```python
from zipfile import *

with ZipFile("/dbfs/FileStore/tables/flight_data.zip", "r") as zipObj:
    zipObj.extractall()
```
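The /dbfs suggestion boils down to translating a DBFS URI (the `dbfs:/...` form that `dbutils.fs.ls` prints) into a local path that plain Python file APIs such as `zipfile` can open. A minimal sketch, assuming the /dbfs mount is actually present on the driver (the rest of this thread shows it is not on this Community Edition setup). The helper names and the target directory are hypothetical. Note that `extractall()` with no argument extracts into the driver's current working directory, so the sketch passes an explicit target.

```python
import zipfile


def dbfs_uri_to_fuse_path(uri: str) -> str:
    """Map 'dbfs:/FileStore/x.zip' (or bare '/FileStore/x.zip') to '/dbfs/FileStore/x.zip'.

    Hypothetical helper; paths already under '/dbfs/' are returned unchanged.
    """
    if uri.startswith("dbfs:/"):
        return "/dbfs/" + uri[len("dbfs:/"):].lstrip("/")
    if uri.startswith("/dbfs/"):
        return uri
    # Treat anything else as a bare DBFS path such as '/FileStore/tables/...'
    return "/dbfs/" + uri.lstrip("/")


def unzip_from_dbfs(uri: str, target_dir: str) -> list:
    """Extract a DBFS-hosted zip via the /dbfs mount into target_dir (hypothetical helper)."""
    local_zip = dbfs_uri_to_fuse_path(uri)
    with zipfile.ZipFile(local_zip, "r") as zip_obj:
        # Explicit target instead of the current working directory
        zip_obj.extractall(target_dir)
        return zip_obj.namelist()
```

Usage, on a cluster where /dbfs is mounted: `unzip_from_dbfs("dbfs:/FileStore/tables/flight_data.zip", "/dbfs/FileStore/tables/flight_data")`.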


10-27-2021 10:29 AM

@Hubert Dudek

Hello Sir,

I tried it the way you suggested as well. However, no luck! It still gives the same error.

Thank you,

Goutam


10-27-2021 10:40 AM

Are you perhaps using a high-concurrency cluster or a limited trial version? Trial/free tiers can cause problems when reading files with non-native libraries.

Also try exploring the filesystem with shell commands: put the %sh magic on the first line of a notebook cell to check whether there is a /dbfs folder:

```
%sh
ls /
```
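Since the %sh magic turned out not to be recognized here, the same check can be sketched from a regular Python cell using only the standard library. This is an illustrative equivalent, not something suggested in the thread; the function names are hypothetical.

```python
import os


def list_root(path: str = "/") -> list:
    """Python stand-in for `ls /` when the %sh magic is unavailable."""
    return sorted(os.listdir(path))


def fuse_mount_available(mount_point: str = "/dbfs") -> bool:
    """True if the DBFS mount point is visible to local file APIs on this machine."""
    return os.path.isdir(mount_point)


if __name__ == "__main__":
    print("Root entries:", list_root())
    print("/dbfs mounted:", fuse_mount_available())
```

If `fuse_mount_available()` prints `False` on the driver, any `/dbfs/...` path passed to `zipfile` will raise the same `FileNotFoundError`.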


10-27-2021 10:48 AM

@Hubert Dudek

Hi Sir,

I'm working in Community Edition. I tried with magic commands as well. No luck! It says the command is not recognized.


10-28-2021 01:50 AM

So it seems that in Community Edition you cannot access the filesystem directly. You only have access to DBFS storage, so you need to upload the object there uncompressed. You need to prefix paths with dbfs:/ everywhere; if that does not work for a given function, the function will not work at all. As a last chance, you can try this:

```python
from zipfile import *

with ZipFile("dbfs:/FileStore/tables/flight_data.zip", "r") as zipObj:
    zipObj.extractall()
```
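Python's `zipfile` does not understand the `dbfs:/` scheme; it treats the string as an ordinary local filename, so this variant fails the same way. One workaround that avoids the /dbfs mount entirely is to let `dbutils.fs.cp` move the archive to the driver's local disk (the `file:` scheme), unzip it there, and copy the extracted files back to DBFS. This is a sketch, not verified on Community Edition here: it assumes `dbutils.fs.cp(from, to, recurse=...)` accepts `file:` paths on your cluster. The function name and the scratch directory are hypothetical.

```python
import os
import zipfile


def unzip_via_driver_disk(fs, src_uri, dest_uri, local_dir="/tmp/unzip_work"):
    """Unzip a DBFS-hosted archive without using the /dbfs mount (hypothetical helper).

    `fs` is expected to behave like `dbutils.fs`, i.e. provide cp(from, to, recurse=...).
    """
    os.makedirs(local_dir, exist_ok=True)
    local_zip = os.path.join(local_dir, os.path.basename(src_uri))
    extract_dir = os.path.join(local_dir, "extracted")

    # 1. DBFS -> driver local disk ("file:" addresses the driver's own filesystem)
    fs.cp(src_uri, "file:" + local_zip)

    # 2. Plain zipfile works on the local copy
    with zipfile.ZipFile(local_zip, "r") as zip_obj:
        zip_obj.extractall(extract_dir)
        names = zip_obj.namelist()

    # 3. Driver local disk -> DBFS, copying the whole extracted tree
    fs.cp("file:" + extract_dir, dest_uri, recurse=True)
    return names
```

Usage in a notebook: `unzip_via_driver_disk(dbutils.fs, "dbfs:/FileStore/tables/flight_data.zip", "dbfs:/FileStore/tables/flight_data")`. Passing `fs` in as a parameter keeps the function testable outside Databricks; the driver's local disk is scratch space, so only the copy on DBFS should be relied on afterwards.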


10-28-2021 03:20 AM

@Hubert Dudek

Hi Sir, no luck this way either. :(

Thank you for all the great suggestions though.😊


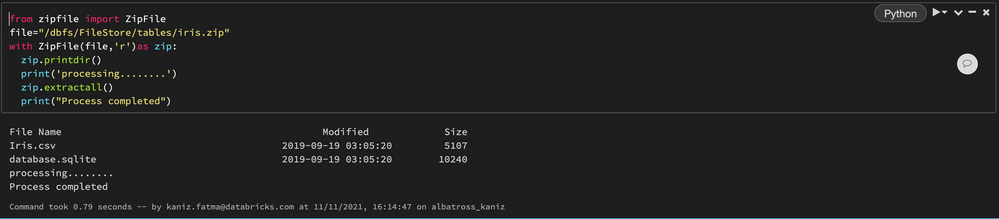

11-11-2021 02:47 AM


11-18-2021 09:55 AM

Hi @Goutam Pal,

Are you still having this issue? I think @Kaniz Fatma's example will work well to solve it.


11-18-2021 07:10 PM

@Jose Gonzalez @Kaniz Fatma: The issue still persists. Please find attached a screenshot of the error.

Thanks,

Goutam Pal

Anonymous



11-19-2021 08:47 AM

@Goutam Pal - Thank you for letting us know. I apologize for the inconvenience.


11-20-2021 10:20 AM (accepted solution)

Hi @Goutam Pal, can you please tell me the Databricks Runtime version on which you're trying this?

On Community Edition, the /dbfs mount is disabled in DBR 7 and above.

Please try the same on any DBR version below 7. It'll work.
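The runtime version can also be read on the cluster itself rather than from the cluster UI. A small sketch that encodes the claim in this reply (on Community Edition, /dbfs is only available below DBR 7). It assumes Databricks exposes the version through the `DATABRICKS_RUNTIME_VERSION` environment variable; that variable is an assumption, not something stated in this thread, and the function name is hypothetical.

```python
import os
import re


def fuse_expected_on_community_edition(runtime_version: str) -> bool:
    """Encode this reply's claim: on Community Edition, /dbfs is disabled from DBR 7 onward."""
    match = re.match(r"\s*(\d+)", runtime_version)
    if match is None:
        raise ValueError(f"Unrecognized runtime version: {runtime_version!r}")
    return int(match.group(1)) < 7


# Assumption: Databricks sets this environment variable on cluster nodes.
version = os.environ.get("DATABRICKS_RUNTIME_VERSION")
if version:
    print(f"DBR {version}: /dbfs expected on Community Edition -> "
          f"{fuse_expected_on_community_edition(version)}")
else:
    print("DATABRICKS_RUNTIME_VERSION is not set; probably not on a Databricks cluster.")
```

For the 8.3 runtime mentioned in the question, `fuse_expected_on_community_edition("8.3")` returns `False`, which matches the errors reported in this thread.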

Thanks.


12-03-2021 10:51 AM

Hello Kaniz, I will try it and get back to you.


12-06-2021 06:03 AM

Hi @Goutam Pal, sure. Please let us know if it worked for you.
