Databricks Job: Package Name and EntryPoint parame...

03-01-2022 08:53 AM

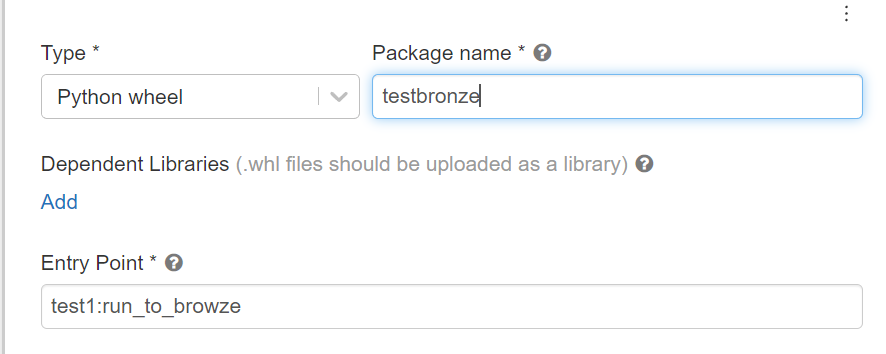

I have created a Python wheel file with a simple file structure and uploaded it to the cluster library. I was able to run the package in a notebook, but when I create a Job using the Python wheel task type, provide the package name, and run the task, it fails with a wheel name not found or package not found error.

Can you help us with the exact parameters that need to be passed as the Package Name and Entry Point? I followed the docs but with no success.

File structure:

- `PythonWheel`
  - `src`
    - `__init__.py`
    - `test1.py`
    - `test2.py`
  - `setup.py`

setup.py:

    from setuptools import find_packages, setup

    setup(
        name="testbronze",
        packages=find_packages(),
        setup_requires=["wheel"],
        description="demo",
        version="0.0.1",
        include_package_data=True,
    )

Job PackageName:

testbronze (Note: also tried with "src")

Labels: Databricks Job, Package, Python Wheel, Wheel Name

1 ACCEPTED SOLUTION

03-03-2022 11:21 AM

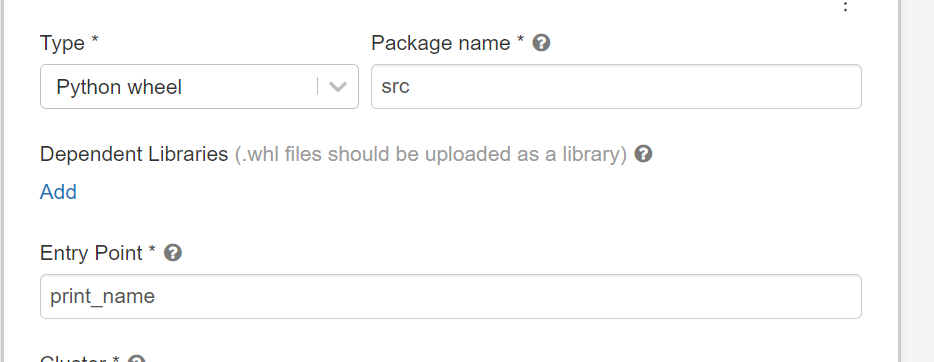

I have resolved the issue.

The problem was in setup.py.

In the name parameter, I needed to provide the proper structure of my package.

Note: If your package structure is src.bronze, you need to name it exactly that.

    setup(
        name="src",
        packages=['.src'],
        description="demo",
        version="0.1",
        author="Sandeep",
        long_description=long_description,
        long_description_content_type="text/markdown",
        license='LICENSE.txt',
        include_package_data=True,
    )

And from the Databricks UI:

PackageName: src

Entry_Point : print_name (Function)
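
For readers wondering why matching the names helps: as far as I understand the Jobs API description of the Python wheel task, the entry point is first looked up by name in the installed package's metadata, and if it is not found there, the function is called directly as `<package_name>.<entry_point>()`. The sketch below imitates that lookup order in plain Python. It is only an illustration under that assumption, not Databricks' actual implementation, and `resolve_entry_point` is a hypothetical helper name.

```python
import importlib
from importlib import metadata


def resolve_entry_point(package_name: str, entry_point: str):
    """Return the callable a wheel task would run (illustrative only)."""
    # Step 1: look for a named entry point in the installed
    # distribution's metadata (what entry_points=... in setup() records).
    try:
        dist = metadata.distribution(package_name)
    except metadata.PackageNotFoundError:
        dist = None
    if dist is not None:
        for ep in dist.entry_points:
            if ep.name == entry_point:
                return ep.load()

    # Step 2: fall back to importing the package by the same name and
    # taking the function from it, i.e. package_name.entry_point.
    module = importlib.import_module(package_name)
    return getattr(module, entry_point)


if __name__ == "__main__":
    # Demo with a standard-library module standing in for the wheel's package.
    func = resolve_entry_point("json", "dumps")
    print(func({"ok": True}))
```

Under this reading, the fallback can only succeed when the Package Name is also an importable package and the entry point function is reachable from it (for example, defined in or imported by `src/__init__.py`). That would explain why `testbronze` failed while `src` worked.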

5 REPLIES

03-01-2022 11:27 AM

Advancing Analytics explains wheels in this highly recommended video: https://www.youtube.com/watch?v=nN-NPnfJLNY

The video also explains how to use files in Repos, which is a better solution than a wheel package (a wheel has to be installed on the server every time, whereas files in Repos can just stay in your git repo).

03-01-2022 02:11 PM

Thank you for your reply, @Hubert Dudek.

But based on my use case, I cannot use a notebook to run the Job.

We need to orchestrate directly through a task using the Python wheel option.

I found many docs that describe running through a notebook, but none that cover triggering a task directly with a wheel file.
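
For anyone landing here with the same need, here is a minimal sketch of what a job definition with a Python wheel task might look like as a Jobs API 2.1 payload, built as a Python dict. The `package_name` and `entry_point` values are the ones from the accepted solution in this thread. The job name, task key, cluster ID, and wheel path are placeholders I made up, and the field names should be checked against the current Jobs API docs.

```python
import json

# Placeholder values: task_key, cluster id, job name, and wheel path are
# hypothetical and must be replaced with your own.
wheel_task = {
    "task_key": "run_wheel",
    "existing_cluster_id": "<cluster-id>",
    "python_wheel_task": {
        "package_name": "src",        # Package Name field in the UI
        "entry_point": "print_name",  # Entry Point field in the UI
        "parameters": [],             # optional positional arguments
    },
    # The wheel itself is attached to the task as a library.
    "libraries": [{"whl": "dbfs:/path/to/your_wheel.whl"}],
}

job_settings = {"name": "wheel-demo", "tasks": [wheel_task]}

# Serialize to the JSON body that would be sent when creating the job.
payload = json.dumps(job_settings, indent=2)
print(payload)
```

The same two fields appear in the Jobs UI as Package Name and Entry Point, so no notebook is involved in running the task.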

Anonymous

03-06-2022 02:13 PM

@Sandeep Toopran - Thank you for letting us know how you solved the problem!

03-06-2024 09:00 AM

Here you can see a complete template project with the (new!) Databricks Asset Bundles tool and a Python wheel task. Please follow the instructions for deployment.

Project Template for a CI/CD Pipeline with a PySpark/Databricks - andre-salvati/databricks-template
