# Databricks notebook source

.libPaths()

# COMMAND ----------

dir("/databricks/spark/R/lib")

# COMMAND ----------

## Add current working directory to library paths

.libPaths(c(getwd(), .libPaths()))

# COMMAND ----------

## Install the latest versions from CRAN into the first library path (the working directory added above)

install.packages(c('arrow', 'tidyverse', 'aws.s3', 'sparklyr', 'cluster', 'sqldf', 'lubridate', 'ChannelAttribution'), repos = "http://cran.us.r-project.org")

# COMMAND ----------

dir("/tmp/Rserv/conn970")

# COMMAND ----------

## Copy from the driver's working directory to the R site library

system("cp -R /tmp/Rserv/conn970 /usr/lib/R/site-library")

# COMMAND ----------

dir("/usr/lib/R/site-library")

# COMMAND ----------

## Copy from driver to DBFS

system("cp -R /tmp/Rserv/conn970 /dbfs/r-libraries")

# COMMAND ----------

dir("/dbfs/r-libraries")

# COMMAND ----------

# Add the DBFS library directory to libPaths

.libPaths("/dbfs/r-libraries")

# COMMAND ----------

# Check that the dbfs libraries are in libPath

.libPaths()

About 6 weeks ago (early April 2022), I tested a workflow to confirm that I could trigger jobs on Databricks remotely from Airflow, and it was successful.

As part of the process, the workflow starts a pre-built compute cluster and then loads various R libraries from DBFS onto it. One of the packages is 'arrow'. All the other packages load without issue, but this one fails to load and causes my workflow to crash.

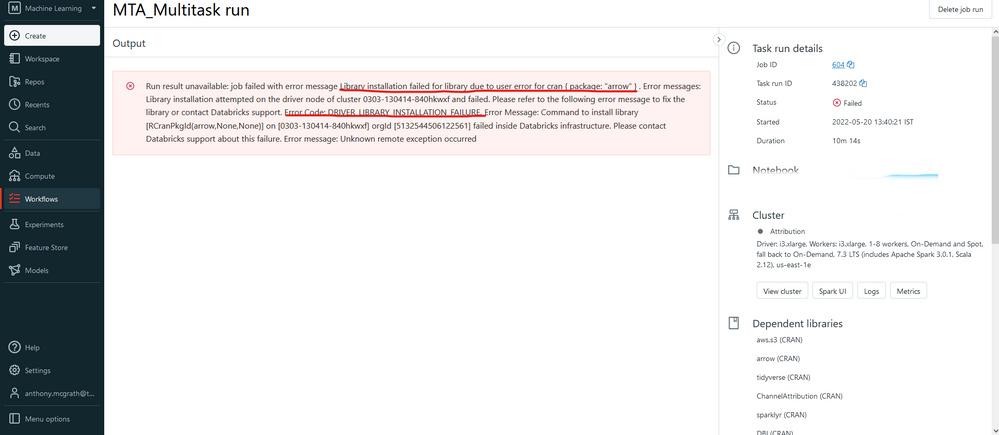

When I look into the workflow, I get the following error (see image): 'DRIVER_LIBRARY_INSTALLATION_FAILURE. Error Message: Command to install library [RCranPkgId(arrow,None,None)] on [0303-130414-840hkwxf] orgId [5132544506122561] failed inside Databricks infrastructure'. I have also triggered the workflow directly inside Databricks and still get the same problem, so the cause is clearly not related to Airflow.

I tried deleting the arrow package from DBFS to see if I could run the test without it, but every time I delete it, it returns when I retry the workflow.

I then checked CRAN to see whether arrow had been updated recently. It had been, on 2022-05-09, so I deleted everything relating to it from DBFS and loaded an older version instead. This didn't work either; see the attached images.
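
For reference, pinning an older source release can be sketched roughly as below. This is a minimal sketch, not the exact commands I ran: `cran_archive_url` and `install_pinned` are hypothetical helper names, the version number in the example call is purely illustrative, and the URL layout assumes CRAN's usual `src/contrib/Archive` structure for superseded releases.

```r
# Build the CRAN archive URL for a superseded source release of a package.
# Assumption: the release lives under src/contrib/Archive/<pkg>/.
cran_archive_url <- function(pkg, version) {
  sprintf("https://cran.r-project.org/src/contrib/Archive/%s/%s_%s.tar.gz",
          pkg, pkg, version)
}

# Hypothetical helper: install one pinned source version into a library path.
# With repos = NULL, dependencies are NOT resolved automatically, so they
# must already be available in the library paths.
install_pinned <- function(pkg, version, lib = "/dbfs/r-libraries") {
  install.packages(cran_archive_url(pkg, version),
                   repos = NULL, type = "source", lib = lib)
}

# Example call (illustrative version number only):
# install_pinned("arrow", "7.0.0")
```

Installing from source this way compiles the package on the driver, which can take a long time for a package with native code.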

I have also attached the script I'm using in R to load the packages to DBFS. I think it is sound, as every other package loads properly, but it may help in understanding what I am doing or why the error occurs.
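
To narrow down which packages actually load from the DBFS library, a check along these lines may help. It is only a sketch: `check_packages` is a hypothetical helper, and the default path assumes the `/dbfs/r-libraries` directory used in the script.

```r
# Hypothetical helper: report, per package, whether its namespace can be
# loaded when searching the given library path.
# Note: a package whose namespace is already loaded in the session reports
# TRUE regardless of `lib`.
check_packages <- function(pkgs, lib = "/dbfs/r-libraries") {
  vapply(pkgs, function(pkg) {
    tryCatch(
      requireNamespace(pkg, lib.loc = lib, quietly = TRUE),
      error = function(e) FALSE
    )
  }, logical(1))
}

# Example call with the packages from the script:
# check_packages(c("arrow", "tidyverse", "aws.s3", "sparklyr",
#                  "cluster", "sqldf", "lubridate", "ChannelAttribution"))
```

The result is a named logical vector, so any package that installs but cannot be loaded shows up as `FALSE` next to its name.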

What I'd like to know is:

- Do you see anything that I might be doing incorrectly inside my attached libraries script?

- Is there an issue with loading the arrow package and, if so, do you have a workaround that can prevent the failure?

- Why does DBFS continue to re-install arrow despite my removal of it from the directory?

- Can I permanently remove the arrow package from DBFS without it returning every time I trigger the workflow?
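
For clarity, removing the package from the DBFS library from within R can be sketched as follows. `remove_if_installed` is a hypothetical helper, not part of my script. Note that this only touches the files in the given library directory; if arrow is also registered as a library on the cluster itself (which the `RCranPkgId(arrow,None,None)` in the error might suggest, though that is an assumption on my part), this alone would not stop it from being installed again.

```r
# Hypothetical helper: remove a package from one library directory,
# but only if it is actually installed there.
# Returns TRUE if a removal was attempted, FALSE if nothing was found.
remove_if_installed <- function(pkg, lib = "/dbfs/r-libraries") {
  installed <- rownames(installed.packages(lib.loc = lib))
  if (pkg %in% installed) {
    remove.packages(pkg, lib = lib)
    TRUE
  } else {
    FALSE
  }
}

# Example call:
# remove_if_installed("arrow")
```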

Many thanks in advance for any help you guys can offer.