Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Machine Learning

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Machine Learning

- Feature table: merge very slow

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Feature table: merge very slow

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-05-2022 10:09 PM

Hi All,

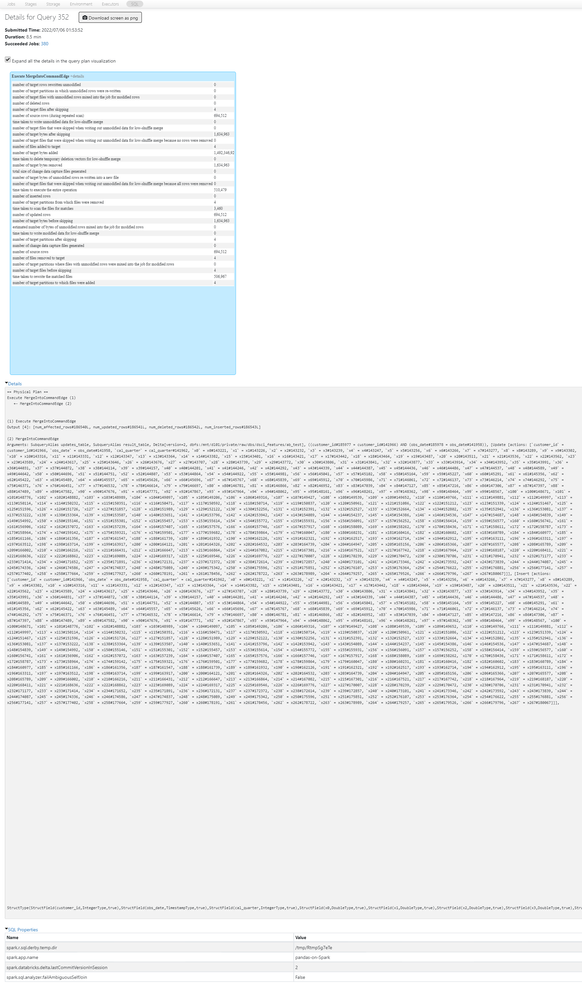

We're just started to look at the feature store capabilities of Databricks. Our first attempt to create a feature table has resulted in very slow write. To avoid the time incurred by the feature functions I generated a dataframe with same key's but the feature values where generated from rand(). It is <700K rows and ~280 feature columns. It doesn't seem to matter whether we use merge mode or overwrite, it is very slow (8.5mins on standalone 4 core cluster). I've attached the SQL detail from Sparq UI. It is taking on 3.5s to scan the files for matches and nearly 8.5mins to rewrite the matched files. While it appears to 1.5GB of output that seems an incredibly long time to rewrite the changes. I've tried with and without partitioning, repartitioning. Can anyone offer some insights or suggestions to improve the performance of the feature/delta table writes?

Regards,

Ashley

Labels:

- Labels:

-

Df

-

Feature

-

Feature Table

-

Merge Into

-

Performance

-

SQL

5 REPLIES 5

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-06-2022 05:50 AM

Do you merge on 280 columns? Cannot it be just by id only to simplify?

I would merge per id and optimize and zorder if possible by id before the merge.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-06-2022 04:58 PM

I'm sure the merge is hinged on the primary key declared when the feature table is created. For this experiment I wrote the feature table first with only primary keys and then later added the features (~280), joined to the original primary key only dataframe, and wrote that using:

fs.write_table(

name='dsci_features.ab_test',

df=to_write_df,

mode='merge'

)

The primary key are in the order they were originally written, they're also in order (customer id and observation date).

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-06-2022 05:18 PM

Here's how the table was created:

fs.create_table(

name='dsci_features.ab_test',

primary_keys=['customer_id', 'obs_date'],

# If df is provided, this data will be saved in a Delta table

#df=feature_df,

# If schema is provided, no data will be saved yet

schema=base_df.schema,

#partition_columns=['cal_quarter'],

description='AB Test'

)

primary keys written:

fs.write_table(

name='dsci_features.ab_test',

df=base_df,

mode='merge'

)

features written:

fs.write_table(

name='dsci_features.ab_test',

df=base_df,

mode='merge'

)

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-06-2022 05:22 PM

If I just insert the features into the newly created feature table, without priming the table with primary keys, effectively an append, it takes around 5mins. The insert of primary keys in the experiment was to force a merge. 5 minutes for the insert only path seems a bit excessive as well.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

08-31-2022 11:43 PM

Hi @Ashley Betts

Hope all is well! Just wanted to check in if you were able to resolve your issue and would you be happy to share the solution or mark an answer as best? Else please let us know if you need more help.

We'd love to hear from you.

Thanks!

Announcements

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- Programmatically Update Table / Columns Descriptions in Unity Catalog Without Altering Table in Data Governance

- Databricks OutOfMemory error on code that previously worked without issue in Data Engineering

- Cached Views in MERGE INTO operation in Data Engineering

- Ingesting Files - Same file name, modified content in Data Engineering

- Not able to create table shallow clone on DBR 15.0 in Data Engineering