Orphan (?) files on Databricks S3 bucket

10-04-2022 11:27 AM

Hi, I'm seeing a lot of directories (some empty, some not) under paths like:

xxxxxx.jobs/FileStore/job-actionstats/

xxxxxx.jobs/FileStore/job-result/

xxxxxx.jobs/command-results/

Can I create a lifecycle rule to delete old objects (files/directories)? After how many days? What is the best practice for this case?

Are there other directories that need a lifecycle configuration?

Thanks!

Labels: Best practice, Files, Filestore

Accepted Solutions

10-18-2022 07:10 AM

A year ago, during office hours, I asked for a way to purge cluster logs to be added to the API, and it was not even considered. I have thought about setting up Selenium to do that.

You can limit logging for the cluster by adjusting log4j. For example, put a .sh script like the one below on DBFS and use it as the start (init) script for the cluster. You additionally need to specify which log properties to adjust for the driver and the executors:

    #!/bin/bash
    # Cluster start script: append custom log4j settings on each node.
    echo "Executing on Driver: $DB_IS_DRIVER"
    # Pick the driver or executor log4j config depending on the node role.
    if [[ $DB_IS_DRIVER = "TRUE" ]]; then
        LOG4J_PATH="/home/ubuntu/databricks/spark/dbconf/log4j/driver/log4j.properties"
    else
        LOG4J_PATH="/home/ubuntu/databricks/spark/dbconf/log4j/executor/log4j.properties"
    fi
    echo "Adjusting log4j.properties here: ${LOG4J_PATH}"
    # Replace the placeholder with the property you want to set.
    echo "log4j.<custom-prop>=<value>" >> "${LOG4J_PATH}"

In the notebook, you can disable logging by using:

    sc.setLogLevel("OFF")

Additionally, in the cluster config, you can set the following for Delta files:

    spark.databricks.delta.logRetentionDuration 3 days
    spark.databricks.delta.deletedFileRetentionDuration 3 days
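
To make the start script above easier to try outside a cluster, here is a minimal sketch that wraps the same driver/executor selection in a function. The function name `append_log4j_prop` and the `LOG4J_BASE` override are assumptions made up for illustration; the default base path is the one from the script above, and the property passed in is still whatever you decide to set. It also skips the append when the exact line is already present, which is a design choice of this sketch, not something the original script does.

```bash
#!/bin/bash
# Sketch only: same driver/executor selection as the start script above.
# LOG4J_BASE and append_log4j_prop are hypothetical names for illustration.
LOG4J_BASE="${LOG4J_BASE:-/home/ubuntu/databricks/spark/dbconf/log4j}"

append_log4j_prop() {
    local prop="$1"   # full property line, e.g. "log4j.<custom-prop>=<value>"
    local role
    if [[ "${DB_IS_DRIVER:-}" = "TRUE" ]]; then
        role="driver"
    else
        role="executor"
    fi
    local path="${LOG4J_BASE}/${role}/log4j.properties"
    # Append only if the exact line is not already in the file,
    # so repeated runs do not add duplicate entries.
    grep -qxF -- "$prop" "$path" 2>/dev/null || echo "$prop" >> "$path"
    # Print the file that was targeted, for logging.
    echo "$path"
}

# Example call (commented out so sourcing this file changes nothing):
# append_log4j_prop "log4j.<custom-prop>=<value>"
```

Pointing `LOG4J_BASE` at a scratch directory that contains `driver/` and `executor/` subdirectories lets you check which file gets modified before using the function on a real cluster.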

Replies

10-13-2022 08:16 AM

10-13-2022 02:51 PM

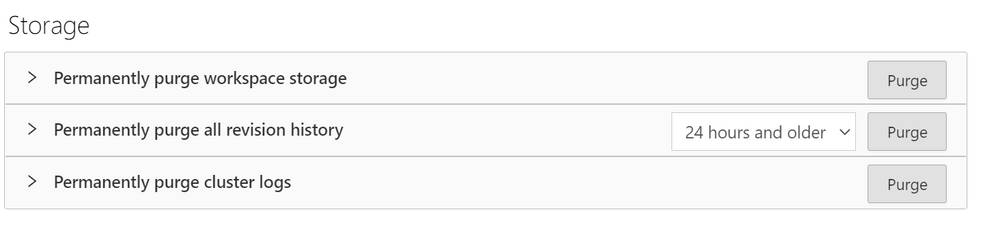

Hi! I didn't know that. Purging right now. Is there a way to schedule that so logs are retained for less time? Maybe I want to keep only the last 7 days of everything?

Thanks!
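
For the S3 side of the question, one possible shape of a 7-day retention rule is an S3 lifecycle configuration scoped to a single prefix. The sketch below only generates the JSON. The helper name `lifecycle_json` is hypothetical, the prefix is one of the paths from the original question, and `<bucket>` is a placeholder. Nothing in this thread confirms that these prefixes are safe to expire, so treat it as an illustration of the mechanism, not a recommendation.

```bash
#!/bin/bash
# Sketch only: print an S3 lifecycle configuration that expires objects
# under one prefix after N days. lifecycle_json is a hypothetical helper.
lifecycle_json() {
    local prefix="$1"   # key prefix inside the bucket
    local days="$2"     # retention in days
    cat <<EOF
{
  "Rules": [
    {
      "ID": "expire-after-${days}-days",
      "Filter": { "Prefix": "${prefix}" },
      "Status": "Enabled",
      "Expiration": { "Days": ${days} }
    }
  ]
}
EOF
}

# Example (not executed here; <bucket> is a placeholder):
# lifecycle_json "xxxxxx.jobs/command-results/" 7 > lifecycle.json
# aws s3api put-bucket-lifecycle-configuration \
#     --bucket <bucket> --lifecycle-configuration file://lifecycle.json
```

Note that `put-bucket-lifecycle-configuration` replaces the bucket's entire existing lifecycle configuration, so any rules already on the bucket would need to be included in the same JSON.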

10-28-2022 08:38 PM

Not the best solution, and I will not implement something like this in prod, but it's the best answer. Thanks!
