failed to initialise azure-event-hub with azure AAD(service principal)

04-16-2023 11:52 AM

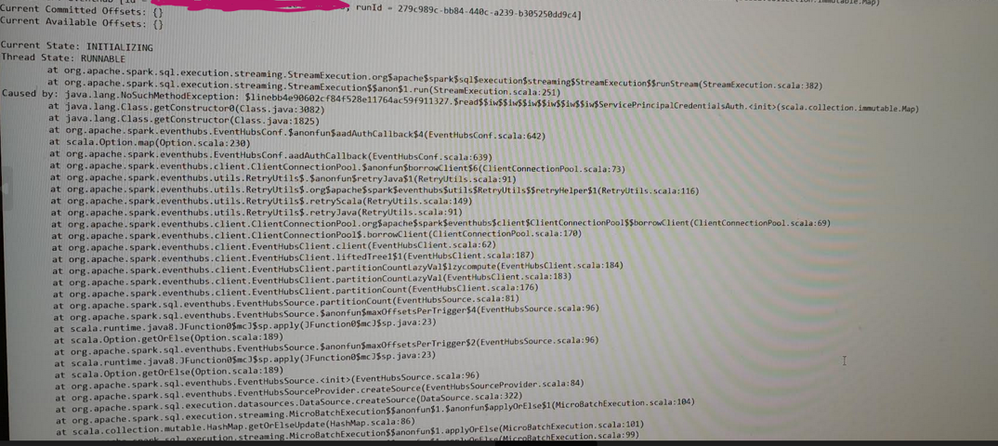

We have been trying to authenticate to Azure Event Hubs with Azure AD (a service principal) instead of a shared access key (connection string), and to read events from the event hub. It fails to initialise the Event Hubs connector and throws a 'no such method' exception.

I have attached a screenshot of the full error.

My configuration is as follows:

- I am using Databricks Runtime 10.4 LTS.

- The Azure service principal has the 'Azure Event Hubs Data Receiver' role.

- I installed the following dependencies on 10.4 LTS:

  - com.microsoft.azure:azure-eventhubs-spark_2.12:2.3.22

  - com.microsoft.azure:msal4j:1.10.1

- I am trying to implement the solutions given in the repositories below:

  https://github.com/Azure/azure-event-hubs-spark/blob/master/docs/use-aad-authentication-to-connect-eventhubs.md &

@Alex Ott, could you please help with this?

04-18-2023 10:36 AM

Just follow the instructions from my repository (don't use the Azure docs):

- Build with the correct profile

- Install the correct dependencies

- Configure as per the README

I've tested it on 10.4, and everything worked just fine.

04-18-2023 11:02 AM

@Alex Ott, thank you for your reply. I am working on a Scala implementation of this. Do you have any suggestions in that case?

I have added ServicePrincipalCredentialsAuth and ServicePrincipalAuthBase as normal classes written natively in Scala, instead of creating a separate jar for these two classes, and packaged them as part of my project jar.

I am using the code below for configuration:

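```scala
import java.net.URI
import org.apache.spark.eventhubs.{ConnectionStringBuilder, EventHubsConf}
// ServicePrincipalCredentialsAuth is the class packaged in the project jar (its package is not shown in this post)

// Service principal credentials read from a Databricks secret scope
val params: Map[String, String] = Map(
  "authority" -> dbutils.secrets.get(scope = "nykvsecrets", key = "ehaadtesttenantid"),
  "clientId" -> dbutils.secrets.get(scope = "nykvsecrets", key = "ehaadtestclientid"),
  "clientSecret" -> dbutils.secrets.get(scope = "nykvsecrets", key = "ehaadtestclientsecret"))

// Placeholders: the endpoint should be sb://<namespace>.servicebus.windows.net/
val connectionString = ConnectionStringBuilder()
  .setAadAuthConnectionString(new URI("your-ehs-endpoint"), "your-ehs-name")
  .build

val ehConf = EventHubsConf(connectionString)
  .setConsumerGroup("consumerGroup")
  .setAadAuthCallback(new ServicePrincipalCredentialsAuth(params))
  .setAadAuthCallbackParams(params)
```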

Do you see any issue with this?
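A placeholder such as `new URI("your-ehs-endpoint")` parses as a relative URI with no scheme or host. It has to be replaced with the namespace endpoint in the `sb://<namespace>.servicebus.windows.net/` form used later in this thread. The following is a minimal sketch of building that endpoint. It assumes the public Azure cloud suffix. `EventHubsEndpoint` and `forNamespace` are hypothetical names and are not part of the connector.

```scala
import java.net.URI

// Hypothetical helper (not part of azure-eventhubs-spark): builds the namespace
// endpoint URI to pass to setAadAuthConnectionString.
object EventHubsEndpoint {
  // Assumption: public Azure cloud; sovereign clouds use a different suffix.
  val ServiceBusSuffix = "servicebus.windows.net"

  def forNamespace(namespace: String): URI = {
    val ns = namespace.trim
    require(ns.nonEmpty, "namespace must not be empty")
    require(!ns.contains("."), s"expected a bare namespace name, got '$ns'")
    new URI(s"sb://$ns.$ServiceBusSuffix/")
  }

  def main(args: Array[String]): Unit = {
    val uri = forNamespace("my-namespace")
    println(uri)         // sb://my-namespace.servicebus.windows.net/
    println(uri.getHost) // my-namespace.servicebus.windows.net
  }
}
```

In the configuration code above, this would be used as `setAadAuthConnectionString(EventHubsEndpoint.forNamespace("<namespace>"), "<event hub name>")`.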

04-18-2023 03:50 PM

@Alex Ott, is there an equivalent Scala repository for this?

04-20-2023 12:31 AM

No, I specifically selected Java as implementation language for my library to avoid problems with Scala versions, etc.
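Scala libraries are published per Scala binary version, which is what the `_2.12` suffix in `azure-eventhubs-spark_2.12` denotes. Mixing artifacts built for different binary versions is one way to end up with 'no such method' errors at runtime. The following is a quick sketch for checking a coordinate against the running session. It assumes a Scala 2 runtime, such as the 2.12 that the `_2.12` artifacts here target. `ScalaBinaryCheck` is a hypothetical helper, not part of any library mentioned in this thread.

```scala
import scala.util.Properties

// Hypothetical diagnostic: compares the Scala binary suffix of a Maven
// coordinate (e.g. "_2.12") with the Scala version of the running session.
object ScalaBinaryCheck {
  // Matches an artifact name ending in a Scala suffix such as "_2.12" or "_3".
  private val ArtifactSuffix = """.*_(\d+(?:\.\d+)?)""".r

  // "2.12.15" -> "2.12"; Scala 3 artifacts use the single suffix "3".
  def binaryVersion(full: String): String = {
    val parts = full.split('.')
    if (parts.head == "3") "3" else parts.take(2).mkString(".")
  }

  // "group:artifact_2.12:version" -> Some("2.12"); Java-only artifacts -> None.
  def artifactSuffix(coordinate: String): Option[String] =
    coordinate.split(':') match {
      case Array(_, artifact, _*) =>
        artifact match {
          case ArtifactSuffix(v) => Some(v)
          case _                 => None
        }
      case _ => None
    }

  // None when the artifact has no Scala suffix, so there is nothing to compare.
  def matchesRuntime(coordinate: String): Option[Boolean] =
    artifactSuffix(coordinate).map(_ == binaryVersion(Properties.versionNumberString))

  def main(args: Array[String]): Unit = {
    println(s"Running Scala ${Properties.versionNumberString}")
    Seq(
      "com.microsoft.azure:azure-eventhubs-spark_2.12:2.3.22",
      "com.microsoft.azure:msal4j:1.10.1"
    ).foreach(c => println(s"$c -> ${matchesRuntime(c)}"))
  }
}
```

Java-only artifacts such as `msal4j` carry no Scala suffix, so `matchesRuntime` returns `None` for them. This is the kind of independence from Scala versions described above.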

04-21-2023 09:43 AM

I think it is working now. My mistake was testing this code directly in a Databricks Scala notebook with only the libraries com.microsoft.azure:azure-eventhubs-spark_2.12:2.3.22 and com.microsoft.azure:msal4j:1.10.1 installed on the cluster. It was failing because a few compile-time libraries/packages that come with the azure-event-hubs-spark and msal4j libraries were missing.

For Scala, I also had to make minor syntactical changes, and then it started working.

Thank you very much.
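The fix described above came down to the classpath. The notebook was missing some of the libraries that the two packages depend on. A small check like the following, run in the notebook or as part of a job, can show which classes are not loadable. This is a sketch only. `ClasspathCheck` is a hypothetical helper, and the class names in `main` are assumptions about the entry points of the connector and MSAL4J.

```scala
// Hypothetical diagnostic: lists the class names the current class loader
// cannot load, either because the jar is absent (ClassNotFoundException) or
// because a type the class extends cannot be resolved (a LinkageError such
// as NoClassDefFoundError).
object ClasspathCheck {
  def isLoadable(name: String, loader: ClassLoader): Boolean =
    try {
      Class.forName(name, false, loader) // load without running static initializers
      true
    } catch {
      case _: ClassNotFoundException => false
      case _: LinkageError           => false
    }

  def missingClasses(names: Seq[String]): Seq[String] = {
    val loader = Option(Thread.currentThread.getContextClassLoader)
      .getOrElse(getClass.getClassLoader)
    names.filterNot(isLoadable(_, loader))
  }

  def main(args: Array[String]): Unit = {
    // Assumed entry points of azure-eventhubs-spark and msal4j; adjust as needed.
    val expected = Seq(
      "org.apache.spark.eventhubs.EventHubsConf",
      "org.apache.spark.eventhubs.utils.AadAuthenticationCallback",
      "com.microsoft.aad.msal4j.ConfidentialClientApplication"
    )
    val missing = missingClasses(expected)
    if (missing.isEmpty) println("All expected classes are loadable")
    else missing.foreach(n => println(s"Missing: $n"))
  }
}
```

A class that loads successfully can still fail later with a 'no such method' error if a library it calls at runtime is present in an incompatible version. This check only catches classes that are absent or whose parent types cannot be resolved.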

08-07-2023 11:26 AM

Hey Ravi,

Can you please provide the steps, or any sample code in Scala?

We are also planning to do the same and are facing issues.

Thanks,

Arun G

a month ago

@Ravikumashi, hi Ravi, could you please share the working sample code showing how you got this to work in Scala and how you added the classes ServicePrincipalCredentialsAuth and ServicePrincipalAuthBase?

I am currently trying to stream Event Hubs data using a service principal and failing to do so.

Thanks,

Swathi.

a month ago - last edited a month ago

I added ServicePrincipalCredentialsAuth and ServicePrincipalAuthBase as normal classes instead of creating a separate jar for these two classes, and packaged them as part of my project jar.

I then used the code below to configure/initialize Event Hubs with the SPN credentials.

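```scala
import java.net.URI
import org.apache.spark.eventhubs.{ConnectionStringBuilder, EventHubsConf}
// ServicePrincipalCredentialsAuth and ServicePrincipalAuthBase are packaged in the project jar

// Service principal credentials read from a Databricks secret scope
val params: Map[String, String] = Map(
  "authority" -> dbutils.secrets.get(scope = "databricks-secretes-scope", key = "ehaadtesttenantid"),
  "clientId" -> dbutils.secrets.get(scope = "databricks-secretes-scope", key = "ehaadtestclientid"),
  "clientSecret" -> dbutils.secrets.get(scope = "databricks-secretes-scope", key = "ehaadtestclientsecret"))

// Namespace endpoint: replace <EventHubsNamespaceName> with your namespace
val yourEventHubEndpoint = "sb://<EventHubsNamespaceName>.servicebus.windows.net/"

// Pass the String value itself; $"..." would create a Spark column, not a string
val connectionString = ConnectionStringBuilder()
  .setAadAuthConnectionString(new URI(yourEventHubEndpoint), "yourEventHubsInstance")
  .build

val ehConf = EventHubsConf(connectionString)
  .setConsumerGroup("YourconsumerGroupForEventHubInstance")
  .setAadAuthCallback(new ServicePrincipalCredentialsAuth(params))
  .setAadAuthCallbackParams(params)

// EventHubsConf.toMap is a parameterless method, so it is called without ()
val inputStreamdf = spark
  .readStream
  .format("eventhubs")
  .options(ehConf.toMap)
  .load()
```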

You can copy the ServicePrincipalCredentialsAuth and ServicePrincipalAuthBase class files from the repository below. On my side, I only changed the variable declarations to Scala style and kept the rest as is.

https://github.com/alexott/databricks-playground/tree/main/kafka-eventhubs-aad-auth/src/main/java/ne...

More details on how to stream data to and from Event Hubs can be found here: https://github.com/Azure/azure-event-hubs-spark/blob/master/docs/PySpark/structured-streaming-pyspar...

Hope this helps.
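The two callback classes are ported from Java, so the values they hand to MSAL4J are a likely place for a Scala port to go wrong. The following is a minimal sketch of that string handling, under three assumptions:

- The `authority` entry holds a bare tenant ID (as in the secrets above).
- The callback turns that ID into a `login.microsoftonline.com` authority URL.
- The token request uses the usual client-credentials `<resource>/.default` scope.

`AadCallbackInputs` and its methods are hypothetical, and `https://eventhubs.azure.net/` in `main` is an assumed resource URI.

```scala
// Hypothetical helpers, not copied from ServicePrincipalCredentialsAuth: they
// isolate the string handling a Scala port of an MSAL4J-based callback needs.
object AadCallbackInputs {
  // Keys used in the params map in the posts above.
  val RequiredKeys: Seq[String] = Seq("authority", "clientId", "clientSecret")

  // Required keys that are absent or blank (e.g. a secret that resolved to "").
  def missingKeys(params: Map[String, String]): Seq[String] =
    RequiredKeys.filter(k => params.get(k).forall(_.trim.isEmpty))

  // Assumption: "authority" is a bare tenant ID; a full https:// URL passes through.
  def authorityUrl(tenantOrUrl: String): String = {
    val t = tenantOrUrl.trim
    if (t.startsWith("https://")) t else "https://login.microsoftonline.com/" + t
  }

  // Client-credentials scopes take the form "<resource>/.default".
  def scopeFor(audience: String): String = {
    val a = audience.trim
    if (a.endsWith("/")) a + ".default" else a + "/.default"
  }

  def main(args: Array[String]): Unit = {
    val params = Map("authority" -> "my-tenant-id", "clientId" -> "my-client-id", "clientSecret" -> "")
    println(missingKeys(params))                      // List(clientSecret)
    println(authorityUrl(params("authority")))        // https://login.microsoftonline.com/my-tenant-id
    println(scopeFor("https://eventhubs.azure.net/")) // https://eventhubs.azure.net/.default
  }
}
```

In a real callback, `authorityUrl` would feed MSAL4J's `ConfidentialClientApplication.builder(...).authority(...)`, and `scopeFor` would feed `ClientCredentialParameters.builder(...)`. Checking `missingKeys(params)` before building `EventHubsConf` fails fast when a secret resolves to an empty string.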
