
- Hi Experts , I am new to databricks. I want to kn...


02-13-2022 08:43 PM

1 ACCEPTED SOLUTION

Accepted Solutions


02-13-2022 10:24 PM

You can write your ETL logic in notebooks, run the notebook on a cluster, and write the data to a location where your S3 bucket is mounted.

Next, you can register that table with the Hive metastore and access the same table in Databricks SQL.

To see the table, go to the Data tab and select your schema/database to see the registered tables.

Two ways to do this:

Option 1:

df.write.option("path", "<s3-path-of-table>").saveAsTable(tableName)

Option 2:

%python

df.write.format("delta").save("<s3-path-of-table>")

%sql

CREATE TABLE <table-name>

USING DELTA

LOCATION '<s3-path-of-table>';
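Option 2 can also be driven entirely from a Python cell. The following is a minimal sketch, assuming a `spark` session and a DataFrame `df` already exist in the notebook. The helper names `register_delta_table_sql` and `write_and_register` are hypothetical and not part of any Databricks API.

```python
def register_delta_table_sql(table_name: str, location: str) -> str:
    """Build the CREATE TABLE statement from Option 2 for a Delta path."""
    # Reject quotes so the path cannot break out of the SQL string literal.
    if "'" in location:
        raise ValueError("location must not contain single quotes")
    return f"CREATE TABLE {table_name} USING DELTA LOCATION '{location}'"


def write_and_register(spark, df, table_name: str, location: str) -> None:
    """Write df as Delta files, then register them in the metastore.

    `spark` and `df` are assumed to be the notebook's SparkSession and a
    DataFrame produced by the ETL logic.
    """
    # Step 1: write the data as Delta files to the mounted location.
    df.write.format("delta").save(location)
    # Step 2: register a table that points at those files.
    spark.sql(register_delta_table_sql(table_name, location))
```

In a notebook this might be called as `write_and_register(spark, df, "default.my_table", "/mnt/<mount-name>/my_table")`, where the mount path is a placeholder.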

7 REPLIES



02-14-2022 03:05 AM

@Aman Sehgal So basically you are saying to write the transformed data from Databricks PySpark into ADLS Gen2, and then use Databricks SQL Analytics to run what you described below?

%sql

CREATE TABLE <table-name>

USING DELTA

LOCATION <s3-path-of-table>


02-14-2022 05:11 AM

Right. Databricks is a platform for performing transformations. Ideally, you should mount either your S3 bucket or your ADLS Gen2 location in DBFS.

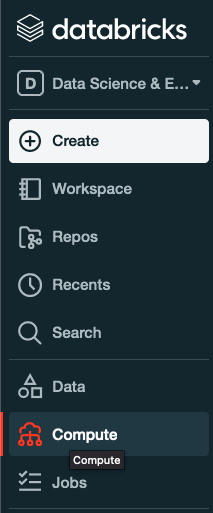

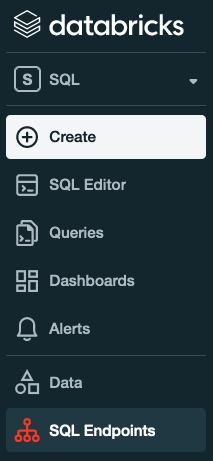

You can then read, write, update, and delete your data. To run SQL analytics from the SQL tab, you'll have to register a table and start an endpoint.

You can also query the data via notebooks by using SQL in a cell. The only difference is, you'll have to spin up a cluster instead of an endpoint.
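For the mounting step mentioned in this reply, here is a minimal sketch, assuming it runs in a Databricks notebook where `dbutils` is available and the cluster already has access to the bucket (for example through an instance profile). The helper names and the `/mnt/<bucket-name>` convention are illustrative choices, not requirements.

```python
def mount_point_for(bucket_name: str) -> str:
    """Return the DBFS path used for this bucket (a naming assumption)."""
    return f"/mnt/{bucket_name}"


def mount_s3_bucket(dbutils, bucket_name: str) -> str:
    """Mount s3a://<bucket_name> in DBFS unless it is already mounted."""
    mount_point = mount_point_for(bucket_name)
    # dbutils.fs.mounts() lists existing mounts; skip if ours is present.
    already_mounted = any(
        m.mountPoint == mount_point for m in dbutils.fs.mounts()
    )
    if not already_mounted:
        dbutils.fs.mount(
            source=f"s3a://{bucket_name}", mount_point=mount_point
        )
    return mount_point
```

Once mounted, the returned path can be used as the table location when writing and registering data.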


02-15-2022 01:09 AM

@Aman Sehgal You are confusing me. We need to spin up a cluster if we use a SQL endpoint, right?

And can we not use the magic command "%sql" within the same notebook to write the PySpark data to the SQL endpoint as a table?


02-15-2022 05:58 AM

When you're in the SQL tab of the workspace, you need to spin up a SQL endpoint. After spinning up an endpoint, go to the Queries tab, where you can write a SQL query against your tables.


02-18-2022 08:03 AM

@Aman Sehgal Can we write data from the Data Engineering workspace to a SQL endpoint in Databricks?


02-19-2022 03:39 PM

You can write data to a table (e.g. default.my_table) and consume data from the same table using a SQL endpoint.
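As a rough illustration of that flow from the notebook side, here is a sketch that assumes a `spark` session and a DataFrame `df` exist. The `write_then_read` helper, the `overwrite` mode, and the `LIMIT 10` preview are assumptions made for this example. The same `SELECT` could be run from a SQL endpoint once the table is registered.

```python
def qualified_name(database: str, table: str) -> str:
    """Join database and table into the name used in SQL queries."""
    return f"{database}.{table}"


def write_then_read(spark, df, database="default", table="my_table"):
    """Save df as a metastore table, then read a few rows back."""
    name = qualified_name(database, table)
    # Overwrite is an assumption here; use "append" to keep existing rows.
    df.write.format("delta").mode("overwrite").saveAsTable(name)
    # Any cluster or SQL endpoint on the same metastore can query the table.
    return spark.sql(f"SELECT * FROM {name} LIMIT 10")
```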
