- Problem with Databricks JDBC connection: Error occ...

03-02-2022 08:14 AM

I have a Java program like this to test out the Databricks JDBC connection with the Databricks JDBC driver.

    Connection connection = null;
    try {
        Class.forName(driver);
        connection = DriverManager.getConnection(url, username, password);
        if (connection != null) {
            System.out.println("Connection Established");
        } else {
            System.out.println("Connection Failed");
        }
        Statement statement = connection.createStatement();
        ResultSet rs = statement.executeQuery("select * from standard_info_service.daily_transactions");
        while (rs.next()) {
            System.out.print("created_date: " + rs.getInt("created_date") + ", ");
            System.out.println("daily_transactions: " + rs.getInt("daily_transactions"));
        }
    } catch (Exception e) {
        System.out.println(e);
    }

This program, however, throws an error like this:

    Connection Established
    WARNING: sun.reflect.Reflection.getCallerClass is not supported. This will impact performance.
    java.sql.SQLException: [Simba][SparkJDBCDriver](500618) Error occured while deserializing arrow data: sun.misc.Unsafe or java.nio.DirectByteBuffer.<init>(long, int) not available

What will be the solution?

Labels: Databricks JDBC, Jdbc

1 ACCEPTED SOLUTION

03-23-2022 05:19 PM

Hi @Jose Gonzalez ,

This similar issue in the Snowflake JDBC driver is a good reference. I was able to get this to work on OpenJDK 17 by specifying this JVM option:

--add-opens=java.base/java.nio=ALL-UNNAMED
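To confirm that the option actually reached the JVM, a small check like the one below can help. It is only a sketch: the class name `NioOpenCheck` is made up for illustration, it needs Java 9 or later (it uses `java.lang.Module`), and it assumes the code runs on the classpath, that is, in the unnamed module that `ALL-UNNAMED` refers to.

```java
import java.nio.ByteBuffer;

// Hypothetical helper, not part of the Databricks driver.
final class NioOpenCheck {

    /** Returns true if package java.nio (in java.base) is open to this class's module. */
    static boolean javaNioIsOpen() {
        Module javaBase = ByteBuffer.class.getModule();   // the java.base module
        Module caller = NioOpenCheck.class.getModule();   // unnamed module when run from the classpath
        return javaBase.isOpen("java.nio", caller);
    }

    public static void main(String[] args) {
        System.out.println("Java version: " + System.getProperty("java.specification.version"));
        System.out.println("java.nio open to this module: " + javaNioIsOpen());
    }
}
```

Running it once with and once without `--add-opens=java.base/java.nio=ALL-UNNAMED` on the `java` command line should show whether the flag is being picked up by the launcher, the IDE run configuration, or the build tool.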

However, I came across another issue when using Apache DBCP to connect to a Databricks SQL endpoint:

    Caused by: java.sql.SQLFeatureNotSupportedException: [Simba][JDBC](10220) Driver does not support this optional feature.
        at com.simba.spark.exceptions.ExceptionConverter.toSQLException(Unknown Source)
        at com.simba.spark.jdbc.common.SConnection.setAutoCommit(Unknown Source)
        at com.simba.spark.jdbc.jdbc42.DSS42Connection.setAutoCommit(Unknown Source)
        at org.apache.commons.dbcp2.DelegatingConnection.setAutoCommit(DelegatingConnection.java:801)
        at org.apache.commons.dbcp2.DelegatingConnection.setAutoCommit(DelegatingConnection.java:801)

The same problem occurred after I switched to Hikari.

Finally, I got it working by just using BasicDataSource and setting auto-commit to false. BasicDataSource is not suitable for production, though. Will there be a new driver release that handles this better?
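For anyone hitting the same `setAutoCommit` failure, the sketch below illustrates the failure mode and one possible guard. It is not what I did above and it is not a driver or pool feature: `AutoCommitGuard`, `tolerant`, `acceptsAutoCommit` and `stubRejectingAutoCommit` are hypothetical names, and the stub only imitates a driver that rejects `setAutoCommit`. The idea is a dynamic proxy around `java.sql.Connection` that ignores a `SQLFeatureNotSupportedException` from `setAutoCommit` and passes everything else through. Whether silently ignoring the call is acceptable depends on the transaction semantics you need.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.SQLFeatureNotSupportedException;

// Hypothetical helper for illustration only.
final class AutoCommitGuard {

    /** Wraps a connection so an unsupported setAutoCommit call is ignored instead of thrown. */
    static Connection tolerant(Connection delegate) {
        InvocationHandler handler = (proxy, method, args) -> {
            try {
                return method.invoke(delegate, args);
            } catch (InvocationTargetException e) {
                Throwable cause = e.getCause();
                if ("setAutoCommit".equals(method.getName())
                        && cause instanceof SQLFeatureNotSupportedException) {
                    return null; // setAutoCommit is void, so there is nothing to return
                }
                throw cause; // every other failure is rethrown unchanged
            }
        };
        return newProxy(handler);
    }

    /** Returns true if setAutoCommit(false) completes without an SQLException. */
    static boolean acceptsAutoCommit(Connection connection) {
        try {
            connection.setAutoCommit(false);
            return true;
        } catch (SQLException e) {
            return false;
        }
    }

    /** Stand-in for a driver connection that rejects setAutoCommit (assumption, not the Simba driver). */
    static Connection stubRejectingAutoCommit() {
        InvocationHandler handler = (proxy, method, args) -> {
            if ("setAutoCommit".equals(method.getName())) {
                throw new SQLFeatureNotSupportedException("Driver does not support this optional feature.");
            }
            if ("isClosed".equals(method.getName())) {
                return false;
            }
            throw new UnsupportedOperationException(method.getName());
        };
        return newProxy(handler);
    }

    private static Connection newProxy(InvocationHandler handler) {
        return (Connection) Proxy.newProxyInstance(
                AutoCommitGuard.class.getClassLoader(),
                new Class<?>[] {Connection.class},
                handler);
    }

    public static void main(String[] args) {
        Connection raw = stubRejectingAutoCommit();
        System.out.println("raw accepts setAutoCommit:     " + acceptsAutoCommit(raw));
        System.out.println("guarded accepts setAutoCommit: " + acceptsAutoCommit(tolerant(raw)));
    }
}
```

A pool would have to be handed the wrapped connections (for example through a custom `DataSource`) for this to have any effect, which is left out here.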

13 REPLIES

03-02-2022 11:39 PM

Hi @Tony Zhou, can you specify the versions you're using?

03-03-2022 11:19 AM

03-09-2022 06:09 PM

This error is mentioned in the Spark documentation (https://spark.apache.org/docs/latest/). It looks like it is specific to the Java version and can be avoided by setting the properties mentioned there.

03-09-2022 08:52 PM

Hi @Alice Hung, thank you for your contribution.

03-09-2022 08:51 PM

Hi @Tony Zhou, it's mentioned in the docs:

Spark runs on Java 8/11, Scala 2.12/2.13, Python 3.6+ and R 3.5+.

Python 3.6 support is deprecated as of Spark 3.2.0. Java 8 prior to version 8u201 support is deprecated as of Spark 3.2.0.

For the Scala API, Spark 3.2.1 uses Scala 2.12.

You will need to use a compatible Scala version (2.12.x).

Note:

For Python 3.9, Arrow optimisation and pandas UDFs might not work due to the supported Python versions in Apache Arrow.

Please refer to the latest Python Compatibility page.

For Java 11, -Dio.netty.tryReflectionSetAccessible=true is required additionally for Apache Arrow library.

This prevents java.lang.UnsupportedOperationException: sun.misc.Unsafe or java.nio.DirectByteBuffer.<init>(long, int) not available when Apache Arrow uses Netty internally.
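As a quick sanity check, the snippet below reports whether that system property is set in the running JVM. It is a minimal sketch: the class name `NettyReflectionFlag` is hypothetical, and it only reads the property. It does not tell you whether the Arrow or Netty copy bundled in a particular JDBC driver honors it, which this thread does not confirm.

```java
// Hypothetical helper for illustration only.
final class NettyReflectionFlag {

    /** Property name quoted from the Spark documentation. */
    static final String PROPERTY = "io.netty.tryReflectionSetAccessible";

    /** Returns true only if the property is present and equal to "true" (case-insensitive). */
    static boolean isEnabled() {
        return Boolean.getBoolean(PROPERTY);
    }

    public static void main(String[] args) {
        System.out.println("Java version: " + System.getProperty("java.specification.version"));
        System.out.println(PROPERTY + " enabled: " + isEnabled());
    }
}
```

Started as `java -Dio.netty.tryReflectionSetAccessible=true NettyReflectionFlag`, the second line should report the property as enabled. If it does not, the flag is probably being passed to the wrong process, for example to the build tool instead of the application.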

03-22-2022 09:50 AM

Hi @Tony Zhou ,

Just a friendly follow-up. Did @Kaniz Fatma's response help you resolve this issue? If not, please share more details, like the full error stack trace and some code snippets.

03-24-2022 08:58 AM

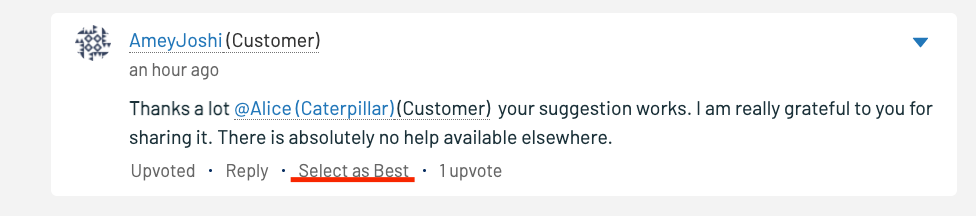

Thanks a lot, @Alice Hung, your suggestion works. I am really grateful to you for sharing it. There is absolutely no help available elsewhere.

03-24-2022 09:04 AM

Hi @Amey Joshi, happy to know that it helped and you were able to resolve it. Would you like to mark @Alice Hung's answer as the best answer?

03-24-2022 09:47 AM

Definitely, I would want to. But I can't find an option to mark it as the best.

03-24-2022 09:58 AM

03-24-2022 10:07 AM

I am sorry @Kaniz Fatma but I don't see that option available to me. If you see it, kindly use it on my behalf.

03-24-2022 08:04 PM

Hi @Amey Joshi, it's weird that you cannot see that option. Let me get back to you on this. In the meantime, I'll select the best answer for you 😊.
