Delta Live Table Pipeline with Multiple Notebooks


11-07-2022 01:53 PM

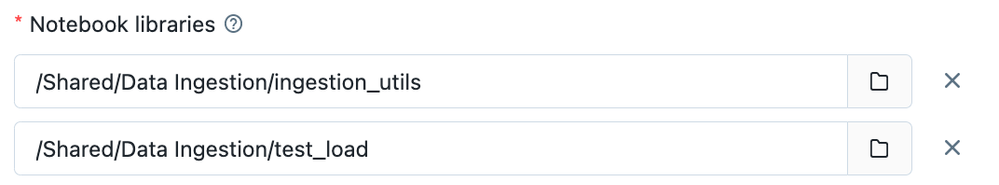

I have two notebooks for my Delta Live Tables pipeline. The first is a utils notebook with functions I will reuse in other pipelines. The second contains the actual Delta Live Tables definitions. I added both notebooks to the pipeline settings.


Accepted Solution


11-08-2022 11:54 AM

Hi @Dave Wilson, neither `%run` nor `dbutils` is supported in DLT. This is intentionally disabled because DLT is declarative, and we cannot perform data movement on our own.

To answer your first question: unfortunately, there is no option to make the utils notebook run first. The only option is to combine the utils notebook and your main notebook into one. This does not address reusability in DLT, so we have raised a feature request with the product team. The engineering team is working on this internally, and we can expect a feature that addresses this use case soon.

Thanks.

4 Replies


11-08-2022 06:43 AM

Per another question, we are unable to use either magic commands or `dbutils.notebook.run` with the Pro-level Databricks account or with Delta Live Tables. Are there any other ways to use generic functions from other notebooks within a Delta Live Tables pipeline?


01-23-2023 06:36 AM

Hi @Vivian Wilfred and @Dave Wilson, we solved our code-reuse problem by using Repos and pointing our main code to the shared code:

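```python
import os
import sys

# Add the repo folder that holds the shared Python scripts to the import path
sys.path.append(os.path.abspath('/Workspace/Repos/[your repo]/[folder with the python scripts]'))
from your_class import *
```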

This only works if your reusable code is in Python. Also, depending on what you want to do: we noticed that the DLT code is always executed last, no matter where it appears in the script.
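The `sys.path` approach above can be sketched outside Databricks to show the mechanism. This is a minimal, self-contained illustration, assuming the reusable code is saved as a plain `.py` file rather than as a notebook; a temporary directory stands in for the repo folder, and `abc_utils` and `add_suffix` are hypothetical names. Note that a shared module should not be called `abc`, because that name is already taken by Python's standard-library `abc` module.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Stand-in for the shared repo folder (e.g. a folder under /Workspace/Repos/...).
# A temporary directory is used so this sketch runs anywhere.
shared_dir = Path(tempfile.mkdtemp())

# Hypothetical reusable module. It is not named "abc", which would resolve
# to the standard-library abc module instead of this file.
(shared_dir / "abc_utils.py").write_text(
    "def add_suffix(name, suffix='_clean'):\n"
    "    return f'{name}{suffix}'\n"
)

# Same idea as the reply above: put the folder on sys.path, then import from it.
if str(shared_dir) not in sys.path:
    sys.path.append(str(shared_dir))
importlib.invalidate_caches()  # clear cached path finders so the new file is found

from abc_utils import add_suffix

print(add_suffix("orders"))  # prints: orders_clean
```

In the DLT notebook itself, the same two steps (append the repo folder to `sys.path`, then import) would go at the top, before the table definitions.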


05-02-2023 07:43 AM

Could you explain this in more detail?

Let's say I have a notebook abc that is reusable, and pqr is the one I will list in the DLT pipeline.

How do I call functions from abc in pqr?
