- Databricks-connect version 13.0.0 throws Exception...

04-27-2023 02:40 AM

I'm trying to connect to a cluster with Runtime 13.0 and Unity Catalog through databricks-connect version 13.0.0 (for Python). The spark session seems to initialize correctly but anytime I try to use it, I get the following error:

{SparkConnectGrpcException}<_MultiThreadedRendezvous of RPC that terminated with:

status = StatusCode.INVALID_ARGUMENT

details = "Missing required field 'UserContext' in the request."

debug_error_string = "UNKNOWN:Error received from peer {created_time:"2023-04-27T09:32:18.370432594+00:00", grpc_status:3, grpc_message:"Missing required field \'UserContext\' in the request."}"

I've tried starting the session through a configuration profile, through the SPARK_REMOTE environment variable, and through plain code, but all of these options return the same result.

Any ideas are appreciated


1 ACCEPTED SOLUTION

05-02-2023 10:44 PM

Hello @Achilleas Voutsas @Einav Bezalel, I managed to run it by setting an environment variable called USER to any value before starting the DatabricksSession. That is, in Python:

import os

from databricks.connect import DatabricksSession

from databricks.sdk.core import Config

os.environ["USER"] = "anything"

config = Config(profile="DEFAULT")

spark = DatabricksSession.builder.sdkConfig(config).getOrCreate()
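The workaround above overwrites USER unconditionally. A minimal sketch of a gentler variant, assuming the only requirement is that USER is non-empty when the session is built: set it only when it is missing. The helper names `ensure_user_env` and `create_session`, and the fallback to the `USERNAME` variable that Windows normally sets, are illustrative assumptions and not part of databricks-connect.

```python
import os


def ensure_user_env(fallback="anything"):
    """Make sure USER is set and return the value in effect.

    Hypothetical helper: keeps an existing USER, otherwise copies
    USERNAME (normally set on Windows) or uses the given fallback.
    """
    if not os.environ.get("USER"):
        os.environ["USER"] = os.environ.get("USERNAME") or fallback
    return os.environ["USER"]


def create_session(profile="DEFAULT"):
    """Build a DatabricksSession once USER is guaranteed to be set."""
    # Imported lazily so ensure_user_env() works without databricks-connect.
    from databricks.connect import DatabricksSession
    from databricks.sdk.core import Config

    ensure_user_env()
    return DatabricksSession.builder.sdkConfig(Config(profile=profile)).getOrCreate()
```

Calling `create_session()` in place of the three lines in the post should behave the same on a machine where USER is unset, while leaving an existing value untouched elsewhere.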

10 REPLIES

04-27-2023 05:55 AM

@Carla F what's your Python version? Did you get a chance to check whether any prerequisites are missing? For example:

The minor version of your client Python installation must be the same as the minor Python version of your Databricks cluster. Databricks Runtime 13.0 ML and Databricks Runtime 13.0 both use Python 3.10.

Also, please check that all the other requirements are met:

https://docs.databricks.com/dev-tools/databricks-connect.html
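The version requirement quoted above can be checked locally before connecting. This is a minimal sketch; `python_minor_matches` is a hypothetical helper name, and the default of (3, 10) comes from the Runtime 13.0 requirement quoted above.

```python
import sys


def python_minor_matches(required=(3, 10), actual=None):
    """Return True if the local major.minor version equals the cluster's.

    `required` defaults to Python 3.10, as used by Databricks Runtime 13.0.
    `actual` defaults to the running interpreter's version.
    """
    if actual is None:
        actual = sys.version_info[:2]
    # Compare only (major, minor); the patch level is allowed to differ.
    return tuple(actual[:2]) == tuple(required)


if __name__ == "__main__":
    local = ".".join(str(part) for part in sys.version_info[:2])
    print(f"Local Python {local}; matches 3.10: {python_minor_matches()}")
```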

04-27-2023 05:55 AM

Hi, thanks for the response. I'm using Python 3.10 and Runtime 13.0, so that should not be the issue.

04-27-2023 06:02 AM

Also, I've checked the rest of the requirements, and as far as I can see they are all met.

04-30-2023 03:33 AM

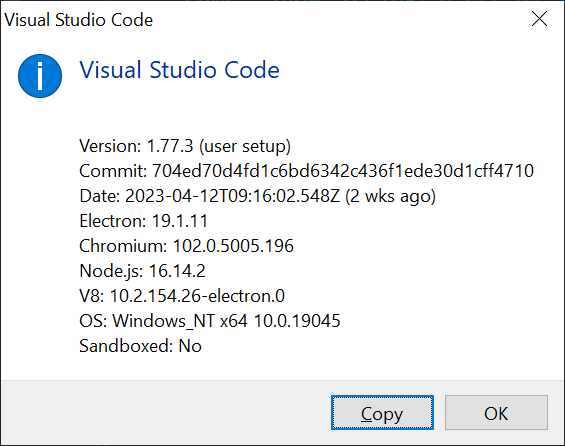

I also experience the same issue on a Windows 10 laptop running Python 3.10.10. See the details of the VS Code setup and Windows version.

Python 3.10.10 (tags/v3.10.10:aad5f6a, Feb 7 2023, 17:20:36) [MSC v.1929 64 bit (AMD64)] on win32

Python libraries

asttokens==2.2.1

backcall==0.2.0

certifi==2022.12.7

charset-normalizer==3.1.0

click==8.1.3

colorama==0.4.6

comm==0.1.3

databricks-cli==0.17.6

databricks-connect==13.0.0

databricks-sdk==0.0.7

debugpy==1.6.7

decorator==5.1.1

executing==1.2.0

googleapis-common-protos==1.59.0

grpcio==1.54.0

grpcio-status==1.54.0

idna==3.4

ipykernel==6.22.0

ipython==8.13.1

jedi==0.18.2

jupyter_client==8.2.0

jupyter_core==5.3.0

matplotlib-inline==0.1.6

nest-asyncio==1.5.6

numpy==1.24.3

oauthlib==3.2.2

packaging==23.1

pandas==2.0.1

parso==0.8.3

pickleshare==0.7.5

platformdirs==3.5.0

prompt-toolkit==3.0.38

protobuf==4.22.3

psutil==5.9.5

pure-eval==0.2.2

py4j==0.10.9.7

pyarrow==11.0.0

Pygments==2.15.1

PyJWT==2.6.0

python-dateutil==2.8.2

pytz==2023.3

pywin32==306

pyzmq==25.0.2

requests==2.29.0

six==1.16.0

stack-data==0.6.2

tabulate==0.9.0

tornado==6.3.1

traitlets==5.9.0

tzdata==2023.3

urllib3==1.26.15

wcwidth==0.2.6
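A list like the one above can be narrowed to the packages most relevant here. This is a sketch using only the standard library; `installed_versions` and the default package selection are illustrative choices, not an official diagnostic.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(
    packages=("databricks-connect", "databricks-sdk", "grpcio", "protobuf"),
):
    """Map each package name to its installed version, or None if absent."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            # Not installed in the current environment.
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}=={ver}" if ver else f"{name}: not installed")
```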

04-30-2023 07:45 AM

Same issue for me: Databricks Runtime 13.0, Python 3.10.11, single user access mode for the cluster, and Unity Catalog is enabled for the workspace.


05-03-2023 04:03 AM

I guess there is a bug in the Windows version. On macOS I do not have this issue.

Many thanks @Carla F !!!

05-03-2023 04:28 AM

Hi all, I had the same issue (on Windows) and managed to get it working by specifying a user ID in the Spark remote.

There's the hard-coded way:

from databricks.connect import DatabricksSession

spark = DatabricksSession.builder.remote("sc://<workspace-instance-name>:443/;token=<access-token-value>;x-databricks-cluster-id=<cluster-id>;user_id=123123").getOrCreate()

Or with an environment variable:

set SPARK_REMOTE=sc://<databricks_workspace_name>:443/;token=<token>;x-databricks-cluster-id=<cluster-id>;user_id=123123

Or similar in Python. The actual value of user_id (123123 here) can be anything.
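For the "similar in Python" variant, the connection string can be assembled in code and exported as SPARK_REMOTE before the session is created. This is a minimal sketch: `build_spark_remote` is a hypothetical helper, the parameter names mirror the string shown in this reply, and values are inserted as-is without any escaping.

```python
import os


def build_spark_remote(host, token, cluster_id, user_id="123123", port=443):
    """Assemble an sc:// connection string that includes a user_id.

    Hypothetical helper; values are inserted verbatim, so they must not
    contain ';' or '=' characters.
    """
    params = {
        "token": token,
        "x-databricks-cluster-id": cluster_id,
        "user_id": user_id,  # any value works, according to this reply
    }
    query = ";".join(f"{key}={value}" for key, value in params.items())
    return f"sc://{host}:{port}/;{query}"


if __name__ == "__main__":
    # Placeholders only; substitute real workspace values before use.
    os.environ["SPARK_REMOTE"] = build_spark_remote(
        "<workspace-instance-name>", "<access-token-value>", "<cluster-id>"
    )
    print(os.environ["SPARK_REMOTE"])
```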

05-03-2023 04:36 AM

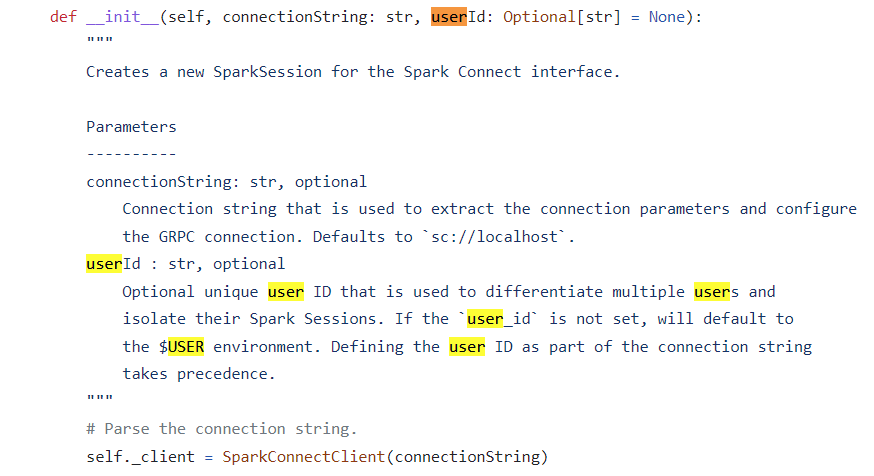

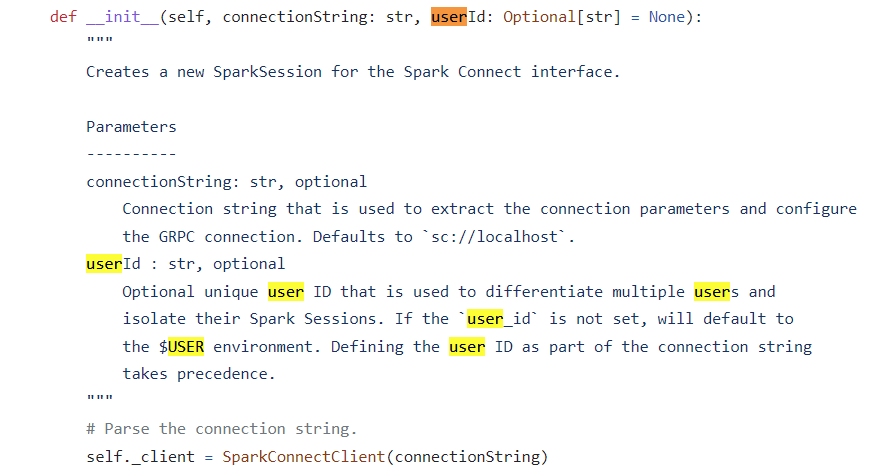

Thanks @Martin Heemskerk, I think both of our options have the same effect. It's something in the Spark Connect session, but it "should" be optional.

09-23-2023 08:43 AM

I had the same error on every runtime up to DBR 13.3 LTS. After upgrading to 14.0, I was able to connect to my Databricks compute from my local environment.
