Read CSV directly from URL with PySpark

10-29-2021 04:08 AM

I would like to load a CSV file directly into a Spark DataFrame in Databricks. I tried the following code:

```python
from pyspark import SparkFiles

url = "https://opendata.reseaux-energies.fr/explore/dataset/eco2mix-national-tr/download/?format=csv&timezone=Europe/Berlin&lang=fr&use_labels_for_header=true&csv_separator=%3B"

spark.sparkContext.addFile(url)

df = spark.read.csv(SparkFiles.get("eco2mix-national-tr.csv"), header=True, inferSchema=True)
```

I got the following error:

```
Path does not exist: dbfs:/local_disk0/spark-c03e8325-0ab6-4c2e-bffb-c9d290283b31/userFiles-a507dd96-cc63-4e47-9b0f-44d2a940bb10/eco2mix-national-tr.csv
```

Thanks

Accepted solution

10-29-2021 07:46 AM

OK, so I tested it myself, and I think I found the issue:

addFile() does not create a file called 'eco2mix-national-tr.csv', but a file called 'download'.

You can check this with the %sh magic command:

```
%sh
ls "/local_disk0/spark-.../userFiles-.../"
```

You will get a list of files: no eco2mix file, but a file called 'download'.
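Going by this observation, the local name seems to come from the URL itself: the last non-empty segment of the URL path, with the query string (`?format=csv...`) ignored. The sketch below predicts that name so you know what to pass to `SparkFiles.get()` without listing the directory first. This rule is an assumption inferred from the 'download' file seen here, not documented Spark behavior, and `spark_files_name` is a hypothetical helper name.

```python
from urllib.parse import unquote, urlsplit


def spark_files_name(url: str) -> str:
    """Guess the file name SparkFiles.get() expects after addFile(url).

    Assumption (based on the 'download' file observed in this thread):
    the name is the last non-empty segment of the URL path; the query
    string after '?' plays no part in it.
    """
    path = urlsplit(url).path  # query string and fragment are split off here
    segments = [s for s in path.split("/") if s]
    if not segments:
        raise ValueError(f"URL has no path segment to name a file after: {url!r}")
    # Percent-escapes such as %20 are assumed to be decoded in the file name
    return unquote(segments[-1])


url = "https://opendata.reseaux-energies.fr/explore/dataset/eco2mix-national-tr/download/?format=csv"
print(spark_files_name(url))  # download
```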

To see the contents of the download file, run cat:

```
%sh
cat "/local_disk0/spark-.../userFiles-.../download"
```

You will see the contents.
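The `ls`/`cat` check above can also be done from Python in the same notebook. The sketch below lists a directory and returns the first few lines of each regular file in it. In Databricks you would presumably pass `SparkFiles.getRootDirectory()` (a PySpark method that returns the directory holding files added with `addFile()`) as `root`; `peek_directory` and `n_lines` are hypothetical names chosen for this example.

```python
import os
from itertools import islice


def peek_directory(root: str, n_lines: int = 3) -> dict:
    """Return {file name: first n_lines lines} for every regular file in root.

    A Python stand-in for `%sh ls <dir>` followed by `cat <file>`.
    """
    preview = {}
    for name in sorted(os.listdir(root)):
        full_path = os.path.join(root, name)
        if not os.path.isfile(full_path):
            continue  # skip sub-directories
        with open(full_path, encoding="utf-8", errors="replace") as fh:
            preview[name] = [line.rstrip("\n") for line in islice(fh, n_lines)]
    return preview


# In a notebook, after addFile(url) (assumption: run on the driver):
#   from pyspark import SparkFiles
#   for name, lines in peek_directory(SparkFiles.getRootDirectory()).items():
#       print(name, lines)
```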

Next you have to read it with spark.read.csv AND the file:// prefix.

So this makes:

url = "https://opendata.reseaux-energies.fr/explore/dataset/eco2mix-national-tr/download/?format=csv"

from pyspark import SparkFiles

sc.addFile(url)

path = SparkFiles.get('download')

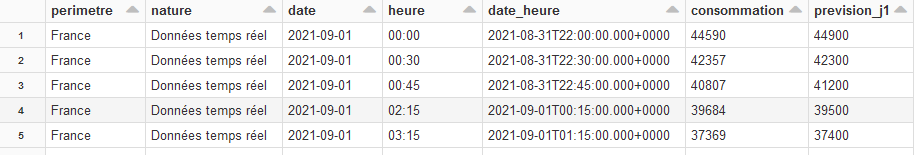

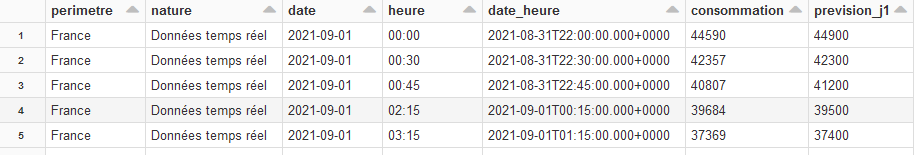

df = spark.read.csv("file://" + path, header=True, inferSchema= True, sep = ";")This gives:

When working with local files, it is always a good idea to actually look at the directory in question and cat the file itself.
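Putting the accepted answer's steps together, a reusable version might look like the sketch below. It assumes a Spark session is passed in as `spark` and that you already know the local file name (for the eco2mix URL, 'download'). `read_csv_from_url` and `to_file_uri` are hypothetical helper names, not Spark APIs. Like the accepted answer, it relies on the SparkContext (`spark.sparkContext`), which the last reply in this thread reports will stop working on Databricks Runtime 14. The pyspark import sits inside the function so the path helper can be used without a Spark installation.

```python
import os


def to_file_uri(local_path: str) -> str:
    """Prefix an absolute local path with file:// so Spark does not resolve it on DBFS."""
    return "file://" + os.path.abspath(local_path)


def read_csv_from_url(spark, url: str, local_name: str, **csv_options):
    """Download url with addFile() and read the local copy as a DataFrame.

    local_name is the name addFile() gives the download; for the eco2mix
    URL in this thread it is 'download'.
    """
    from pyspark import SparkFiles  # imported here so to_file_uri works without pyspark

    spark.sparkContext.addFile(url)
    local_path = SparkFiles.get(local_name)
    return spark.read.csv(to_file_uri(local_path), **csv_options)


# Usage in a notebook, mirroring the accepted answer:
# df = read_csv_from_url(
#     spark,
#     "https://opendata.reseaux-energies.fr/explore/dataset/eco2mix-national-tr/download/?format=csv",
#     "download",
#     header=True, inferSchema=True, sep=";",
# )
```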

8 replies

10-29-2021 04:27 AM

Check this:

https://stackoverflow.com/questions/57014043/reading-data-from-url-using-spark-databricks-platform

Basically, add "file://" to your path.


10-29-2021 04:45 AM

I've already read this post and tried it, but that did not work either:

```
Path does not exist: file:/local_disk0/spark-48fd5772-d1a9-40f2-a2f2-bcad38962ed6/userFiles-0298f7e7-105c-4c8d-a845-0975edd378a0/eco2mix-national-tr.csv
```


10-30-2021 01:50 AM

Thank you, @Werner Stinckens.


10-29-2021 09:22 AM

Great, this is working. Thank you.


10-29-2021 03:01 PM

@Bertrand BURCKER, if @Werner Stinckens answered your question, would you mark his reply as the best answer? That will help others find the solution quickly.


11-26-2021 06:16 AM

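Hi,

You can also use the following Scala approach:

```scala
import org.apache.commons.io.IOUtils // commons-io is already on the Spark cluster, no need to install it
import java.net.URL
import spark.implicits._ // provides .toDS(); notebooks normally import this automatically

val urlfile = new URL("https://people.sc.fsu.edu/~jburkardt/data/csv/airtravel.csv")

// Download the whole file as a string on the driver; each line becomes one row of a Dataset[String].
// linesIterator rather than lines: on JDK 11+, .lines resolves to Java's String.lines(), which returns a Stream
val testDummyCSV = IOUtils.toString(urlfile, "UTF-8").linesIterator.toList.toDS()

// spark.read.csv also accepts a Dataset[String] of CSV lines
val testcsv = spark.read
  .option("header", true)
  .option("inferSchema", true)
  .csv(testDummyCSV)

display(testcsv)
```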
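A rough Python counterpart of the Scala approach above, written so that it never calls `sc`: download the text on the driver with the standard library, parse it with the `csv` module, and hand the rows to `spark.createDataFrame`. This swaps in a different technique from `addFile()`; it only suits files small enough to fit in driver memory, and unlike `inferSchema=True` every column comes back as a string. `parse_csv_text` and `csv_url_to_dataframe` are hypothetical names, and the URL is the one from the Scala example.

```python
import csv
import io
from urllib.request import urlopen


def parse_csv_text(text: str, sep: str = ","):
    """Split CSV text into (header, rows); every value stays a string."""
    # skipinitialspace ignores spaces that follow a delimiter, e.g. '"JAN",  340'
    reader = csv.reader(io.StringIO(text), delimiter=sep, skipinitialspace=True)
    header, *rows = [row for row in reader if row]  # drop blank lines
    return header, rows


def csv_url_to_dataframe(spark, url: str, sep: str = ",", encoding: str = "utf-8"):
    """Download a small CSV on the driver and build a DataFrame without calling sc."""
    with urlopen(url) as response:
        text = response.read().decode(encoding)
    header, rows = parse_csv_text(text, sep)
    return spark.createDataFrame(rows, schema=header)


# Usage in a notebook:
# df = csv_url_to_dataframe(spark, "https://people.sc.fsu.edu/~jburkardt/data/csv/airtravel.csv")
# display(df)
```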


10-12-2023 06:49 PM

I know this is a two-year-old thread, but I needed a solution to this very problem today. I had a notebook using the SparkContext:

```python
from pyspark import SparkFiles
from pyspark.sql.functions import *

sc.addFile(url)
```

But according to the Databricks Runtime 14.0 release notes (https://learn.microsoft.com/en-gb/azure/databricks/release-notes/runtime/14.0#breaking-changes), `sc` will stop working. The `IOUtils` approach is not mentioned there.

The current official way is:

I hope it helps.


Related Content

- exposing RAW files using read_files based views, partition discovery and skipping, performance issue in Warehousing & Analytics

- SparkContext lost when running %sh script.py in Data Engineering

- SQL function refactoring into Databricks environment in Data Engineering

- [delta live tabel] exception: getPrimaryKeys not implemented for debezium in Data Engineering

- I have to run the notebook in concurrently using process pool executor in python in Data Engineering