Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Data Engineering

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Data Engineering

- Error when using DLT with SCD type 1

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Error when using DLT with SCD type 1

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

10-13-2022 06:29 AM

I'm trying to load data using DLT and SCD 1 and am running into the error message "Detected a data update in the source table at version x. This is currently not supported. If you'd like to ignore updates, set the option 'ignoreChanges' to 'true'.

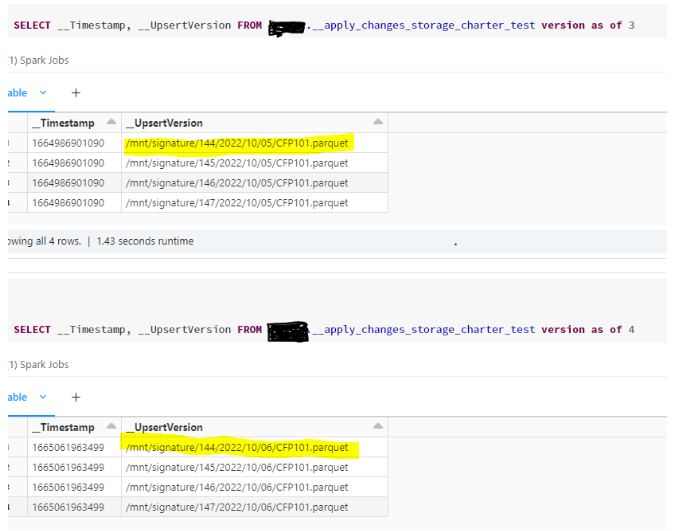

I have one DLT named charter and another named branch. The branch table has a relationship to charter, so I join to it in the pipeline to lookup the charter_key. The initial load of the data runs just fine, but when I run the incremental portion the next day, that is when I get the error message. This data is pretty static, so no data actually changed between the initial load and the incremental load. However, in the charter DLT table that is created, __apply_changes_storage_charter the __UpsertVersion has the most recent file name and an updated __Timestamp. Is that where it is saying that it has detected a change?

CREATE OR REFRESH STREAMING LIVE TABLE charter

(charter_key bigint GENERATED ALWAYS AS IDENTITY (START WITH 1 INCREMENT BY 1)

,charter_number int

,charter_name string

,charter_address1 string

,charter_address2 string

,charter_zip_code string)

APPLY CHANGES INTO LIVE.charter

FROM STREAM(LIVE.charter_bronze)

KEYS (charter_number)

SEQUENCE BY file_name

COLUMNS * EXCEPT (file_name)

CREATE OR REFRESH TEMPORARY STREAMING LIVE TABLE branch_stage AS

SELECT c.charter_key

,b.branch_number

,b.branch_name

,b.branch_address1

,b.branch_address2

,b.branch_zip_code

,b.file_name

FROM STREAM(LIVE.charter) c

INNER JOIN STREAM(LIVE.branch_bronze) b

ON c.charter_number = b.charter_number

CREATE OR REFRESH STREAMING LIVE TABLE branch

(branch_key bigint GENERATED ALWAYS AS IDENTITY (START WITH 1 INCREMENT BY 1)

,charter_key bigint

,branch_number int

,branch_name string

,branch_address1 string

,branch_address2 string

,branch_zip_code string)

APPLY CHANGES INTO LIVE.branch

FROM STREAM(LIVE.branch_stage)

KEYS (charter_key, branch_number)

SEQUENCE BY file_name

COLUMNS * EXCEPT (file_name)I've read that it treats the files as append only, but then how exactly does SCD work? In the pipeline I have both of these set to true:

pipelines.applyChangesPreviewEnabled

spark.databricks.delta.schema.autoMerge.enabled

Any help is appreciated.

Labels:

- Labels:

-

Delta Live Tables

-

DLT

5 REPLIES 5

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

10-18-2022 05:13 AM

The problem is that you join with the table made by APPLY CHANGES and that table can theoretically have updated records.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

10-18-2022 07:36 AM

@Hubert Dudek That was my thinking as well as I started to dig into it more. Do you know what the recommended solution would be when trying to use APPLY CHANGES tables in downstream tables?

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

10-18-2022 10:52 AM

when table is published in metastore (target option in the pipeline) for Python I just used spark.read.table() with join of dlt table, so I bet in SQL it would be something like:

FROM STREAM(LIVE.branch_bronze) b

INNER JOIN TARGET_DB.charter cOptions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

10-27-2022 06:31 AM

Thanks @Hubert Dudek. I was at this same spot before, but I can't put that reference in the same notebook or even the same pipeline. If I put it in the same notebook then on the initial load it fails since the target_db.charter table doesn't exist. If I put them in separate notebooks but the same pipeline then it doesn't discover the dependency. So I would have to have multiple pipelines, which I was hoping to avoid.

Anonymous

Not applicable

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-20-2022 11:46 PM

Hi @Andy Bolk

Hope all is well!

Just wanted to check in if you were able to resolve your issue and would you be happy to share the solution or mark an answer as best? Else please let us know if you need more help.

We'd love to hear from you.

Thanks!

Announcements

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- while registering model I am getting error: AssertionError: in Machine Learning

- An error occurred when loading Jobs and Workflows App. in Data Engineering

- Workflow UI broken after creating job via the api in Data Engineering

- Error during deserializing protobuf data in Data Engineering

- Hard reset programatically in Administration & Architecture