- Getting Spark & Scala version in Cluster node init...

12-16-2021 02:11 AM

Hi there,

I am developing a Cluster node initialization script (https://docs.gcp.databricks.com/clusters/init-scripts.html#environment-variables) in order to install some custom libraries.

According to the Databricks docs, an init script can read some environment variables that describe the currently running cluster node.

But I need to figure out which Spark and Scala versions are currently deployed. Is this possible?

Thanks in advance

Regards

1 ACCEPTED SOLUTION

12-16-2021 06:16 PM

Hm, this is a hacky idea, and maybe there is a better way, but you could run `ls /databricks/jars/spark*` and parse the results to get the Spark and Scala versions. You'll see files like `spark--command--command-spark_3.1_2.12_deploy.jar` containing the versions.
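The jar-name idea above could be scripted roughly as follows. This is only a sketch: `parse_spark_scala` and `detect_versions` are hypothetical helper names, and it assumes the jar names follow the `spark_<spark>_<scala>_` pattern of the example file name. It can only recover major.minor versions, and it prints nothing if no matching jar exists at the time it runs.

```shell
#!/bin/sh
# Sketch: extract Spark and Scala versions (major.minor only) from a jar
# file name such as spark--command--command-spark_3.1_2.12_deploy.jar.
# Both function names are hypothetical helpers, not Databricks tools.

parse_spark_scala() {
  # Prints "<spark> <scala>", or prints nothing and returns non-zero
  # when the file name does not contain spark_<x.y>_<x.y>_.
  versions=$(basename "$1" |
    sed -n 's/.*spark_\([0-9][0-9]*\.[0-9][0-9]*\)_\([0-9][0-9]*\.[0-9][0-9]*\)_.*/\1 \2/p')
  [ -n "$versions" ] && echo "$versions"
}

detect_versions() {
  # Scan a jar directory (default: /databricks/jars) and stop at the
  # first file whose name carries both versions.
  for jar in "${1:-/databricks/jars}"/spark*; do
    [ -e "$jar" ] || continue   # directory empty or glob did not match
    parse_spark_scala "$jar" && return 0
  done
  return 1
}
```

In an init script this might be used as `if v=$(detect_versions); then set -- $v; echo "Spark $1, Scala $2"; fi`, with a fallback branch for the case where the jars are not there yet.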

18 REPLIES

12-16-2021 07:00 AM

Hi @A Huarte ! My name is Kaniz, and I'm the technical moderator here. Great to meet you, and thanks for your question! Let's see if your peers in the community have an answer to your question first. Or else I will get back to you soon. Thanks.

12-16-2021 08:32 AM

Hi Kaniz, thank you very much. I am sure I will learn a lot in this forum.

12-16-2021 10:12 AM

12-16-2021 10:22 AM

12-16-2021 10:35 AM

Hi @Prabakar Ammeappin, thank you very much for your response,

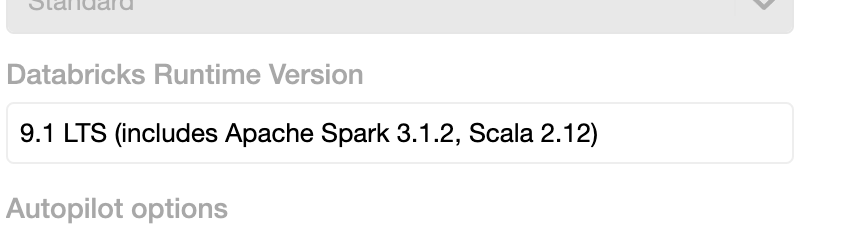

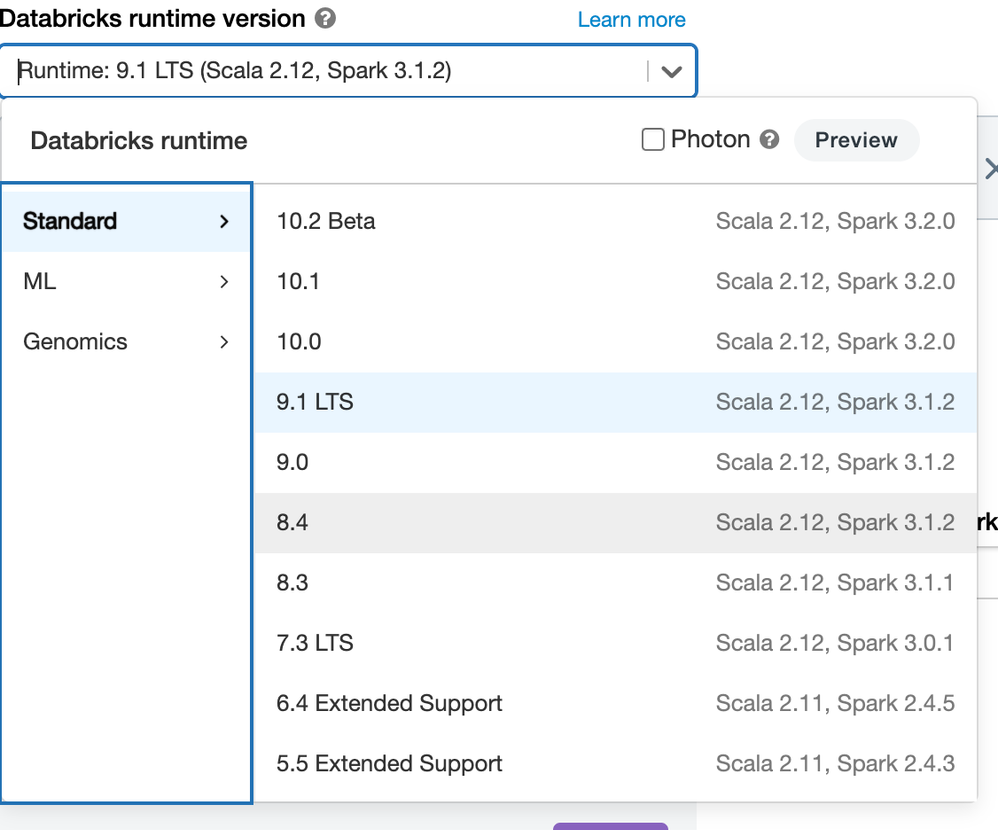

but I meant how I can get this information from within a script. I am trying to develop this shell init script for several clusters with different Databricks runtimes.

I tried searching for files from that script, but I did not find any "*spark*.jar" file from which to extract the current runtime version (Spark and Scala version).

Once the cluster has started, files matching this pattern exist, but at the moment the init script runs it seems that pyspark is not installed yet.

12-16-2021 10:37 AM

I know that the Databricks CLI tool is available, but it is not configured when the init script runs.

12-17-2021 02:23 AM

Hi @Sean Owen, thanks for your reply,

Your idea can work, but unfortunately there isn't any file name with the full version number. I am missing the last part of the version:

yyyyyy_spark_3.2_2.12_xxxxx.jar -> Spark version is really 3.2.0

I have configured the Databricks CLI to get the cluster metadata, and I get this output:

    {
      "cluster_id": "XXXXXXXXX",
      "spark_context_id": YYYYYYYYYYYY,
      "cluster_name": "Devel - Geospatial",
      "spark_version": "10.1.x-cpu-ml-scala2.12", ##<------!!!!
      ....
    }

The "spark_version" property does not contain the Spark version but the DBR (Databricks Runtime) version :-( Any thoughts?

Thanks in advance

regards

Alvaro
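As a sketch of what can still be read from this value: the string appears to carry the DBR version before `.x` and the Scala version after `scala`, so both can be split out with plain shell parameter expansion. The Spark version itself would then have to come from a lookup table maintained by hand. The only entry below is the 10.1 to 3.2.0 pairing reported in this thread, and all three function names are hypothetical.

```shell
#!/bin/sh
# Sketch: split a runtime string such as "10.1.x-cpu-ml-scala2.12".
# Assumes the layout "<dbr>.x-...-scala<scala>"; helper names are made up.

dbr_of() {
  # Drop everything from the first ".x" onwards: 10.1.x-... -> 10.1
  echo "${1%%.x*}"
}

scala_of() {
  # Drop everything up to and including "scala": ...-scala2.12 -> 2.12
  echo "${1##*scala}"
}

spark_of_dbr() {
  # Hand-maintained DBR -> Spark table. Only the pairing mentioned in
  # this thread is listed; extend it from the runtime release notes.
  case "$1" in
    10.1) echo "3.2.0" ;;
    *)    echo "unknown DBR version: $1" >&2; return 1 ;;
  esac
}
```

For example, `spark_of_dbr "$(dbr_of "10.1.x-cpu-ml-scala2.12")"` prints `3.2.0`, while an unlisted runtime makes the function fail so the init script can stop early instead of installing a mismatched library.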

12-17-2021 05:16 AM

Why do you need such specific Spark version info? It should not matter for user applications.

12-17-2021 05:31 AM

I am trying to install Geomesa, either from https://mvnrepository.com/artifact/org.locationtech.geomesa/geomesa-gt-spark-runtime or from https://github.com/locationtech/geomesa/releases

I think I need the exact release.

12-17-2021 06:32 AM

I doubt it's sensitive to a minor release. Why would it be?

But you also control what DBR/Spark version you launch the cluster with.

12-17-2021 08:53 AM

Many thanks @Sean Owen, I am going to follow your advice. I am not going to write a generic init script that figures out everything, but a specific version of it for each cluster type; we really only have 3 DBR types.

Thank you very much for your support

Regards

12-27-2021 09:17 AM

@A Huarte - How did it go?

12-28-2021 03:44 AM

Hi,

My idea was to deploy Geomesa or Rasterframes on Databricks in order to provide spatial capabilities to this platform. In the end, following some advice in the Rasterframes Gitter chat, I selected DBR 9.0, where I am installing pyrasterframes 0.10.0 via "pip" without getting any errors.

I hope this info helps.

Regards
