- 1073 Views

- 3 replies

- 3 kudos

We are building a machine learning application with the Feature Store enabled. Once the model is built, we are trying to move the model artifacts and deploy them in Azure ML as an online endpoint. Is it possible to access the online store in azure ml endpo...

Latest Reply

If you want Databricks to use the feature store, which is in Cosmos DB, then yes, it is possible. See https://learn.microsoft.com/en-us/azure/databricks/machine-learning/feature-store/online-feature-stores

Suppose you want to consume a feature store in Databrick...

- 1117 Views

- 4 replies

- 2 kudos

From a notebook I created a new feature store via:

%sql
CREATE DATABASE IF NOT EXISTS feature_store_ebp;

Within that feature store I fill my table with:

feature_store_name = "feature_store_ebp.table_1"
try:
    fs.write_table(
        name=feature_stor...

Latest Reply

Which Databricks Runtime version do you use to run this code?

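
The question is cut off, so the exact failure is unknown. As a hedged sketch only: a common pattern is to create the feature table once with `create_table` and then upsert later batches with `write_table(..., mode="merge")`. The helper below takes the `FeatureStoreClient` as a parameter (`fs`) so it can be exercised without a cluster; the function name and its return values are illustrative assumptions, not something from the thread.

```python
def create_or_merge_feature_table(fs, table_name, df, primary_keys, description=""):
    """Create `table_name` from `df` on first use; otherwise merge `df` into it.

    Hypothetical helper. `fs` is assumed to be a
    databricks.feature_store.FeatureStoreClient (shipped with Databricks Runtime ML).
    """
    try:
        # get_table raises if the feature table does not exist yet (or cannot be read).
        fs.get_table(table_name)
        table_exists = True
    except Exception:
        table_exists = False

    if not table_exists:
        # create_table uses df's schema as the table schema and writes df's rows.
        fs.create_table(
            name=table_name,
            primary_keys=primary_keys,
            df=df,
            description=description,
        )
        return "created"

    # Upsert: rows whose primary keys already exist are updated, new keys are inserted.
    fs.write_table(name=table_name, df=df, mode="merge")
    return "merged"
```

On a cluster, a call might look like `create_or_merge_feature_table(fs, "feature_store_ebp.table_1", features_df, ["customer_id"])`, where `features_df` and `customer_id` are placeholders for your own DataFrame and key column.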

- 8627 Views

- 8 replies

- 8 kudos

I was following the Databricks Academy "New Capabilities Overview: Feature Store" module. However when I try to run the code in the example notebook I get a security exception as explained below. When I try to run the example notebook "01-Populate a ...

Latest Reply

Hi @Daniel Barrundia - please select "No isolation shared" Access mode, it should resolve this problem.

- 682 Views

- 2 replies

- 3 kudos

To save compute resources and time, can I use a streaming source in batch mode (similar to Auto Loader) to update my feature store as my source table receives row updates or is appended with new rows?

Latest Reply

Yes, you can schedule a job that processes the data with Auto Loader.

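
A minimal sketch of the scheduled-job idea, under stated assumptions: the source is JSON files picked up by Auto Loader (`cloudFiles`), `spark` is the notebook's SparkSession, `fs` is a `FeatureStoreClient`, and `transform` is a hypothetical placeholder for your feature logic. `write_table` accepts a streaming DataFrame and unpacks its `trigger` dict into `DataStreamWriter.trigger`, so `{"availableNow": True}` processes the backlog and stops, which behaves like an incremental batch run. `availableNow` needs Spark 3.3 or later; older runtimes would use `{"once": True}` instead.

```python
def refresh_features_incrementally(spark, fs, source_path, schema_location,
                                   feature_table, checkpoint_location,
                                   transform=lambda df: df):
    """Merge only newly arrived files into `feature_table`, then stop.

    Hypothetical helper; paths, file format and `transform` are assumptions.
    """
    stream_df = (
        spark.readStream.format("cloudFiles")             # Auto Loader source
        .option("cloudFiles.format", "json")              # assumed input format
        .option("cloudFiles.schemaLocation", schema_location)
        .load(source_path)
    )
    features_df = transform(stream_df)
    # With a streaming df, write_table starts a streaming merge and returns a
    # StreamingQuery; the checkpoint remembers which files were already processed.
    return fs.write_table(
        name=feature_table,
        df=features_df,
        mode="merge",
        checkpoint_location=checkpoint_location,
        trigger={"availableNow": True},  # process what is available, then stop
    )
```

Scheduled as a job, each run would call the function and then `query.awaitTermination()`. This covers appended files only; propagating in-place row updates from a source Delta table would need a different source (for example the Delta change data feed), which this sketch does not attempt.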

- 5099 Views

- 13 replies

- 13 kudos

The documentation explains how to delete feature tables through the UI. Is it possible to do the same using the Python FeatureStoreClient? I cannot find anything in the docs: https://docs.databricks.com/_static/documents/feature-store-python-api-refe...

Latest Reply

from databricks import feature_store

fs = feature_store.FeatureStoreClient()

fs.drop_table(FEATURE_TABLE_NAME)

This is available as of Databricks Runtime 10.5 for ML. Docs

- 878 Views

- 1 replies

- 4 kudos

Where can I find best practices for MLOps on Databricks? We recommend checking out the Big Book of MLOps for detailed guidance on MLOps best practices on Databricks, including reference architectures. For a deep dive on the Databricks Feature store, we r...

Latest Reply

sher

Valued Contributor II

You can check here: https://docs.databricks.com/machine-learning/mlops/mlops-workflow.html

- 2475 Views

- 7 replies

- 15 kudos

To use the Databricks Feature Store, can only Python be used? If not, which other languages and frameworks are supported? Below is my question in two parts. Part 1) What languages can be utilized to write data frames as feature tables in the Feature...

Latest Reply

You can use any of these languages: Python, SQL, Scala, and R.

- 1147 Views

- 1 replies

- 2 kudos

Latest Reply

After much digging, I observed that I was using the standard runtime. Once I switched to the Databricks ML runtime, the issue was resolved. To use the Feature Store capability, ensure that you select a Databricks Runtime ML version from the Databricks Runtime Version dr...
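
Since the fix was switching to a Databricks Runtime ML cluster, a notebook can fail fast with a clear message instead of a confusing import error. A minimal sketch; the function name is hypothetical. It only checks whether `databricks.feature_store` imports, which covers both a missing `databricks` package and the `cannot import name 'feature_store' from 'databricks'` error reported in another thread on this page (both are `ImportError`s).

```python
def feature_store_client_available() -> bool:
    """Return True if `databricks.feature_store` can be imported here.

    On Databricks this is expected to succeed on a Runtime ML cluster.
    """
    try:
        from databricks import feature_store  # noqa: F401
    except ImportError:  # also catches ModuleNotFoundError
        return False
    return True


if __name__ == "__main__":
    if not feature_store_client_available():
        print("databricks.feature_store not found: "
              "select a Databricks Runtime ML version for this cluster.")
```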

- 1352 Views

- 2 replies

- 4 kudos

Hello, I am working with lookupEngine functions. However, some of our feature tables have a more detailed granularity than the input DataFrame. For example: table A has unique keys on two features, numero_p and numero_s. So while performing F...

Latest Reply

Hi @SERET Nathalie, I can check internally on the ask here. In the meantime, please let us know if this helps:

https://docs.databricks.com/machine-learning/feature-store/feature-tables.html
https://docs.databricks.com/machine-learning/feature-store/i...

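
The question is truncated, so this is only one hedged reading of it: when a feature table is keyed on two columns such as `numero_p` and `numero_s`, a `FeatureLookup` has to name both columns in `lookup_key`, and both must exist in the DataFrame passed to `create_training_set`. A sketch under those assumptions; the helper name is hypothetical, and `fs` is assumed to be a `FeatureStoreClient`.

```python
def build_training_set(fs, labels_df, feature_table, lookup_keys, label):
    """Join features from `feature_table` onto `labels_df` with a composite key.

    Hypothetical helper. `labels_df` must contain every column in `lookup_keys`
    (for example ["numero_p", "numero_s"]), and `label` is the target column.
    """
    # Imported lazily because the package ships with Databricks Runtime ML.
    from databricks.feature_store import FeatureLookup

    lookups = [
        FeatureLookup(
            table_name=feature_table,
            # One column per primary-key column of the feature table,
            # listed in the same order as the table's primary keys.
            lookup_key=list(lookup_keys),
        )
    ]
    return fs.create_training_set(
        df=labels_df,
        feature_lookups=lookups,
        label=label,
    )
```

If the input DataFrame only carries `numero_p`, the lookup above cannot match on `numero_s`; bringing both tables to the same key before the lookup is a modelling decision the thread does not settle.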

by Cirsa • New Contributor II

- 2074 Views

- 3 replies

- 2 kudos

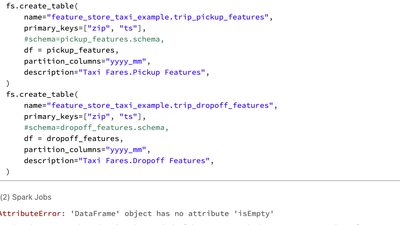

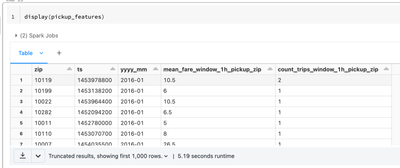

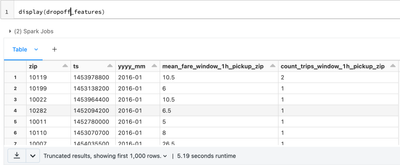

Hi, when trying to create the first table in the Feature Store I get the message 'DataFrame' object has no attribute 'isEmpty'... but it is not. So I cannot use the function feature_store.create_table(). With this code you should be able to reproduce t...

Latest Reply

@Hubert Dudek Sorry about the 'df_train', I forgot to change it (the error I reported is real with the proper DataFrame). Changing the DBR to 11.3 LTS solved the problem. Thanks!

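
`DataFrame.isEmpty` was added to PySpark in version 3.3.0, and Databricks Runtime 11.x is built on Spark 3.3, which is consistent with the upgrade to 11.3 LTS fixing the error. As a hedged sketch, a notebook could check the PySpark version before calling `create_table`; the helper name is hypothetical.

```python
def supports_dataframe_is_empty(pyspark_version: str) -> bool:
    """Return True if this PySpark version provides DataFrame.isEmpty (added in 3.3.0)."""
    # Compare only major.minor, e.g. "3.2.1" -> (3, 2), "3.3.0.dev0" -> (3, 3).
    major, minor = (int(part) for part in pyspark_version.split(".")[:2])
    return (major, minor) >= (3, 3)
```

On a cluster it would be called as `supports_dataframe_is_empty(pyspark.__version__)` after `import pyspark`.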

- 4145 Views

- 5 replies

- 4 kudos

Hi All, we're currently considering turning on Unity Catalog, but before we flick the switch I'm hoping to get a bit more confidence about what will happen with our existing DBFS mounts and feature store. The bit that makes me nervous is the crede...

Latest Reply

@Ashley Betts, can you please check the article below? As far as I know, we can use external mount points by configuring storage credentials in Unity Catalog. The default method is managed tables, but we can also point to external tables. 1. You can upgrade exi...

- 1026 Views

- 3 replies

- 0 kudos

from databricks import feature_store

I am trying to import feature_store but it is showing this error:

ImportError: cannot import name 'feature_store' from 'databricks' (/databricks/python/lib/python3.8/site-packages/databricks/__init__.py)

Latest Reply

Is this issue resolved completely? We are facing the same problem. This might help.

- 828 Views

- 1 replies

- 3 kudos

I am working with the feature store to save the engineered features. However, in our case we have many feature tables and many separate target variables on which we want to train separate models. Now for each of these models, we can leverage...

Latest Reply

Thanks for taking the time to let us know how to make Databricks even better! @Mayank Srivastava I love that you included a real-life example as well. I think I know the right PM at Databricks that will be interested in this input. Thanks again for...

- 1585 Views

- 2 replies

- 3 kudos

The following code...

from pyspark.sql.functions import monotonically_increasing_id, lit, expr, rand
import uuid
from databricks import feature_store
from pyspark.sql.types import StringType, DoubleType
from databricks.feature_store import feature_table, ...

Latest Reply

Hope that was an easy fix - @Tobias Cortese ! Thanks for marking the "best answer"!

by vives • New Contributor II

- 1795 Views

- 3 replies

- 0 kudos

It seems that the current log_model method of the FeatureStoreClient class lacks a way to pass in the model signature (as opposed to doing it through MLflow directly). Is there a workaround to append this information? Thanks!

Latest Reply

Hello! You can log a model with a signature by passing a signature object as an argument to your log_model call. Please see here. Here's an example of this in action in a Databricks notebook. Hope that helps! -Amir

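
A minimal sketch of the reply's suggestion, assuming `fs` is a `FeatureStoreClient`, `flavor` is an MLflow flavor module such as `mlflow.sklearn`, and the signature was built beforehand (for example with `mlflow.models.infer_signature(X_train, predictions)`). `FeatureStoreClient.log_model` forwards extra keyword arguments to `flavor.log_model`, which is how `signature` reaches MLflow. The wrapper name is hypothetical.

```python
def log_model_with_signature(fs, model, training_set, signature, flavor,
                             artifact_path="model", registered_model_name=None):
    """Log `model` through the Feature Store client and attach an MLflow signature.

    Hypothetical wrapper; `signature` is assumed to be an mlflow.models.ModelSignature.
    """
    return fs.log_model(
        model,
        artifact_path,
        flavor=flavor,
        training_set=training_set,
        registered_model_name=registered_model_name,
        # Not a named parameter of FeatureStoreClient.log_model: it is passed
        # through **kwargs to flavor.log_model.
        signature=signature,
    )
```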