The Databricks pools concept may help reduce cluster start-up time. More details are here.

If you're looking for Python packages, you can use the standard `conda list` command (see example below):

`%conda list`
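If you'd rather inspect packages from Python code instead of the `%conda` magic, the standard library's `importlib.metadata` (Python 3.8+) can list installed distributions. This is a minimal sketch; `installed_packages` is a hypothetical helper name, and it only sees packages visible to the current Python interpreter.

```python
from importlib.metadata import distributions


def installed_packages():
    """Return a sorted list of (name, version) pairs visible to this interpreter."""
    pkgs = {}
    for dist in distributions():
        name = dist.metadata.get("Name")
        version = dist.version
        # Skip broken or incomplete metadata entries.
        if name and version:
            # Lower-casing also de-duplicates the same package found on two paths.
            pkgs[name.lower()] = version
    return sorted(pkgs.items())


if __name__ == "__main__":
    for name, version in installed_packages():
        print(f"{name}=={version}")
```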

There shouldn't be. Generally speaking, models will be serialized according to their "native" format for well-known libraries like TensorFlow, XGBoost, scikit-learn, etc. Custom models will be saved with pickle. The files exist on distributed storage as ar...
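As a rough illustration of the "custom models are saved with pickle" case, here is a minimal Python sketch using the standard-library `pickle` module. `MeanModel` is a hypothetical toy model; this is not MLflow's actual saving code path, only the underlying serialization idea.

```python
import pickle


class MeanModel:
    """Hypothetical custom model: always predicts the mean of its training targets."""

    def fit(self, y):
        self.mean_ = sum(y) / len(y)
        return self

    def predict(self, xs):
        return [self.mean_ for _ in xs]


model = MeanModel().fit([1.0, 2.0, 3.0])
blob = pickle.dumps(model)       # bytes that could be written to storage
restored = pickle.loads(blob)    # requires MeanModel to be importable at load time
print(restored.predict([10, 20]))  # [2.0, 2.0]
```

Note that `pickle.loads` needs the class definition (here `MeanModel`) to be importable in whatever process loads the model, which is one reason native formats are preferred for well-known libraries.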

Why not just directly deploy the model where you need it in production?

The Model Registry is mostly a workflow tool. It helps "gate" the process so that, for example, only authorized users can set a model to be the newest Production version; that's not something just anyone should be able to do! The Registry...

Wondering whether it always makes sense, or whether there are some situations where you might only want to run OPTIMIZE?

It's a good idea to run OPTIMIZE at the end of each batch job to avoid a small-files problem. Z-ordering is optional and can be applied to a few non-partition columns that are used frequently in read operations. ZORDER BY -> Colocate column information in the sam...
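Compacting small files can be thought of as a bin-packing problem. As a rough illustration of why running OPTIMIZE after batch jobs reduces the file count, here is a simplified Python sketch that greedily groups small file sizes into output files no larger than a target. The function name, the first-fit-decreasing strategy, and the 1024 MB target are assumptions for illustration; this is not Delta Lake's actual OPTIMIZE algorithm.

```python
def plan_compaction(file_sizes_mb, target_mb=1024):
    """Group file sizes into bins whose total stays at or under target_mb.

    First-fit decreasing: place each file (largest first) into the first bin
    with room; a file larger than target_mb simply ends up in its own bin.
    """
    bins = []  # each bin is [total_size, [sizes...]]
    for size in sorted(file_sizes_mb, reverse=True):
        for b in bins:
            if b[0] + size <= target_mb:
                b[0] += size
                b[1].append(size)
                break
        else:
            bins.append([size, [size]])
    return [sizes for _, sizes in bins]


# e.g. 200 files of 10 MB left behind by many small batch writes
small_files = [10] * 200
plan = plan_compaction(small_files, target_mb=1024)
print(len(small_files), "->", len(plan))  # 200 -> 2
```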

In this scenario, the best option would be to have a single readStream reading a source Delta table. Since checkpoint logs are controlled when writing to Delta tables, you would be able to maintain separate logs for each of your writeStreams. I would...

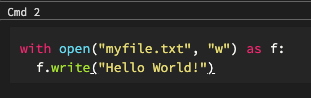

I ran the code block below and now I can't find the file. Where would this get saved since no location was specified?

In this case, you are just writing a file to the working directory of the driver process. It'll be under something like /home/[user] on the local file system. Anything you write to "/dbfs/...", however, goes to distributed storage, even though it looks...
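To see why no location still means a concrete location, here is a minimal Python sketch: a relative path like `my_file.txt` is resolved against the process's current working directory. `write_relative` and the file name are hypothetical, and the temporary directory merely stands in for the driver's working directory; on Databricks, a path starting with "/dbfs/" would instead land on distributed storage.

```python
import os
import tempfile


def write_relative(filename, text):
    """Write text to a relative path and return the absolute path it ended up at."""
    with open(filename, "w") as f:
        f.write(text)
    # A relative path is resolved against the process's current working directory.
    return os.path.abspath(filename)


if __name__ == "__main__":
    original_cwd = os.getcwd()
    # The temporary directory stands in for the driver's working directory.
    with tempfile.TemporaryDirectory() as workdir:
        os.chdir(workdir)
        try:
            print(write_relative("my_file.txt", "hello"))
        finally:
            os.chdir(original_cwd)
```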

What are the major changes released in Spark 3.0?

Check out https://spark.apache.org/docs/latest/sql-migration-guide.html if you're looking for potentially breaking changes you need to be aware of, for any version. For a general overview of the new features, see https://databricks.com/blog/2020/06/18...

There are, in principle, four distinct ways of using parallelisation when doing machine learning. Any combination of these can speed up the whole pipeline significantly.

1) Using Spark distributed processing in feature engineering
2) When the data set...

Good summary! Yes, those are the main strategies I can think of.

You do not have to cache anything to make it work. You would decide that based on whether you want to spend memory/storage to avoid recomputing the DataFrame, for example when you use it in multiple operations afterwards.

I have heard people talk about Spark ML, but the documentation talks about MLlib. I don't understand the difference; could anyone help me understand this?

They're not really different. Before DataFrames existed in Spark, the older implementations of ML algorithms were built on the RDD API. This is generally called "Spark MLlib". After DataFrames, some newer implementations were added as wrappers on top of the old ones ...

You could set up dnsmasq to configure routing between your Databricks workspace and your on-premises network. More details here.

Use the Databricks CLI (https://docs.databricks.com/dev-tools/cli/index.html):

`databricks fs cp dbfs:/remote/path /local/path`

Databricks allows network customizations and hardening, from a security point of view, to reduce risks like data exfiltration. For more details, see:

- Data Exfiltration Protection With Databricks on AWS
- Data Exfiltration Protection with Azure Databricks

What are the best practices around Z-ordering? Should we include as many columns as possible in the Z-order, or is fewer better, and why?

With Z-order and Hilbert curves, the effectiveness of clustering decreases with each column added, so you'd want to Z-order only the columns that you actually use, so that it speeds up your workloads.
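To make that intuition concrete, here is a minimal Python sketch of a plain Z-order (Morton) key, which interleaves the bits of each column's value. It is an illustration only, assuming small non-negative integer column values; Delta Lake's ZORDER BY does its own mapping of column values, and this is not its implementation. `interleave_bits` and `column_bit_positions` are hypothetical helper names.

```python
def interleave_bits(values, bits_per_value):
    """Return a Z-order (Morton) key by interleaving the bits of each value.

    values: non-negative ints, one per Z-ordered column.
    bits_per_value: number of low-order bits taken from each value.
    """
    n_cols = len(values)
    key = 0
    for bit in range(bits_per_value):
        for col, value in enumerate(values):
            # Bit `bit` of column `col` lands at position bit * n_cols + col.
            key |= ((value >> bit) & 1) << (bit * n_cols + col)
    return key


def column_bit_positions(col, n_cols, bits_per_value):
    """Positions in the key occupied by one column's bits (lowest first)."""
    return [bit * n_cols + col for bit in range(bits_per_value)]


# Sorting a 4x4 grid of (x, y) points by Morton key visits each 2x2 block in turn.
points = [(x, y) for x in range(4) for y in range(4)]
ordered = sorted(points, key=lambda p: interleave_bits(p, 2))
print(ordered[:4])  # [(0, 0), (1, 0), (0, 1), (1, 1)]

# With more Z-ordered columns, one column's bits are spread further apart in the key.
print(column_bit_positions(0, 1, 3))  # [0, 1, 2]
print(column_bit_positions(0, 3, 3))  # [0, 3, 6]
```

Sorting by the key groups rows by all Z-ordered columns at once. As columns are added, each column's bits are interleaved with more bits from the other columns, so rows with nearby values in any single column end up less tightly grouped, which is one way to see why Z-ordering only the columns you actually use pays off.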
