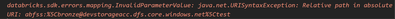

databricks-connect, dbutils, abfss path, URISyntaxException

When trying to use `dbutils.fs.cp` in the #databricks-connect context to upload files to Azure Data Lake Gen2, I get a malformed URI error. I have used the code provided here: https://learn.microsoft.com/en-gb/azure/databricks/dev-tool...

- 1631 Views

- 3 replies

- 1 kudos

Hi @KrzysztofPrzyso, It appears that you’re encountering an issue with relative paths in absolute URIs when using `dbutils.fs.cp` in the context of Databricks Connect to upload files to Azure Data Lake Gen2. Let’s break down the problem and explore po...

- 1 kudos
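
For anyone landing here with the same error, below is a minimal sketch of one way to check an `abfss` destination on the client before handing it to `dbutils.fs.cp`. It is not taken from the thread and does not claim to be the root cause in this case. It only illustrates the "relative path in absolute URI" idea from the reply: a URI with a scheme should carry a path that starts with `/`. The helper names `build_abfss_uri` and `looks_well_formed`, and the container, account, and file names, are hypothetical placeholders.

```python
from urllib.parse import urlparse


def build_abfss_uri(container: str, account: str, path: str) -> str:
    """Return an abfss:// URI whose path component starts with '/'."""
    # Normalise Windows-style separators and strip any leading slashes,
    # then join with exactly one '/' so the path is absolute.
    clean_path = path.replace("\\", "/").lstrip("/")
    return f"abfss://{container}@{account}.dfs.core.windows.net/{clean_path}"


def looks_well_formed(uri: str) -> bool:
    """Cheap client-side check: abfss scheme, an authority, an absolute path."""
    parsed = urlparse(uri)
    return (
        parsed.scheme == "abfss"
        and bool(parsed.netloc)
        and parsed.path.startswith("/")
    )


# Hypothetical values, for illustration only.
uri = build_abfss_uri("mycontainer", "myaccount", "landing\\data.csv")
print(uri, looks_well_formed(uri))
```

If the check passes and `dbutils.fs.cp` still raises `URISyntaxException`, the source argument (for example a local Windows path) is worth inspecting in the same way.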