Summit

Having a great time at the data summit! It is an amazing experience and is organized very well! #databricks

- 214 Views

- 0 replies

- 0 kudos

Having a great time at the data summit! It is an amazing experience and is organized very well! #databricks

I have 2 datasets getting loaded into a common silver table. These are event driven and notebooks are triggered when a file is dropped into the storage account. When the files come in at the same time, one dataset fails with concurrent append excepti...

Databricks provides ACID guarantees since the inception of Delta format. In order to ensure the C - Consistency is addressed, it limits concurrent workflows to perform updates at the same time, like other ACID compliant SQL engines. The key differenc...

Could someone point me at right direction to deploy Jobs from one workspace to other workspace using josn file in Devops CI/CD pipeline? Thanks in advance.

Your are welcome. There was a feature that databricks released to linked the workflow definition to the GIT automatically. Please refer the link below,https://www.databricks.com/blog/2022/06/21/build-reliable-production-data-and-ml-pipelines-with-git...

No difference, both are very similar only difference is that in the SQL editor you cannot take advantage of things like SQL serverless clusters, better visibility of data objects and a more simplified exploration tooling. SQL in notebooks mostly run ...

What's the effort involved with converting a databricks standard workspace to a premium? Is it 1 click of a button? Or are there other considerations you need to think about?

It’s pretty easy! You can use the Azure API or the CLI. To upgrade, use the Azure Databricks workspace creation API to recreate the workspace with exactly the same parameters as the Standard workspace, specifying the sku property as Premium. To use t...

I'm trying to download a PDF file and store it in FileStore using this code in a Notebook: with open('/dbfs/FileStore/file.pdf', 'wb') as f: f.write(requests.get('https://url.com/file.pdf').content) But I'm getting this error:FileNotFoundError: [...

Hello,I'm exercising a migration of an azure delta table (10TB) from Azure Standard performance tier to Azure Premium. The plan is to create a new storage account and copy the table into it. Then we will switch to the new table. The table contains r...

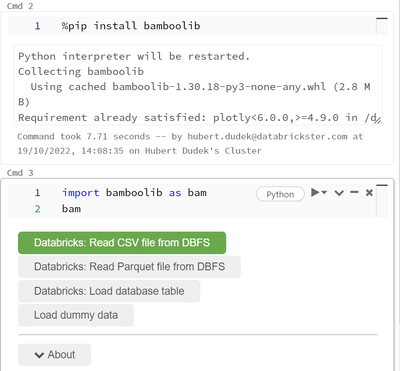

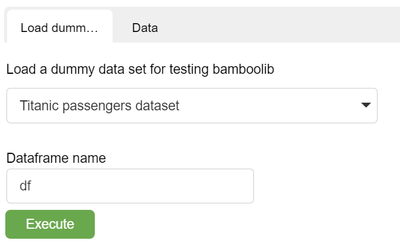

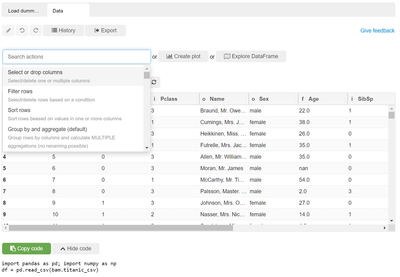

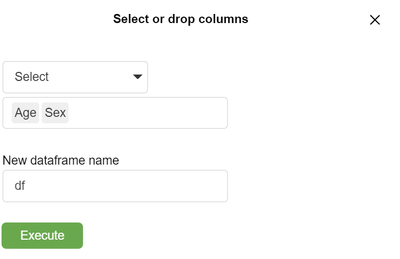

Bamboolib with databricks, low-code programming is now available on #databricksNow you can prepare your databricks code without ... coding. Low code solution is now available on Databricks. Install and import bamboolib to start (require a version of ...

I have tried to load parquet file using bamboolib menu, and getting below error that path does not existI can load the same file without no problem using spark or pandas using following pathciti_pdf = pd.read_parquet(f'/dbfs/mnt/orbify-sales-raw/Wide...

help us to understand how integrate third party app such as M365 to databricks

Try using "partner connect" API by databricks. They have manual connect and automatic connect options for this. databricks have individual partner connect api for each third application. Check if M365 supports this type of integration with databricks...

Can I use SQL to create a table and set and expiration date? So that the table will be deleted automatically after the expiration date.

Right now it i not possible. You can always create a custom trigger solution with parameter to call notebook and delete the table.

Props to the training team for the Data Engineering track at AI Summit, learned a ton and got some great experience!

YES. You can use this script to do it%run ./path_to_other_notebook

We are planning to load 2 million plus jsons into prod. How should we plan for any intermittent failures and complete loads without performance issues.

This is Rajani

| User | Count |

|---|---|

| 1601 | |

| 736 | |

| 343 | |

| 284 | |

| 247 |