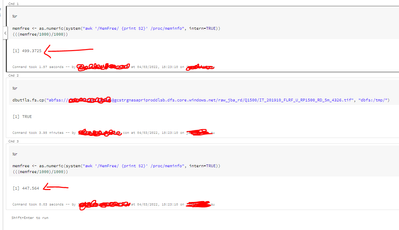

Higher Order Function: AGGREGATE not working in the example notebook mentioned in Documentation

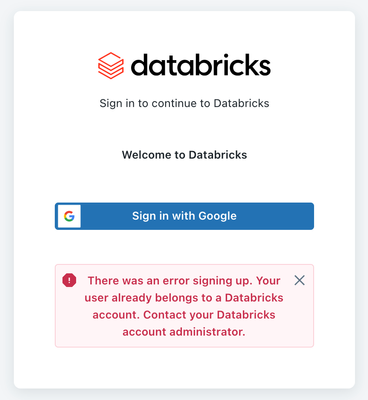

Hi All,I am running a sample notebook from Databricks Documentation section on Higher Order Function on my community edition. I am running this notebook on DBR 12.2 LTS.Databricks Documentation URL : https://docs.databricks.com/optimizations/higher-o...

- 641 Views

- 0 replies

- 0 kudos